Empathetic testing

MP 72: What exactly are units, and how do we test them?

Note: This post was inspired by a talk at DjangoCon US 2023, but it is not specific to Django.

I went to DjangoCon US 2023 in Durham this fall, and really enjoyed the talks I was able to attend in person. But there were a bunch more I wanted to see, and now that the recordings are out I’ve been working my way through the playlist.

One of the first talks I wanted to watch was Empathetic testing, by Marc Gibbons. It really resonated with my thoughts about testing, so I wanted to share some of his perspectives, and offer some reactions to the points he made.

What is a unit, anyway?

If you’ve dealt with testing at all, you almost certainly started with unit testing. Before reading further, take a moment and ask yourself what a unit is. Try to commit to a specific answer before reading on.

Despite what many people probably think, the term unit in unit testing doesn’t have a concrete definition that everyone agrees on. I want to keep this post short, so I’m just going to share my own working definition:

A unit is a block of code that’s worth testing in isolation.

Notice the phrasing here: block of code. I would guess that many people would define a unit as a function. But I’ve seen plenty of functions that are clearly larger than what I think of as a unit.[1]
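To make that concrete, here’s a hypothetical example. The names and the loan calculation are illustrative, not code from the talk. `loan_message()` is a single function, but it contains three blocks of code: parsing input, calculating a payment, and formatting a message. Only the calculation seems worth testing in isolation, so in this sketch it lives in its own function, `monthly_payment()`.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Level monthly payment for a fixed-rate loan (hypothetical helper).

    This is the block of code worth testing in isolation.
    """
    r = annual_rate / 12  # assumes the APR is split evenly across 12 months
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def loan_message(raw_amount: str, raw_rate: str, raw_months: str) -> str:
    """Parse user input, compute the payment, and format a message.

    Parsing and formatting are thin glue around the calculation, so this
    function is bigger than a unit even though it is a single function.
    """
    principal = float(raw_amount.replace(",", "").lstrip("$"))  # "$15,000" -> 15000.0
    annual_rate = float(raw_rate.rstrip("%")) / 100              # "6%" -> 0.06
    months = int(raw_months)
    payment = monthly_payment(principal, annual_rate, months)
    return f"${payment:,.2f} per month for {months} months"
```

A unit test for `monthly_payment()` can focus on numbers, while `loan_message()` might only need a light check, or coverage from a higher-level test.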

Signs of a broken test suite

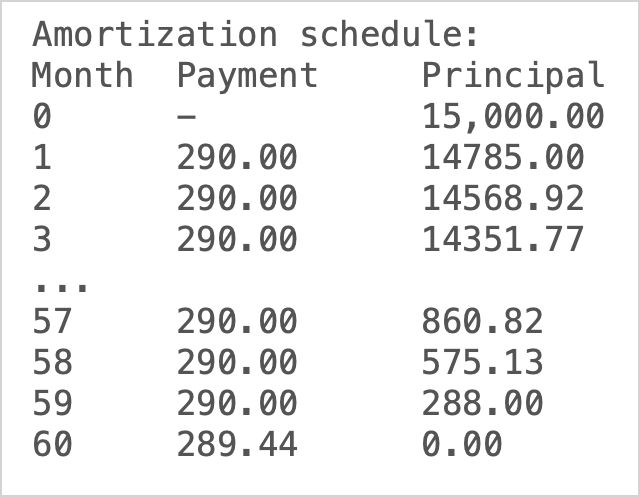

I enjoy watching tech talks because of all the real-world stories people share to put their bigger points in context. In this talk, Marc tells a story of a really broken test suite. The context was software for a financial firm, and the example he focused on was a section of code that calculated the amortization table for paying off a loan.[2]

Marc’s team wanted to start using NumPy for some of their financial calculations. After some refactoring, the existing tests all passed, but the site was broken for end users. They fixed the code so the site worked again, and then the tests failed. This is exactly the opposite of how tests should work! The tests were passing when the site was broken, and failing when the site was working.

It’s important to note that this was not a terrible test suite. The test suite had served its purpose for some time before this refactoring work. An imperfect test suite that’s run regularly is much better than a perfect test suite that hasn’t been written yet.

Avoid implementation bias

When Marc was discussing the broken test suite, he described it like this:

Our tests aren’t actually testing how things work; they’re testing the way the thing was built.

While I’ve been aware of this kind of issue with tests, I hadn’t heard it named before. Marc’s larger point was to avoid implementation bias. A unit test should verify the behavior of a unit. It’s rarely important, or even valuable, to verify the implementation of a unit. Yet it’s easy to slip into writing implementation-focused tests if you’re not aware of the problems they can create.

The test suite he was working on broke because some of the tests verified the behavior of units in a way that depended too heavily on how those units were implemented. A brittle test is technical debt: it’s helpful only as long as the current implementation stays in place. A test written with implementation bias hinders refactoring, rather than supporting the evolution of the codebase.
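Here’s a small, hypothetical illustration of the difference, using Python’s built-in `unittest` and `unittest.mock`. The payment function and its `_growth_factor` helper are made up for this example; they aren’t the code from Marc’s talk. The first test class checks how the payment is computed, by asserting that an internal helper gets called. The second checks what the function returns.

```python
import unittest
from unittest import mock


def _growth_factor(rate: float, months: int) -> float:
    """Hypothetical internal helper: what $1 grows to over the term."""
    return (1 + rate) ** months


def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Level monthly payment for a fixed-rate loan (hypothetical)."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    g = _growth_factor(r, months)
    return principal * r * g / (g - 1)


class ImplementationBiasedTest(unittest.TestCase):
    """Checks HOW the payment is computed. Brittle under refactoring."""

    def test_calls_growth_factor(self):
        fake = mock.Mock(return_value=2.0)
        # Swap the helper in this module's namespace for the duration of the
        # test. In a real project this would usually be
        # mock.patch("yourmodule._growth_factor") (module name hypothetical).
        with mock.patch.dict(globals(), {"_growth_factor": fake}):
            monthly_payment(15_000, 0.06, 60)
        fake.assert_called_once()


class BehaviorTest(unittest.TestCase):
    """Checks WHAT the payment is. Survives refactoring."""

    def test_known_car_loan(self):
        # $15,000 at 6% APR over 60 months is about $289.99 per month.
        self.assertAlmostEqual(monthly_payment(15_000, 0.06, 60), 289.99, places=2)

    def test_zero_interest(self):
        self.assertAlmostEqual(monthly_payment(1_200, 0.0, 12), 100.0)
```

If a refactor inlines the helper, the first test fails even though every payment is unchanged. If a refactor breaks the formula but still calls the helper, the first test keeps passing. Only the behavior tests track what users actually care about. Assuming the file is saved as `test_payments.py`, you can run both classes with `python -m unittest test_payments`.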

Empathetic testing

Marc had an interesting take on how to address this kind of brittleness in tests. Rather than just telling people to test behaviors independently of the implementation, he suggested that people approach testing from an empathetic perspective. He encouraged people to think about the future maintainers of the projects we work on: not just to picture them running our test suite, but to recognize that they’ll probably be able to write better code than we’re writing now.

Some people laugh at these kinds of suggestions. They might say things like, “It’s just code, there’s no feelings here!” But programming is full of feelings. It takes a bit of ego to write working code in the first place.

Every time we sit down at a keyboard, there’s some part of us that says, “I am the one who can solve this problem!” We need some pride and hubris in order to start working on a project. Marc’s point was that another voice should remind us, “Someone may well come along and improve this implementation at some point.” Our tests should serve us while we’re writing them, but they should also serve the future developers who see the problem even more clearly than we do. (And sometimes, we are that future contributor.)

Conclusions

I have a number of takeaways from watching this talk, and reflecting on the points that were brought up:

- Testing is almost always about verifying behaviors, not implementations.

- We need to be thoughtful about the tests we write, even when writing small unit tests. We can ask ourselves: will this test support refactoring, or hinder it?

- Think clearly about the “API” of your smallest pieces of code. If a function’s external usage is consistent and stable, we can write unit tests that interact with the function in a stable and non-brittle way. This holds true even as the code inside the function gets refactored.
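Here is a minimal sketch of that idea, with hypothetical names: two interchangeable implementations of the same payment function, checked by one set of behavior tests. The tests only call the public signature and inspect results (a known payment, and the fact that paying it every month leaves no balance), so either implementation can replace the other without touching the tests. The plain `assert` style assumes a runner like pytest, which collects `test_*` functions.

```python
def payment_closed_form(principal: float, annual_rate: float, months: int) -> float:
    """Original implementation: the standard level-payment formula."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def payment_by_discounting(principal: float, annual_rate: float, months: int) -> float:
    """Refactored implementation: divide the principal by the present value
    of $1 paid at the end of each month. Same signature, same results."""
    r = annual_rate / 12
    present_value_of_one = sum((1 + r) ** -k for k in range(1, months + 1))
    return principal / present_value_of_one


def remaining_balance(principal: float, annual_rate: float, months: int, payment: float) -> float:
    """Add a month of interest, then subtract the payment, for every month."""
    r = annual_rate / 12
    balance = principal
    for _ in range(months):
        balance = balance * (1 + r) - payment
    return balance


def check_payment_behavior(payment_fn) -> None:
    """Behavior-only checks: they never look inside payment_fn."""
    # $15,000 at 6% APR for 60 months should cost $289.99 per month.
    assert round(payment_fn(15_000, 0.06, 60), 2) == 289.99
    # With no interest, the payment is just principal / months.
    assert abs(payment_fn(1_200, 0.0, 12) - 100) < 1e-9
    # Paying the computed amount every month should leave less than a cent owed.
    for principal, rate, months in [(15_000, 0.06, 60), (250_000, 0.045, 360)]:
        payment = payment_fn(principal, rate, months)
        assert abs(remaining_balance(principal, rate, months, payment)) < 0.01


def test_both_implementations() -> None:
    for payment_fn in (payment_closed_form, payment_by_discounting):
        check_payment_behavior(payment_fn)
```

Because the checks only depend on inputs and outputs, they act as a safety net during the refactor instead of an obstacle to it.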

Testing is not separate from programming. Writing, using, and understanding a test suite will help you understand your code better, and think more carefully about the overall structure of your project as well.

Notes

[1] Interestingly, your working definition of a unit can help you decide how big your functions should be. If a block of code is worth testing, that block of code probably deserves to be in its own function. Similarly, if a block of code doesn’t need its own unit test, that block might not need to be in a function of its own.

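[2] If you’re unfamiliar with this term, an amortization table lists the payments someone needs to make in order to pay off a loan on time, usually showing how much of each payment goes toward interest, how much goes toward principal, and the balance that remains.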

As a math teacher, this was one of my favorite things to teach students. Give them a loan amount, say $15,000 for a vehicle they want to buy. Give them an APR, say 6%. Finally, give them a term length, such as 60 months. Ask them how much they’ll need to pay each month in order to pay off the loan in 60 months.
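For reference, one standard way to answer that question is the level-payment formula. This worked example assumes the common convention that the APR is split evenly across 12 monthly periods, so the monthly rate is r = APR / 12; P is the loan amount and n is the number of payments:

$$
M = \frac{P\,r}{1 - (1 + r)^{-n}}, \qquad P = 15{,}000,\quad r = \frac{0.06}{12} = 0.005,\quad n = 60
$$

$$
M = \frac{15{,}000 \times 0.005}{1 - 1.005^{-60}} \approx \frac{75}{0.25863} \approx 289.99
$$

So the payment comes to about $289.99 per month, or roughly $2,400 in interest over the life of the loan.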

You can do this with a spreadsheet. I love showing people how to do this, because it pulls back the curtain on how banks and other financial institutions come up with these kinds of numbers. Anyone who can analyze a loan like this is less likely to be taken advantage of financially. The software Marc was talking about automates these kinds of calculations.
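The spreadsheet approach can also be sketched in a few lines of Python. This is a minimal illustration, not the software Marc described: `amortization_table` and `Payment` are hypothetical names, and the sketch keeps unrounded floats. Real financial code would typically use `decimal.Decimal`, round each payment to the cent, and adjust the final payment.

```python
from dataclasses import dataclass


@dataclass
class Payment:
    """One row of the table (hypothetical structure)."""
    month: int
    payment: float
    interest: float
    principal: float
    balance: float


def amortization_table(principal: float, annual_rate: float, months: int) -> list[Payment]:
    """Build a month-by-month amortization table for a fixed-rate loan."""
    r = annual_rate / 12  # assumes the APR is split evenly across 12 months
    if r == 0:
        payment = principal / months
    else:
        payment = principal * r / (1 - (1 + r) ** -months)

    balance = principal
    rows = []
    for month in range(1, months + 1):
        interest = balance * r              # interest accrued this month
        principal_paid = payment - interest  # the rest reduces the balance
        balance -= principal_paid
        rows.append(Payment(month, payment, interest, principal_paid, balance))
    return rows


if __name__ == "__main__":
    table = amortization_table(15_000, 0.06, 60)
    for row in table[:3]:
        print(f"{row.month:>3}  {row.payment:8.2f}  {row.interest:7.2f}  "
              f"{row.principal:8.2f}  {row.balance:10.2f}")
    total_interest = sum(row.interest for row in table)
    print(f"Total interest: {total_interest:,.2f}")
```

Each row mirrors a spreadsheet row: early payments are mostly interest, and later payments are mostly principal.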