Testing a book's code

MP 76: Testing code for a book is different from testing a standard programming project, but it raises many issues that are relevant to testing in general.

Note: This is the first post in a seven-part series about testing.

Maintaining a technical book is more challenging than many people realize. If you want a technical book to stay relevant, it’s never really finished. You have to keep an eye on the code to make sure it hasn’t gone out of date. When that inevitably happens, you need a plan for communicating updates to readers, and a plan for how to approach new editions.

Just as in more traditional software projects, automated testing can (and should) play a significant role in making sure a book’s code stays up to date. In this series I’ll share my current approach to testing the code for Python Crash Course. My workflow has evolved significantly over the course of three editions and almost ten years. There’s a lot of overlap between testing traditional software projects and testing code for a book, but there are interesting differences as well.

This series will have obvious relevance to current authors and to people who are considering writing a technical book. But even if you have no interest in writing, there are many elements of this work that are relevant to testing in general. For example, this series will touch on all of the following:

- How to plan a testing suite that meets your actual needs, rather than one that simply exercises as much code as possible;

- How to use parametrization to write a group of similar tests efficiently;

- How to test visual output such as plots;

- How to test programs that depend on user input;

- How to test programs that depend on random values;

- How to address cross-OS issues in testing;

- How to run tests in parallel, to speed up your test suite;

- How to use test artifacts to make debugging and ongoing development easier.

While the context here is testing code from a book, the challenges that come up are relevant to anyone testing a wide variety of projects.

Series overview

For some series on Mostly Python, I draft each post as the series evolves. For others, like this one, I’ve planned out all the posts ahead of time. This series will consist of seven parts:

Part 1: How do you approach setting up a test suite? What kinds of things should you think about, and what decisions should you make, before writing any test code?

Part 2: How do you test a large set of basic Python scripts? How can you use parametrization to do this kind of testing efficiently? (MP #77)

Part 3: How do you test a game project? In general, how do you test a project that has visual output, and depends on user interactions?

Part 4: How do you test code that generates image-based data visualizations with Matplotlib? How do you validate static visual output?

Part 5: How do you test code that generates HTML-based visualizations, when the HTML is slightly different on every test run?

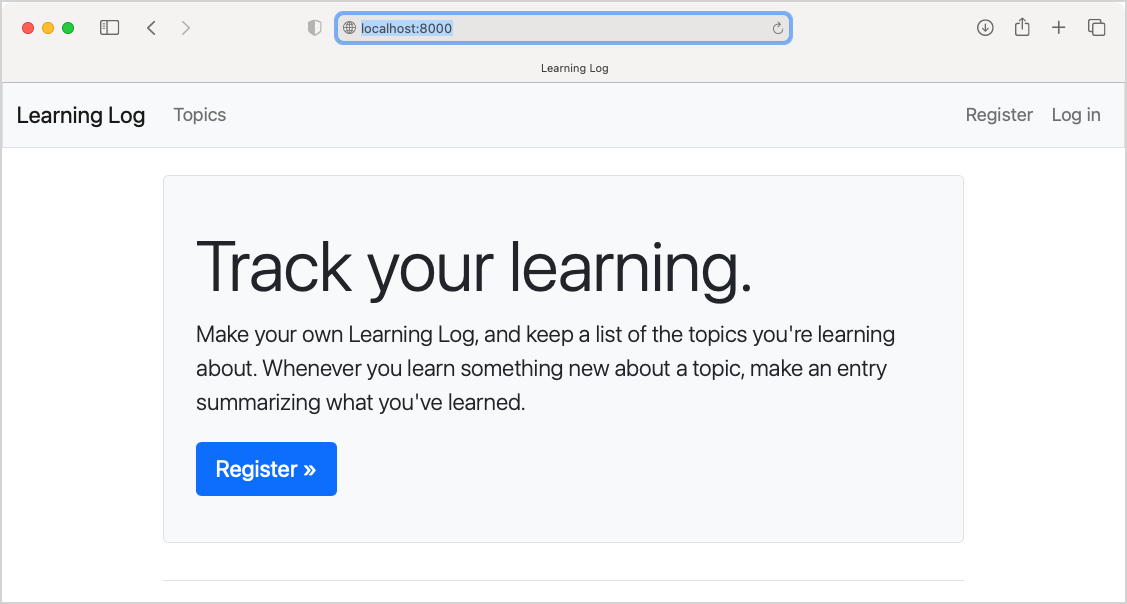

Part 6: How do you test a Django project, with a focus on external behaviors?

Part 7: What are the takeaways from all this testing work?

If you’ve been thinking about testing but haven’t written a test suite of your own yet, I think you’ll find a number of things in here that are helpful to your own work. If you have started to write tests, I believe you’ll find some aspects that look familiar, and some ways of doing things that help you think differently about your own testing routines.

A good testing mindset

I wrote in an earlier post that people should consider writing integration tests before unit tests, for untested projects and projects that are under rapid development. Chris Neugebauer gave a talk at PyCon Australia in 2015 with a similar message. There’s a great quote from that talk that has stayed with me:

If you have any code that can be run at all, there is a way to write tests for it.

A book is full of code. That code can be run, so there must be a way to write tests for it.
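As a minimal sketch of that idea, a first test for a simple script can run it in a subprocess, the same way a reader would, and then check its exit code and output. The file `hello.py` and its expected output are hypothetical stand-ins that the test writes itself. They aren't files taken from the book's repository.

```python
import subprocess
import sys
from pathlib import Path


def run_program(path: Path) -> subprocess.CompletedProcess:
    """Run a Python file with the current interpreter, capturing its output."""
    return subprocess.run(
        [sys.executable, str(path)],
        capture_output=True,
        text=True,
        timeout=60,
    )


def test_hello(tmp_path):
    # Hypothetical program standing in for one of the book's scripts.
    program = tmp_path / "hello.py"
    program.write_text('print("Hello Python world!")\n')

    result = run_program(program)

    # The program should exit cleanly and print exactly what the text shows.
    assert result.returncode == 0
    assert result.stdout == "Hello Python world!\n"
```

Nothing about the program has to change for this to work. The test only needs to be able to run the code and observe what it does.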

A brief history of testing Python Crash Course

When Python Crash Course first came out in 2015, I didn’t have any automated tests written for it. I posted all the code for the book online, and when readers pointed out inevitable mistakes I updated the repository, and made notes to fix those mistakes in the book the next time it went out for a new print run. With this approach, the book steadily got better over time.

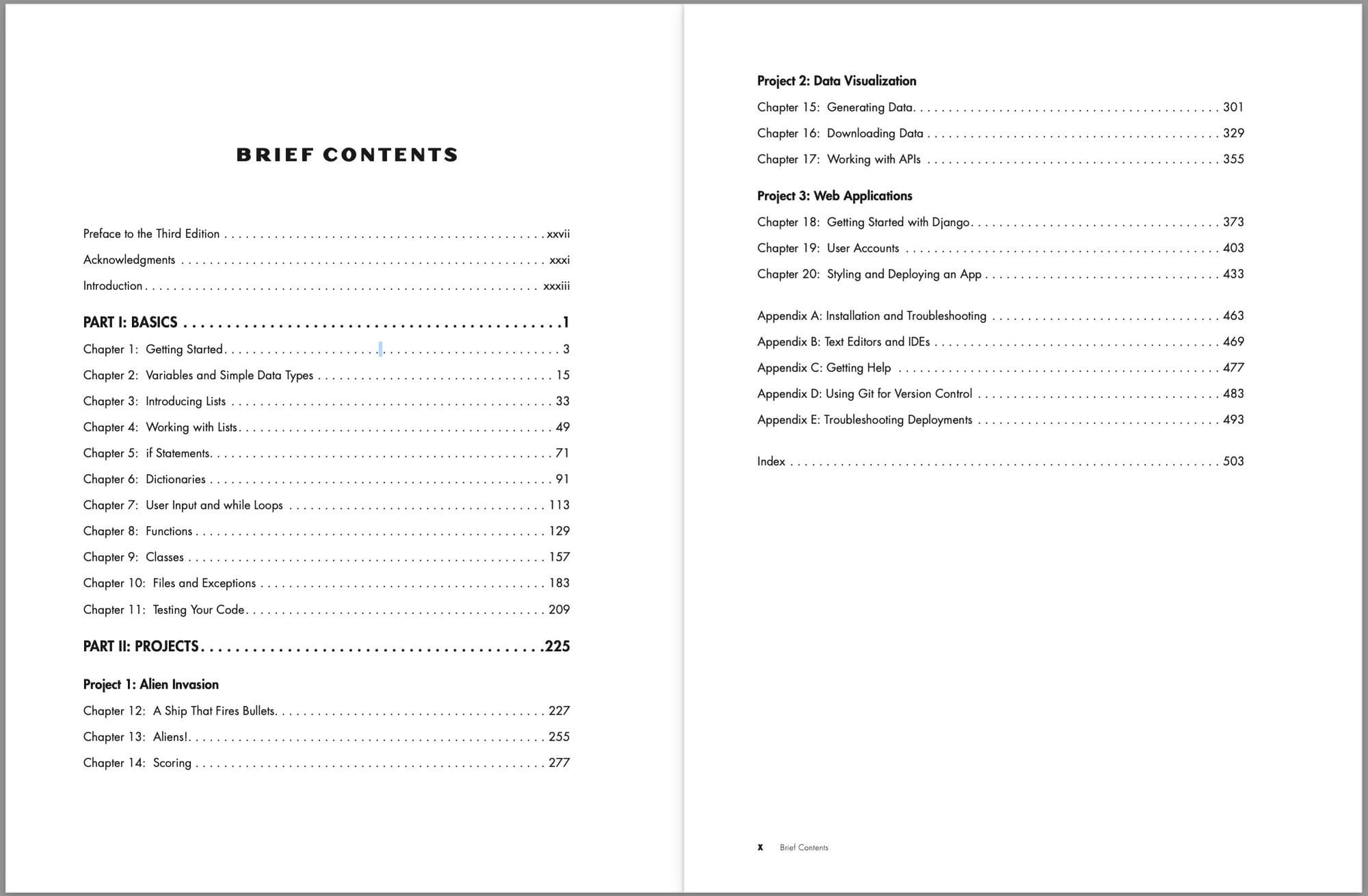

Python Crash Course is really two books in one. The first half of the book is an introduction to the basics of Python and the fundamentals of programming. The second half of the book is a walk-through of three different projects: a video game, a series of data visualizations, and a web app that goes all the way through deployment.

This means the book’s longevity depends not only on how Python evolves, but also on the ongoing development of third-party libraries for writing games, data visualizations, and web apps. Testing any one of these isn’t always straightforward; testing all of these in one comprehensive test suite is an interesting challenge.

Most of my early testing work for the book was manual. When a new version of Python was released, or a new version of any of the libraries the book depends on was released, I’d manually set up a virtual environment and run the code for each of the projects in the book. This worked, but it wasn’t particularly efficient. There were definitely a few times I didn’t do this work ahead of time, and just crossed my fingers that a new version of a library wouldn’t break the book’s code.

For the second edition of the book, which came out in 2019, I tried to write a proper test suite for the book. I wrote code that walked the entire repository for the book’s code, and tried to run all the files in the repository. That sort of worked, but it was a clunky mess of trying to exclude files that didn’t really need to be tested, or weren't testable in a straightforward way.

The third edition came out in January 2023, and I’d learned a lot more about testing since the second edition. This time I laid out the goals I want to achieve with a test suite, and set out to build a set of tests that would do exactly that. Instead of walking through the repository and trying to test every file, I wrote a test suite that specifies exactly which files should be tested, and how. I’m really pleased with the result.

An ideal test suite

An ideal test suite would let me do the following:

Run all the most significant programs in the book. We often hear about the ideal of 100% test coverage, but that’s just not necessary with a book. For example, I have folders called partial_programs/, which show all the versions of each file as it’s being developed in the text of the book. If the final version of each of these programs works, I’m confident enough in all the partial versions.

Run the test suite against different versions of Python, including release candidates. This is really important; I want to know that the code for the book will work on the latest version of Python. I want to test against release candidates of new versions, rather than waiting for the full public release of each new version.

Run the test suite against any version of any library used in the book. This is critical as well. The book covers pytest, Pygame, Matplotlib, Plotly, Requests, and Django. All these libraries have different lifecycles, and I need an easy way to test the projects in the book against any version of these libraries, including release candidates.

Drop into an active environment for a specific project. When maintaining the book, it gets quite tedious to set up an environment for a project in order to run it and look at certain aspects of that project. An ideal test suite will let me run the tests, and then drop into an active environment for any project in the book. This goes beyond testing; it’s testing that leaves artifacts behind that I can easily work with in a variety of ways. This is especially helpful when readers report possible issues, and the tests don’t necessarily cover the issue, but they do set up an environment where it would be easy to check out that issue.

Run on macOS, Windows, and Linux. Readers use all these platforms, so I need to be able to test on each of these platforms as well.
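The "leave an environment behind" goal can be sketched in a minimal way. The sketch also has to handle one cross-platform detail: Windows puts a virtual environment's interpreter in a different folder than macOS and Linux do. The helper names `venv_python()` and `build_project_env()` are hypothetical. The main design choice is to build the environment in a directory the tester chooses, rather than in a throwaway temporary directory.

```python
import subprocess
import sys
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Return the interpreter path inside a virtual environment.

    Windows puts executables in Scripts\\; macOS and Linux use bin/.
    """
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def build_project_env(env_dir: Path, requirements=(), with_pip=True) -> Path:
    """Create a virtual environment that outlives the test run.

    env_dir is chosen by the caller, so the environment is still there
    afterward for manual exploration of a project.
    """
    venv.create(
        env_dir,
        clear=True,
        # Mirror the venv CLI: symlinks everywhere except Windows.
        symlinks=(sys.platform != "win32"),
        with_pip=with_pip,
    )
    python = venv_python(env_dir)
    if requirements:
        # e.g. requirements=["Django==5.0a1"] to try a pre-release.
        subprocess.run(
            [str(python), "-m", "pip", "install", *requirements],
            check=True,
        )
    return python
```

After a test run, the leftover environment can be activated by hand. On macOS and Linux the command is `source <env_dir>/bin/activate`, and on Windows it is `<env_dir>\Scripts\activate`.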

Ideal test commands

It can be helpful to imagine the kinds of commands you’d like to be able to run if your ideal test suite already existed. These are the kinds of commands I’d like to be able to run for this project:

$ pytest tests/

$ pytest tests/test_django_project.py

$ pytest tests/test_django_project.py --django-version 5.0a1

Having a sense of what kinds of commands we want to run points us toward the testing files we should start to write, and the kinds of features we should be looking to build into the test suite. Here I want an overall tests/ directory, and I want individual test files for each of the major projects in the book. I also want to be able to include CLI arguments that let me specify exactly which version of a library to use for any given test run.
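pytest doesn't provide a `--django-version` option out of the box, so a project has to add it itself. The following is a minimal sketch of a `conftest.py` in tests/ that does this with pytest's `pytest_addoption` and `pytest_report_header` hooks. The fixture and helper names (`django_requirement`, `requirement_spec`) are hypothetical. Installing the resulting requirement is left to the tests that use it.

```python
# conftest.py: a sketch of adding a --django-version option to pytest.
import sys

import pytest


def requirement_spec(package, version=None):
    """Return 'package==version', or just 'package' to get the latest release."""
    return f"{package}=={version}" if version else package


def pytest_addoption(parser):
    # Register the custom command-line option with pytest.
    parser.addoption(
        "--django-version",
        action="store",
        default=None,
        help="Django version to install for the Django project tests.",
    )


@pytest.fixture(scope="session")
def django_requirement(request):
    """Pip requirement built from --django-version, e.g. 'Django==5.0a1'."""
    return requirement_spec("Django", request.config.getoption("--django-version"))


def pytest_report_header(config):
    # Printed at the top of every run, so the output records what was tested.
    requested = config.getoption("--django-version") or "latest"
    return [f"python: {sys.version.split()[0]}", f"django: {requested}"]
```

A test module could then request `django_requirement` and pass it to `pip install` when building the Django project's environment. Running with `--django-version 5.0a1` would test against that pre-release, and a plain run would use the latest release.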

The overall plan

In most software projects, the test suite lives alongside the project’s code, but that doesn’t quite work for this kind of testing. The main repository for the book is really for readers; trying to include a full author-focused testing suite in that repository is not ideal. I also don’t want to maintain these tests in public. They sometimes highlight issues that I recognize as minor, but that might look like significant breakage to people who don’t have the same perspective on the code and the purpose it serves.

When making an overall plan for the test suite, I also have to wrestle with one aspect of testing code for a book that’s different from testing standard projects. In a standard project, you’re somewhat free to change the code to make it more testable; this is one of the benefits of starting a test suite early on in a project. But a book’s code is “frozen”. It’s printed in physical books, and it’s developed with a higher priority on pedagogical goals than on performance or testability. So we need to come up with a way of testing that deals with all the code as it is. This isn’t entirely unique to testing code from a book. You may need to write tests for a project that includes code that you or your team don’t have direct control over.

Here’s the approach I came up with:

- Make a private fork of the book’s public repository.

- Add any files I want to this repository, to support testing.

- Don’t remove or modify any file that’s in the public repository. If I need to change something for testing purposes, make a copy of that resource and modify it during the test run.

- When I make changes in the book’s code for new printings, pull those changes into this repository as well.

- Never push anything from the test repository to the upstream public repository.
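The rule against modifying original files can be sketched as a helper that copies a file into a test's temporary directory and edits only the copy. The replacement shown here swaps a blocking `plt.show()` call for `plt.savefig()`. It is a hypothetical example of a testing-only change, and it isn't necessarily how the book's test suite handles Matplotlib.

```python
import shutil
from pathlib import Path


def copy_and_patch(source: Path, dest_dir: Path, old: str, new: str) -> Path:
    """Copy source into dest_dir, then replace old with new in the copy only.

    The original file is never opened for writing, so the public
    repository's code stays exactly as printed in the book.
    """
    dest = dest_dir / source.name
    shutil.copy(source, dest)
    contents = dest.read_text()
    if old not in contents:
        # Fail loudly if the book's code changed and the patch no longer applies.
        raise ValueError(f"{old!r} not found in {source}")
    dest.write_text(contents.replace(old, new))
    return dest


def test_patched_copy(tmp_path):
    # Hypothetical stand-in for one of the book's plotting scripts.
    book_dir = tmp_path / "book"
    book_dir.mkdir()
    original = book_dir / "plot.py"
    original.write_text("import matplotlib.pyplot as plt\nplt.show()\n")

    work_dir = tmp_path / "work"
    work_dir.mkdir()
    patched = copy_and_patch(
        original, work_dir, "plt.show()", "plt.savefig('plot.png')"
    )

    # The copy is modified; the original is untouched.
    assert "plt.savefig('plot.png')" in patched.read_text()
    assert original.read_text().endswith("plt.show()\n")
```

Raising an error when the expected text is missing means a later change to the book's code can't silently turn the patch into a no-op.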

For this series, I’m going to make a public version of the test repository, which I’ll then archive as a demo when the series is complete.

Conclusions

Writing a test suite for the code in a technical book presents a unique challenge, especially if the book covers a variety of different topics, using a number of third-party libraries. However, addressing these unique challenges brings up ideas that are useful to people testing a wide range of projects.

If you’re developing a test suite for a project of your own, take a moment to step back and ask yourself what your larger goals are for the test suite. Don’t fall into the trap of just writing a bunch of tests that exercise most of your code. What do you want to get out of your test runs? What do you want to be able to do immediately after a test run? How do you want to use your tests as part of your development workflow? Tests don’t have to be something you deal with only after writing your code; they can also be useful while you’re actively developing it.

In the next post we’ll write a set of tests that cover all the basic programs in the book. We’ll go through the process of writing the first test, making sure it can fail and then making sure it passes. We’ll use parametrization to quickly generate a large set of similar tests. We’ll also start to use fixtures to implement some features of the test suite that will be used throughout all the test modules.

Note: If you have questions or thoughts to share about testing, please add a comment below, or feel free to reply to this email and I’ll be happy to respond.