Testing a book's code, part 6: Testing a Django project

MP 83: Testing a simple but nontrivial Django project, from a reader's perspective.

Note: This is the sixth post in a series about testing.

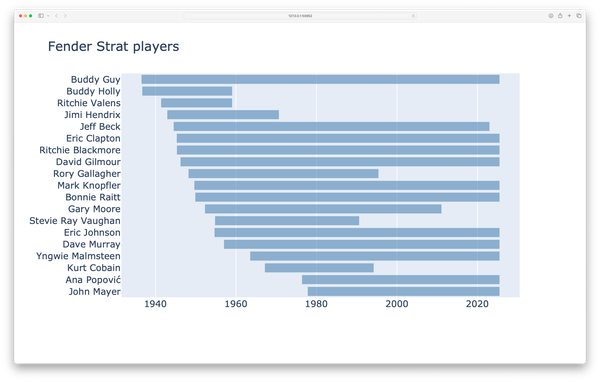

The final project in Python Crash Course is a simple but nontrivial Django project. It’s called Learning Log, and it lets users create their own online learning journals.

When testing a typical Django project, the focus is usually on testing the internal code in the project. I’m not really interested in that kind of testing here. Instead, I want to know that this project works on new versions of Django, which come out every 8(!) months. That’s a quick lifecycle, but it’s manageable because Django takes stability quite seriously.

In this post we’ll develop a single test function for the Learning Log project. It will create a copy of the project, build a separate virtual environment just for testing this project, and test whether the overall project functions as it’s supposed to.

Setting up the test environment

A Django project really needs its own virtual environment. I want this test to reflect how readers run the Learning Log project, so the test is going to make a fresh environment just for this project on every run. It’s then going to take the same actions a reader would when interacting with the project.

Copy project files to temp directory

Here’s the first part of test_django_project.py:

```python
import os, shutil, signal, subprocess
from time import sleep
from pathlib import Path

import requests

import utils
from resources.ll_e2e_tests import run_e2e_test

def test_django_project(tmp_path, python_cmd):
    """Test the Learning Log project."""
    # Copy project to temp dir.
    src_dir = (Path(__file__).parents[1] / "chapter_20"
            / "deploying_learning_log")
    dest_dir = tmp_path / "learning_log"
    shutil.copytree(src_dir, dest_dir)

    # All remaining work needs to be done in dest_dir.
    os.chdir(dest_dir)

    # Build a fresh venv for the project.
    cmd = f"{python_cmd} -m venv ll_env"
    output = utils.run_command(cmd)
    assert output == ""
```

We first copy the project files to a folder called learning_log/ in the temp directory. We then switch to this folder for the rest of the test run. In that folder, we create a new virtual environment. In the book I called that ll_env, so that’s what I’m using here.[1] There shouldn’t be any output after successfully making a new virtual environment, so we assert that there was no output at this point. We’ll do a number of checks like this to make sure the project’s virtual environment is correct, and distinct from the virtual environment used for the overall test suite.
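The post doesn’t show utils.run_command(). If you’re building something similar, here’s a minimal sketch of what such a helper might look like, assuming it should return the command’s combined stdout and stderr as one stripped string, so a silent command returns "". The name mirrors the post, but this body is hypothetical, not the author’s implementation.

```python
import shlex
import subprocess
import sys


def run_command(cmd: str) -> str:
    """Run a command string; return its combined stdout/stderr, stripped.

    Hypothetical stand-in for utils.run_command(). shlex.split() uses POSIX
    shell quoting rules, so Windows paths containing backslashes would need
    different handling.
    """
    result = subprocess.run(
        shlex.split(cmd),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into the same stream
        text=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    python_cmd = shlex.quote(sys.executable)
    print(repr(run_command(f"{python_cmd} -c pass")))  # a silent command gives ''
    print(run_command(f"{python_cmd} -c \"print('hello')\""))
```

Merging stderr into the returned text matters for assertions like `assert output == ""`: if `python -m venv` reported an error on stderr, the assertion would still catch it.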

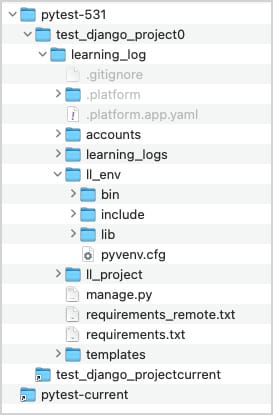

Looking in the temp directory, we can see that the project files have been copied, and the virtual environment has been created:

Checking the new virtual environment

It’s really important to know that the project’s test environment is set up correctly. So we’ll run another check:

```python
def test_django_project(...):
    ...
    # Get python command from ll_env.
    llenv_python_cmd = (dest_dir
            / "ll_env" / "bin" / "python")

    # Run `pip freeze` to prove we're in a fresh venv.
    cmd = f"{llenv_python_cmd} -m pip freeze"
    output = utils.run_command(cmd)
    assert output == ""
```

We need the path to the new virtual environment’s Python executable, which we assign to llenv_python_cmd. We then call pip freeze with this interpreter, and verify there’s no output. If we were accidentally using the overall test environment for the project, pip freeze would generate a bunch of output and this assertion would fail. You can check this yourself by replacing llenv_python_cmd with python_cmd in the call to pip freeze; if you do this, the test will fail.
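An empty pip freeze is one way to prove isolation. Another check, not used in the post, is to ask the new interpreter directly: inside a virtual environment, sys.prefix differs from sys.base_prefix. This sketch also picks the interpreter path by platform, since a Windows venv keeps python.exe in Scripts rather than bin. The helper names venv_python() and is_isolated_venv() are hypothetical.

```python
import os
import subprocess
import sys
import tempfile
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Return the interpreter path inside a virtual environment."""
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def is_isolated_venv(python_path: Path) -> bool:
    """Ask an interpreter whether it's running inside a virtual environment."""
    code = "import sys; print(sys.prefix != sys.base_prefix)"
    result = subprocess.run(
        [str(python_path), "-c", code],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip() == "True"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = Path(tmp) / "ll_env"
        # with_pip=False keeps this demo fast; the real test needs pip.
        venv.create(env_dir, with_pip=False, symlinks=(os.name != "nt"))
        print(is_isolated_venv(venv_python(env_dir)))  # True
```

This complements the pip freeze check rather than replacing it: the prefix check confirms which interpreter is running, while an empty freeze confirms nothing has been installed yet.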

Installing requirements

Now we’re ready to install the requirements in the new venv:

```python
def test_django_project(...):
    ...
    # Install requirements, and requests for testing.
    cmd = f"{llenv_python_cmd} -m pip install -r requirements.txt"
    output = utils.run_command(cmd)
    cmd = f"{llenv_python_cmd} -m pip install requests"
    output = utils.run_command(cmd)

    # Run `pip freeze` again, verify installations.
    cmd = f"{llenv_python_cmd} -m pip freeze"
    output = utils.run_command(cmd)
    assert "Django==" in output
    assert "django-bootstrap5==" in output
    assert "platformshconfig==" in output
    assert "requests==" in output
```

We install everything from the Django project’s requirements.txt file. We also install Requests, so we can test the local project once it’s set up. After installation, we call pip freeze again to verify that everything we needed was installed.
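Substring checks like `"Django==" in output` work well here. If you want the installed versions as data, for example to report them at the end of the test, a small parser over pip freeze output is one option. This is a hypothetical sketch; it normalizes names with the PEP 503 rule (lowercase, with runs of -, _, and . collapsed to a single -), so Django and django match.

```python
import re


def normalize(name: str) -> str:
    """Normalize a distribution name per PEP 503."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_freeze(output: str) -> dict[str, str]:
    """Map normalized package names to versions from `pip freeze` output."""
    packages = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and entries that aren't pinned with ==
        # (editable installs and direct URL references, for example).
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, version = line.split("==", 1)
        packages[normalize(name)] = version
    return packages


def missing_packages(output: str, required: list[str]) -> list[str]:
    """Return the required names that don't appear in the freeze output."""
    installed = parse_freeze(output)
    return [name for name in required if normalize(name) not in installed]


sample = """asgiref==3.5.2
Django==4.1b1
django-bootstrap5==21.3
platformshconfig==2.4.0
"""
print(parse_freeze(sample)["django"])  # 4.1b1
print(missing_packages(sample, ["Django", "django-bootstrap5", "requests"]))  # ['requests']
```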

Migrate and check

Now we can run migrations:

```python
def test_django_project(...):
    ...
    # Run migrations, call check.
    cmd = f"{llenv_python_cmd} manage.py migrate"
    output = utils.run_command(cmd)
    assert "Operations to perform:... OK" in output

    cmd = f"{llenv_python_cmd} manage.py check"
    output = utils.run_command(cmd)
    assert "System check..." in output
```

We call manage.py migrate and manage.py check. These are both steps that readers take with the project, and after each step we make an assertion about the expected output. When this test fails, we want to know exactly what step didn’t work. (Note that the strings in the assert statements shown here are truncated.)

Running the project

In local development, people often use Django’s built-in development server like this:

```
$ python manage.py runserver
...
Starting development server at http://127.0.0.1:8000/
Quit the server with CONTROL-C.
```

In the simplest usage of the development server, the server process continues to run in the foreground until we quit the server by pressing Ctrl-C. We’re going to use the development server for testing, but we’ll need to manage it carefully to make sure it starts up and shuts down properly.

Starting the development server

Here’s the code that starts the server, and verifies that we can connect to it:

```python
def test_django_project(...):
    ...
    # Start development server.
    # To verify it's not running after the test:
    # macOS: `$ ps aux | grep runserver`
    #
    # Log to file, so we can verify we haven't connected to a
    # previous server process, or an unrelated one.
    # shell=True is necessary for redirecting output.
    # start_new_session=True is required to terminate
    # the process group.
    runserver_log = dest_dir / "runserver_log.txt"
    cmd = f"{llenv_python_cmd} manage.py runserver 8008"
    cmd += f" > {runserver_log} 2>&1"
    server_process = subprocess.Popen(cmd, shell=True,
            start_new_session=True)
```

I want to make sure that whenever the test run starts a server process, that process gets terminated by the time the test suite is finished running. I added a longer comment than usual to remind myself how to look for these ghost server processes. I also put in a reminder of why I’m writing the server output to a log file, and why the call to Popen() has the arguments that are used here. I usually leave comments out of listings in newsletter posts, but these are exactly the kinds of comments that are helpful to see from time to time.

We’ll need to verify that we’ve connected to the correct server. We want to make sure we haven’t accidentally connected to a server running for a different project, or a stale process from a previous test run. There are a bunch of ways to do this. The simplest cross-platform solution I’ve found is to redirect runserver’s output to a log file, which we can then check while the server process is still running. If you want to read from stdout or stderr while a process is running, you’d need to use async or threaded code. I’d like to avoid that if possible, and writing to a log file is much simpler in this testing scenario. It also generates another testing artifact we can make assertions against, and inspect manually after a test run if we want.[2]

Here’s the form of the runserver command we’re going to run:

```
$ python manage.py runserver 8008 > runserver_log.txt 2>&1
```

I often have a project running on port 8000, the default port that runserver uses, so I’m testing on port 8008. This command redirects both stdout and stderr to the specified log file.

When we start the server process, we need to maintain a connection to the process until we’re finished testing. To do this, we need to use subprocess.Popen() instead of the simpler subprocess.run(), which waits for the command to finish before returning. Using the shell’s redirection syntax (`>` and `2>&1`) requires the shell=True argument. The start_new_session=True argument starts the server in a new session, and therefore in its own process group, so we can later terminate the main server process along with all the child processes it spawns.
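An alternative to shell=True, not what the post uses, is to open the log file yourself and hand it to Popen() as stdout, with stderr=subprocess.STDOUT playing the role of `2>&1`. The command then becomes a list of arguments with no shell involved. Here’s a minimal sketch; the helper name start_logged_process() is hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def start_logged_process(args: list[str], log_path: Path) -> subprocess.Popen:
    """Start a long-running process with stdout and stderr sent to log_path."""
    with open(log_path, "w") as log_file:
        # The child gets its own copy of the file descriptor, so closing the
        # parent's handle when this block ends doesn't affect the child.
        return subprocess.Popen(
            args,
            stdout=log_file,
            stderr=subprocess.STDOUT,  # same effect as 2>&1
            start_new_session=True,    # own process group, for os.killpg() (POSIX)
        )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log_path = Path(tmp) / "runserver_log.txt"
        code = "import sys; print('to stdout'); print('to stderr', file=sys.stderr)"
        proc = start_logged_process([sys.executable, "-c", code], log_path)
        proc.wait(timeout=10)
        print(log_path.read_text())
```

In the test, the call might look like `start_logged_process([str(llenv_python_cmd), "manage.py", "runserver", "8008"], runserver_log)`. Without a shell in between, the Popen object tracks the Python process running manage.py directly; any child processes it spawns, such as the autoreloader’s, still inherit its process group.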

Connecting to the server

After starting the server, we try to connect to it:

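```python
def test_django_project(...):
    ...
    # Wait until server is ready.
    url = "http://localhost:8008/"
    connected = False
    attempts, max_attempts = 0, 50
    while attempts < max_attempts:
        try:
            r = requests.get(url)
            if r.status_code == 200:
                connected = True
                break
        except requests.ConnectionError:
            pass
        # Count every failed attempt, including non-200 responses,
        # so the loop can't spin forever.
        attempts += 1
        sleep(0.2)
```

It’s likely that an immediate request to the server would fail, because the server needs some time to start up. We make a request every 0.2s, and bail after 50 attempts (about 10 seconds). Any failed attempt counts toward that limit, whether the connection is refused or the server responds with something other than a 200.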
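Here’s a variation on this loop as a reusable function. It’s a sketch using the standard library’s urllib instead of Requests, and it tracks a deadline with time.monotonic() rather than counting attempts, so slow responses count against the time limit too. The name wait_for_url() is hypothetical, and bypassing proxy settings is an assumption that suits a server on localhost.

```python
import time
import urllib.request


def wait_for_url(url: str, timeout: float = 10.0, interval: float = 0.2) -> bool:
    """Poll url until it answers with HTTP 200, or until timeout seconds pass."""
    # An empty ProxyHandler ignores any *_proxy environment variables,
    # since we only ever check a local development server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with opener.open(url, timeout=2) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Refused connections, timeouts, and URLError/HTTPError (raised
            # for 4xx and 5xx responses) are all OSError subclasses:
            # treat them as "not ready yet".
            pass
        time.sleep(interval)
    return False
```

In the test function, this would replace the loop with `connected = wait_for_url("http://localhost:8008/")`, followed by the same `assert connected`.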

Verifying the connection

After exiting the connection loop, we need to make sure we’re connected to the server process that was just started:

```python
def test_django_project(...):
    ...
    # Verify connection.
    assert connected

    # Verify connection was made to *this* server, not
    # a previous test run, or some other server on 8008.
    # Pause for log file to be written.
    sleep(1)
    log_text = runserver_log.read_text()
    assert "Error: That port is already in use" not in log_text
    assert "Watching for file changes with StatReloader" in log_text
    assert '"GET / HTTP/1.1" 200' in log_text
```

We assert that the call to requests.get() was successful, and the loop didn’t end by simply failing to connect. But that’s not enough; maybe there’s another server running on port 8008. Even if you don’t have an open project running on that port, a previous test run that didn’t terminate properly could still be active. We don’t want to run the current tests against resources from a previous test run!

To make sure we’re connected to the server process we just started, we make sure the “port already in use” error message is not in the server log. We pause before making this check, because it can take a moment for the log file to be written reliably. We also assert that the development server has given its usual message about watching for changes, and we make sure there’s an entry for the successful connection.

This check fails if the new server was unable to bind to port 8008. That means the overall test fails even if requests.get() successfully connects to some other server that happens to be active on port 8008. The only way to continue the test is if we successfully connected to a server listening on 8008, and we see a corresponding log entry in this server’s log.
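The fixed sleep(1) works, but another option is to poll the log until the expected lines appear, with an upper time limit. In this sketch, the hypothetical wait_for_log_text() returns whatever text it last read, so the existing assertions, including the check that "Error: That port is already in use" is absent, can still run against it and show the log contents when they fail.

```python
import time
from pathlib import Path


def wait_for_log_text(log_path: Path, expected: list[str],
                      timeout: float = 5.0, interval: float = 0.1) -> str:
    """Re-read log_path until every string in expected appears, or time runs out.

    Returns the last text read (possibly "" if the file never appeared),
    so callers can still make assertions about it after a timeout.
    """
    deadline = time.monotonic() + timeout
    text = ""
    while True:
        if log_path.exists():
            text = log_path.read_text()
            if all(s in text for s in expected):
                return text
        if time.monotonic() >= deadline:
            return text
        time.sleep(interval)
```

In the test, this could replace the `sleep(1)` and `read_text()` pair: `log_text = wait_for_log_text(runserver_log, ["Watching for file changes with StatReloader", '"GET / HTTP/1.1" 200'])`, followed by the same three assertions.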

When you run the test at this point, the server will continue running in the background because we’re not terminating it. That’s intentional, because I wanted to verify that this test will fail when another server is running, especially if it’s serving the data we’re looking for. We’ll add code to terminate the server in just a moment.

I’ll admit it took me a fair bit of trial and error, and reading, to come up with a reliable workflow here. What I’ve come up with so far gives me confidence in the results of this test.

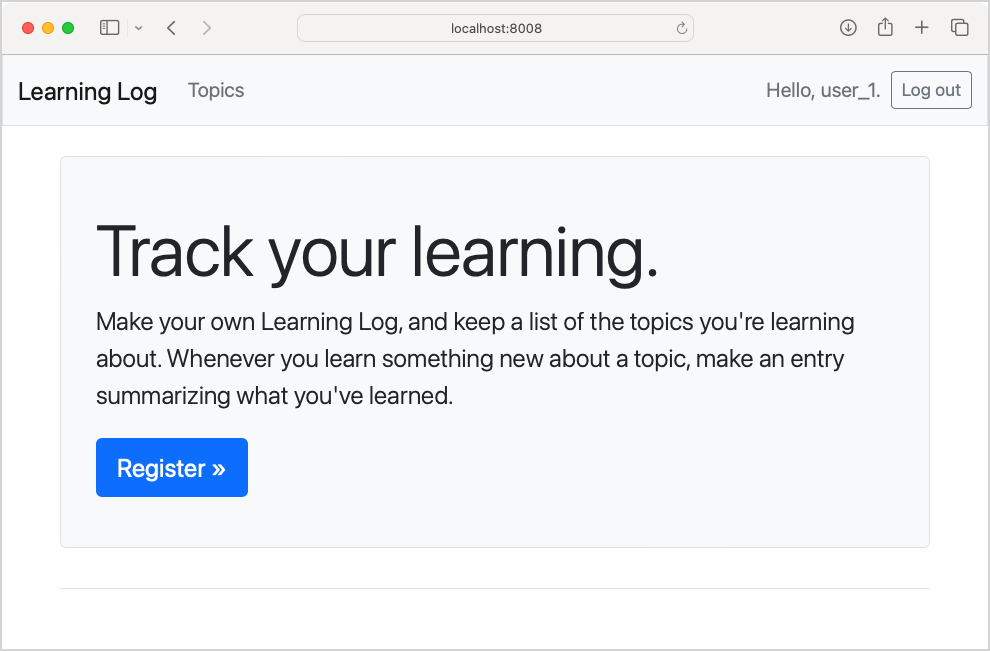

While the server is running, you can view the project in the browser. Now that we know the test suite can run the project, we’ll test the project and then terminate the server process.

Testing the running project

A few years ago I wrote a script to test this project after deploying it to a hosting service such as Platform.sh or Heroku. It uses Requests to make a series of calls that access a few anonymous pages, and then creates an account and enters a little data. The goal of this short test script is to make sure it’s the actual project that’s being served and not a generic placeholder page, and to make sure the database is accessible and functioning as well. I want the test script to exercise the project as a whole, not just verify that a few pages return 200 status codes.

Here’s a snippet of the functionality test script:

```python
print("\nTesting functionality of deployed app...")

# --- Anonymous home page ---
print(" Checking anonymous home page...")
# Note: app_url has a trailing slash.
app_url = sys.argv[1]
r = requests.get(app_url)
assert r.status_code == 200
assert "Track your learning." in r.text
assert "Log in" in r.text
...
```

This test turns out to be useful when validating a local version of the project as well. Instead of passing the script a remote URL, you just pass it the local URL where the project is running. In this case, that would be http://localhost:8008/.

This test script was one long series of requests and assertions, without any functions or class structure. It doesn’t look like well-structured code, but it was a clear mimic of the workflow that someone using the project might follow. To make it possible to run the script from the test suite, I indented the entire script and put it in a function called run_e2e_test().

The functionality test script is in the tests/resources/ folder. Now that the test function has started the development server, we can pass its URL to run_e2e_test(). Here’s the code to do that:

```python
def test_django_project(...):
    ...
    try:
        run_e2e_test("http://localhost:8008/")
    except AssertionError as e:
        raise e
    finally:
        # Terminate the development server process.
        # There will be several child processes,
        # so the process group needs to be terminated.
        print("\n***** Stopping server...")
        pgid = os.getpgid(server_process.pid)
        os.killpg(pgid, signal.SIGTERM)
        server_process.wait()

        # Print a message about the server status before exiting.
        if server_process.poll() is None:
            print("\n***** Server still running.")
            print("***** PID:", server_process.pid)
        else:
            print("\n***** Server process terminated.")
```

The function run_e2e_test() contains a bunch of assertions about the pages that are requested. If any of these assertions fail, the test function stops at that point, and none of the code that follows in it will run. But we need to terminate the server whether the e2e test is successful or not.

This means the call to run_e2e_test() must be placed in a try block. We catch the AssertionError that might be raised, and simply re-raise it. What we really care about is the finally block.
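A tiny standalone example makes the order of events clear: the finally block runs first, and then the AssertionError keeps propagating, which is what lets pytest still report the failure. The function names here are made up for illustration.

```python
def failing_check():
    """Stands in for run_e2e_test() hitting a broken page."""
    assert "Log in to your account." in "Loooooog in to your account."


def run_check(check, events):
    """Run check(), and record cleanup in events whether or not it fails."""
    try:
        check()
    finally:
        events.append("cleanup")  # stands in for stopping the server


events = []
try:
    run_check(failing_check, events)
except AssertionError:
    events.append("assertion propagated")
print(events)  # ['cleanup', 'assertion propagated']
```

Because the exception propagates either way, `except AssertionError as e: raise e` behaves essentially the same as leaving the except clause out; a bare try/finally would also do the job.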

If you haven’t used a finally block before, it runs whether or not an exception was raised in the try block. It’s typically used for cleanup work that must be done in all cases. In this case we need to terminate the server process. The code here identifies the group of processes that were spawned to run the server, and sends a termination signal to all of them. To help make sure cleanup was done properly, we poll the server process to see if it’s still active. If it is, we print the process ID. This works on macOS; I’m going to deal with Windows a little later.
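One refinement to consider: server_process.wait() with no timeout blocks indefinitely if the process never exits, which would hang the test run. Here’s a POSIX-only sketch, not the post’s code, that waits with a timeout and escalates to SIGKILL. It assumes the process was started with start_new_session=True, so its PID is also its process-group ID. The name stop_process_group() is hypothetical.

```python
import os
import signal
import subprocess
import sys


def stop_process_group(proc: subprocess.Popen, timeout: float = 5.0) -> int:
    """Terminate proc's whole process group and return proc's exit code.

    POSIX only. Assumes proc was started with start_new_session=True.
    """
    pgid = os.getpgid(proc.pid)  # look this up before the leader exits
    os.killpg(pgid, signal.SIGTERM)
    try:
        return proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        # Something ignored SIGTERM; force the whole group down.
        os.killpg(pgid, signal.SIGKILL)
        return proc.wait()


if __name__ == "__main__":
    proc = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(60)"],
        start_new_session=True,
    )
    print(stop_process_group(proc))  # a negative return code means "killed by that signal"
```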

user_1 is logged in. This proves that the project works, but it also means it’s easy to jump into a working version of the project. This is useful in troubleshooting, and even in ongoing development work.

Testing your test code

There’s a lot going on in this test! It’s a test that does a bunch of setup work, and then calls other testing code. Before moving on, I want to know that the test is reliable. Will it pass meaningfully when the Learning Log project is working? Will it fail reliably when one of the e2e tests fails? Will the server reliably stop in all these cases?

I ran this code many times, in many ways, intentionally putting errors into the project when I wanted to see what a failure looks like. At some points in development, an error in the Learning Log project would cause an assertion in the e2e test to fail, but the overall test suite would still pass. That’s not uncommon in testing, and it’s really important to catch that before starting to trust the results of a test suite.

Here’s what a passing test looks like at this point:

```
$ pytest tests/test_django_project.py -qs

Testing functionality of deployed app...
Checking anonymous home page...
Checking that anonymous topics page redirects to login...
Checking that anonymous register page is available...
Checking that anonymous login page is available...
Checking that a user account can be made...
Checking that a new topic can be created...
Checking topics page as logged-in user...
Checking blank new_topic page as logged-in user...
Submitting post request for a new topic...
Checking topic page for topic that was just created...
Checking that a new entry can be made...
Checking blank new entry page...
Submitting post request for new entry...
All tested functionality works.

***** Stopping server...

***** Server process terminated.
.
1 passed in 10.00s
```

We can see from this output exactly what e2e tests were run against the project. A number of anonymous pages are rendering correctly. A user account can be created, and some pages look correct for a logged-in user. The user can create some data, and then see that data after it’s been saved to the database. We can also see that the server process appears to have been terminated.

To check whether a mistake in the project causes an appropriate failure, I wanted one of the assertions in the middle of the e2e tests to fail. I entered a typo in the login page template:

```html
{% block page_header %}
  <h2>Loooooog in to your account.</h2>
{% endblock page_header %}
```

Instead of Log in to your account, the page will read Loooooog in to your account.

Here are the results of a test run with this error in place:

```
$ pytest tests/test_django_project.py -qs

Testing functionality of deployed app...
Checking anonymous home page...
Checking that anonymous topics page redirects to login...

***** Stopping server...

***** Server process terminated.
F
    def run_e2e_test(app_url):
        ...
        # --- Anonymous topics page ---
        print(" Checking that anonymous topics page...")
        ...
        assert r.status_code == 200
>       assert "Log in to your account." in r.text
E       AssertionError

1 failed in 8.49s
```

This was an interesting failure to check. I expected the test for the anonymous login page to fail, but I had forgotten that requesting some pages while logged out redirects to the login page. This is perfect; an error in the project leads to a test failure that gives insight into what’s wrong with the current state of the project.

Testing different versions of Django

As with the other libraries used in the book, I want to be able to run the Learning Log test against different versions of Django. That’s not quite as simple as it was with each of the other libraries, because a Django project typically has its own environment.

A common need for me in testing this project is to unpin all requirements, and just install the latest version of every requirement. Here’s the project’s requirements.txt file as it currently stands in the public repository:

```
asgiref==3.5.2
beautifulsoup4==4.11.1
Django==4.1b1
django-bootstrap5==21.3
platformshconfig==2.4.0
soupsieve==2.3.2.post1
sqlparse==0.4.2
```

These are the versions that were current at the time I wrote the book, but I don’t tell people to install these specific versions. Instead I’ve written the book so that every reader installs the latest version at the time they’re working through the book. They run commands like pip install django, and then end up with whatever version is current at the time.

So, when I’m testing I often want to unpin the main requirements, and install exactly what a reader working through the book today would install. I also want to be able to test against specific versions. To support these testing needs, here are the kinds of test commands I want to be able to run:

```
$ pytest tests/test_django_project.py --django-version unpinned
$ pytest tests/test_django_project.py --django-version 4.2.6
$ pytest tests/test_django_project.py --django-version 4.1.12
$ pytest tests/test_django_project.py --django-version 5.0a1
```

I’ll focus on the unpinned version first, because that’s needed for the others as well.

I’ve added the CLI arg in conftest.py. Here’s the section of test_django_project() that unpins the requirements:
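The post doesn’t show that part of conftest.py. A minimal sketch using pytest’s pytest_addoption hook might look like this; the help text and the default of None are assumptions, chosen so that `request.config.getoption("--django-version")` returns None when the option isn’t given.

```python
def pytest_addoption(parser):
    """Register --django-version (a sketch of what conftest.py might contain).

    Accepts "unpinned" or a specific version such as "4.2.6". Stays None
    when the option isn't used, so the pinned requirements are installed.
    """
    parser.addoption(
        "--django-version",
        action="store",
        default=None,
        help='Django version to test against, or "unpinned" for the latest.',
    )
```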

```python
def test_django_project(request, tmp_path, python_cmd):
    ...
    # All remaining work needs to be done in dest_dir.
    os.chdir(dest_dir)

    # Unpin requirements if appropriate.
    django_version = request.config.getoption("--django-version")
    if django_version is not None:
        print("\n***** Unpinning versions from requirements.txt")
        req_path = dest_dir / "requirements.txt"
        contents = "Django\ndjango-bootstrap5\nplatformshconfig\n"
        req_path.write_text(contents)

    # Build a fresh venv for the project.
    ...
```

Notice that these changes are not at the end of the test function. This code needs to be placed immediately after the call to os.chdir(): after the files are copied to the temp directory, but before the new virtual environment is created. We add request to the list of parameters, so we can check whether --django-version was set. We call request.config.getoption(), which returns None, "unpinned", or a specific version number.

We’re going to unpin the requirements any time the --django-version option has been used. To unpin the versions, we simply rewrite the requirements.txt file, naming only the three main dependencies: Django, django-bootstrap5, and platformshconfig. All other entries in requirements.txt are dependencies of these three libraries.

This is enough to run the unpinned test. However, I’d like to show a summary of what was used for testing. Here’s the code I added to the end of test_django_project() to provide a helpful summary:

```python
def test_django_project(...):
    ...
    # Show what versions of Python and Django were used.
    cmd = f"{llenv_python_cmd} -m pip freeze"
    output = utils.run_command(cmd)
    lines = output.splitlines()
    django_version = [l for l in lines if "Django" in l][0]
    django_version = django_version.replace("==", " ")

    cmd = f"{llenv_python_cmd} --version"
    python_version = utils.run_command(cmd)

    msg = "\n***** Tested Learning Log project using:"
    msg += f"\n***** {python_version}"
    msg += f"\n***** {django_version}"
    print(msg)
```

We call pip freeze, and get the version of Django that was actually installed to the virtual environment in the temp directory. This is better than just reading from a requirements file. This verifies what version was actually used for running the test, rather than just repeating what was supposed to be installed.

The test suite already shows the version of Python that was used, but since we created a new virtual environment just for this test, it’s reassuring to call python --version using the interpreter from that virtual environment in the temp directory. This can help make sure we’re not accidentally creating the temporary virtual environment using the system Python, for example.

Here’s the output from running the test against an unpinned set of requirements:

```
$ pytest tests/test_django_project.py -qs --django-version unpinned

***** Unpinning versions from requirements.txt

Testing functionality of deployed app...
Checking anonymous home page...
...
All tested functionality works.

***** Stopping server...

***** Server process terminated.

***** Tested Learning Log project using:
***** Python 3.11.5
***** Django 5.0.2
.
1 passed in 10.81s
```

This is really clear output. I can be confident that the Learning Log project works with the latest versions of all requirements.

Testing specific versions

With that work done, testing specific versions only involves modifying the Django version when we write the new requirements file. That’s a small change to the block that unpins requirements:

```python
def test_django_project(...):
    ...
    if django_version is not None:
        print("\n***** Unpinning versions from requirements.txt")
        req_path = dest_dir / "requirements.txt"
        contents = "Django\ndjango-bootstrap5\nplatformshconfig\n"
        if django_version != "unpinned":
            django_req = f"Django=={django_version}"
            contents = contents.replace("Django", django_req)
        req_path.write_text(contents)

    # Build a fresh venv for the project.
```

In this block, if django_version is anything other than "unpinned", we add the version specification to the entry for Django.
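The unpinning logic can also live in a small pure function, which makes it easy to test on its own, separately from writing files. This is a hypothetical sketch (requirements_text() and MAIN_REQUIREMENTS are made-up names), not the post’s code. It builds the file line by line, so pinning Django only touches the Django entry rather than relying on str.replace() matching exactly one substring.

```python
from typing import Optional

# The three main dependencies named in the post.
MAIN_REQUIREMENTS = ["Django", "django-bootstrap5", "platformshconfig"]


def requirements_text(django_version: Optional[str]) -> Optional[str]:
    """Return new requirements.txt contents, or None to keep the pinned file.

    None: --django-version wasn't used; keep the pinned requirements.
    "unpinned": list the main requirements without versions.
    Anything else: pin Django to that version; leave the others unpinned.
    """
    if django_version is None:
        return None
    lines = []
    for name in MAIN_REQUIREMENTS:
        if name == "Django" and django_version != "unpinned":
            lines.append(f"Django=={django_version}")
        else:
            lines.append(name)
    return "\n".join(lines) + "\n"


print(repr(requirements_text("4.2.10")))
```

The test function would then write the result only when it isn’t None.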

Now we can test any version:

```
$ pytest tests/test_django_project.py -qs --django-version 4.2.10
...
***** Tested Learning Log project using:
***** Python 3.11.5
***** Django 4.2.10
.
1 passed in 8.82s
```

This is especially helpful for running tests against release candidates.

Running on Windows

A test that creates a new virtual environment on the fly, runs the project with that environment’s interpreter, starts a server in a subprocess, runs some tests, and then terminates the spawned processes is quite likely to need some work to run on multiple OSes.

I won’t walk through the whole process here, but my approach to making this test work on Windows was to refactor the test function more than I would normally refactor a test. This test fails more often than most, because it’s really a series of smaller tests. There are many reasons for structuring this as one long test. It’s hard to make individual tests run in a specific order, and there’s a lot of setup work I don’t want to repeat. Most importantly, failures with this approach give me exactly the information I want, as a result of following the exact workflow that readers are likely to follow.

I’m refactoring this test because when it fails there’s a lot of distracting output. There are also some blocks that would be nice to tuck away and focus on in isolation, such as handling the server process on different OSes.

Here’s the refactored test function:

```python
def test_django_project(request, tmp_path, python_cmd):
    """Test the Learning Log project."""
    # Copy project to temp dir.
    dest_dir = tmp_path / "learning_log"
    copy_to_temp_dir(dest_dir)

    # All remaining work needs to be done in dest_dir.
    os.chdir(dest_dir)

    # Process --django-version CLI arg.
    modify_requirements(request, dest_dir)

    # Build a fresh venv for the project.
    llenv_python_cmd = build_venv(python_cmd, dest_dir)

    migrate_project(llenv_python_cmd)
    check_project(llenv_python_cmd)
    run_e2e_tests(dest_dir, llenv_python_cmd)

    # Show what versions of Python and Django were used.
    show_versions(llenv_python_cmd)
```

This test function is now refactored into a series of helper functions that set up the project, and then run the e2e tests. Test functions aren’t normally factored to this degree, because when tests fail it’s often helpful to have as much context as possible all in one place. When you’re diagnosing a failed test it’s often easier to look through longer chunks of procedural code to see what went wrong, rather than having to go visit a number of helper functions and remind yourself of the overall context.

In this case, however, the test function is long and complex enough that it’s more maintainable after being broken up into this set of helper functions.

Here’s the function run_e2e_tests(), with comments removed:

```python
def run_e2e_tests(dest_dir, llenv_python_cmd):
    log_path = dest_dir / "runserver_log.txt"
    server_process = start_server(llenv_python_cmd, log_path)
    check_server_ready(log_path)

    try:
        e2e_test("http://localhost:8008/")
    except AssertionError as e:
        raise e
    finally:
        stop_server(server_process)
```

What we’re most interested in here is the stop_server() function, because that’s what’s different on Windows.

Here’s stop_server():

```python
def stop_server(server_process):
    if platform.system() == "Windows":
        stop_server_win(server_process)
    else:
        ...
```

If we’re on Windows, we call a special stop_server_win() function.

Here’s that code:

```python
def stop_server_win(server_process):
    """Stop server processes on Windows.

    Get the main process, then all children and grandchildren,
    and terminate all processes.

    See running processes: > tasklist
    See info about specific process: > tasklist /fi "pid eq <pid>"
    Kill task: > taskkill /PID <pid> /F
    """
    main_proc = psutil.Process(server_process.pid)
    child_procs = main_proc.children(recursive=True)
    for proc in child_procs:
        proc.terminate()
    main_proc.terminate()
```

On Windows, we use the psutil library to get all the child processes that have been spawned from server_process. We then terminate each of those processes, and finally terminate the main process. Note that if you’re running this code, you’ll need to install psutil with pip, and update the requirements.txt file.

When tests end up with critical platform-specific code, refactoring to the point where that code is in its own function can be quite helpful.

As I continue to use this test suite, I’ll probably test whether this approach can be used on macOS as well. If it can, I’ll probably pull out all the platform-specific work here and just use the psutil approach on all systems. I’m in no rush to do that, however, because I’ve run the existing code on macOS many times already, and I don’t want to lose my confidence in that code quite yet.

Conclusions

Setting this test up to run reliably on both macOS and Windows was a lot of work, but it makes maintaining this project much easier every time a new version of Python or Django comes out. It also makes it easier to support readers who run into issues with the project. I can run this test against the version of Django the reader is using, go to the temp directory that was generated, and have a tested version of the project that matches what the reader should have.

It’s worth repeating that a test suite doesn’t have to just verify that code is working correctly against specific versions of a project’s dependencies. It can also generate artifacts that help you understand your project’s behavior in a variety of situations. Make sure your test suite meets your overall testing needs, rather than just generating a pass/fail against a specific set of library versions.

Resources

You can find the code files from this post in the mp_testing_pcc_3e GitHub repository.

[1] When Python Crash Course first came out in 2015, there was less consistency in the names people used for virtual environments. I had a convention at the time of using my project’s initials, followed by _env. So for a project called Learning Log, that was ll_env. I kept using it because a lot of people aren’t sure how to find hidden files and directories, like .venv, when they’re first learning to manage a virtual environment.

These days there’s a much stronger convention to call virtual environments .venv, so I’ll adopt that convention if Python Crash Course makes it to a fourth edition.

[2] If you haven’t heard of stdout and stderr before, they refer to the kinds of output you typically see in a terminal environment. Most CLI-based programs write informational and non-error output to stdout, and error-based output to stderr. One thing this allows is for error-related output to be presented in a different color. It also allows an output stream to be directed to different kinds of log files.