Testing a book's code, part 2: Basic scripts

MP #77: Using parametrization to efficiently write a large batch of tests.

Note: This is the second post in a seven-part series about testing.

In the previous post, I laid out an overall plan for testing all the code in the book. In this post, we’ll put that plan into action. We’ll make a fork of the book’s repository, and build a test suite in the new repository. We’ll do this in a way that allows us to pull in changes from the original repository, without any conflicts with the testing code.

We’ll also use parametrization to write a set of tests that cover all the basic scripts in the first half of the book. These tests will run each script that should be tested, and make sure the output is what we expect it to be.

You can follow along and run the code yourself, if you’re interested in doing so.

Fork the original repository

Making a private fork of a public repository is not as straightforward as it sounds. GitHub doesn’t automate the process. I believe that’s partially for technical reasons, and partially to encourage public forks. The best resources I found were this gist and this official post about forking.

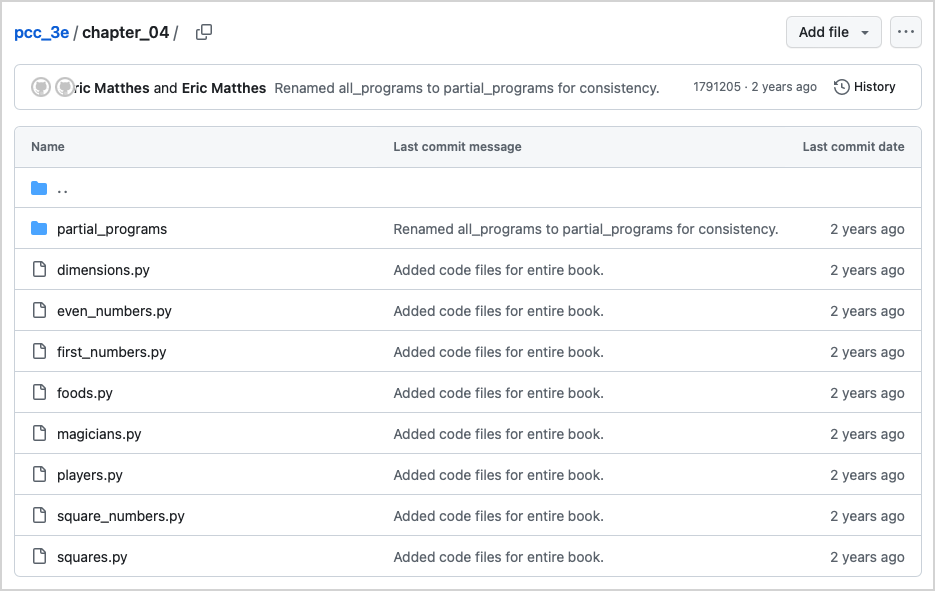

I’m going to set up a public fork for this post, called mp_testing_pcc_3e. You can’t make a direct fork of your own repository; I ended up cloning the original pcc_3e repo, and pushing it to a new repo. If you want to follow along, you can simply make a fork of the original pcc_3e repository.

Testing basic programs

We’ll start by testing the basic programs in the book. These are programs that don’t use any third party libraries. They don’t write or modify any files, and they don’t accept user input. All we need to do is run them, and validate the output.

We’ll start by testing the first program in the book, hello_world.py. Just as a typical Hello World program demonstrates that your environment is set up correctly, this first test will show that our testing infrastructure is set up correctly and that a simple test can pass.[1]

Make a virtual environment, activate it, and install pytest:

    $ python -m venv .venv
    $ source .venv/bin/activate
    (.venv)$ pip install --upgrade pip
    ...
    Successfully installed pip-23.3.2
    (.venv)$ pip install pytest
    ...
    Successfully installed ... pytest-7.4.4

Make a tests/ directory, and in that directory make a new file called test_basic_programs.py. Here’s the first test:

    import subprocess, sys
    from pathlib import Path
    from shlex import split

    def test_basic_program():
        """Test a program that only prints output."""
        root_dir = Path(__file__).parents[1]
        path = root_dir / "chapter_01" / "hello_world.py"

        # Use the venv python.
        python_cmd = f"{sys.prefix}/bin/python"
        cmd = f"{python_cmd} {path}"

        # Run the command, and make assertions.
        cmd_parts = split(cmd)
        result = subprocess.run(cmd_parts,
            capture_output=True, text=True, check=True)
        output = result.stdout.strip()

        assert output == "Hello Python world!"

This isn’t the friendliest first test to see if you’re new to testing, but it does address the issue of many testing tutorials being overly simplistic. You don’t have to understand everything you see in this test function. Feel free to skim the following explanation of the test function, and then focus on making sense of everything that follows.

The test function has a generic name, because I’m planning to use this one function to run tests for all the basic programs. To build a path to hello_world.py we first look at the location of the current file, and then get the second element of the parents attribute. This should be the root directory of the overall repository. We can then define the path to hello_world.py.
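If the parents indexing is unfamiliar, here’s a small sketch of how it resolves. The /repo location is a made-up path for illustration; the real value depends on where you cloned the repository.

```python
from pathlib import PurePosixPath

# Hypothetical location of the test file, assuming a checkout at /repo.
test_file = PurePosixPath("/repo/tests/test_basic_programs.py")

# parents[0] is the directory containing the file; parents[1] is one level up.
tests_dir = test_file.parents[0]
root_dir = test_file.parents[1]

hello_path = root_dir / "chapter_01" / "hello_world.py"

print(tests_dir)   # /repo/tests
print(root_dir)    # /repo
print(hello_path)  # /repo/chapter_01/hello_world.py
```

The index is zero-based, which is why the "second element" is written as parents[1].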

To run hello_world.py for testing purposes, we want to use the Python interpreter from the active virtual environment. This will allow us to define a number of virtual environments, each with a different version of Python, and use any one of those to run tests. The command shown here doesn’t work on Windows, but we’ll fix that shortly.

The command we want to test is this one:

    $ python hello_world.py

That command is built from the string f"{python_cmd} {path}". We need to split this command into its parts before calling it with subprocess.run(). Finally, we assert that the output is what we expect it to be.
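To make the split-then-run step a little more concrete, here’s a minimal sketch you can run outside the test suite. It assumes sys.executable as a stand-in for the venv interpreter, and it runs a one-line program instead of hello_world.py so it doesn’t depend on the repository layout.

```python
import subprocess
import sys
from shlex import split

# split() turns a command string into the list form subprocess.run() expects.
parts = split("python chapter_01/hello_world.py")
print(parts)  # ['python', 'chapter_01/hello_world.py']

# Assumption: sys.executable stands in for the venv interpreter path.
cmd_parts = [sys.executable, "-c", "print('Hello Python world!')"]

# capture_output collects stdout, text=True decodes it to str, and
# check=True raises CalledProcessError if the program exits with an error.
result = subprocess.run(cmd_parts, capture_output=True, text=True, check=True)
output = result.stdout.strip()

print(output)  # Hello Python world!
```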

This test passes:

    (.venv)$ pytest tests/test_basic_programs.py -q
    . [100%]
    1 passed in 0.01s

It’s really good to make sure your first test passes before writing more tests. This shows that the first test passes, but also that the testing infrastructure is set up correctly. Note that I’m using the -q flag here to save space; if you’re following along it’s more informative to omit that flag.

Also note that calling pytest without any arguments will fail at this point. If you try it, pytest will discover the example tests included in the book, and attempt to run them. We’ll address that issue in a moment.

Parametrizing tests

I know I want to run this same kind of test a bunch of times, for a bunch of different programs. If this first test runs using parametrization, we can add as many tests as we want, without modifying the actual test function.

Here’s the first test, parametrized:

    import subprocess, sys
    ...

    import pytest

    basic_programs = [
        ("chapter_01/hello_world.py", "Hello Python world!"),
    ]

    @pytest.mark.parametrize(
        "file_path, expected_output", basic_programs)
    def test_basic_program(file_path, expected_output):
        """Test a program that only prints output."""
        root_dir = Path(__file__).parents[1]
        path = root_dir / file_path

        # Use the venv python.
        ...

        # Run the command, and make assertions.
        ...
        assert output == expected_output

To parametrize tests we make a list that stores the data that should be passed to the test function. In this case, I’m calling that list basic_programs. Each entry is a tuple containing the path to the file that needs to be tested, and the output that’s expected when that file is run.

The @pytest.mark.parametrize() decorator feeds data into a test function. You provide a name (or names) for the data, and a source of the data. Here we’re passing in the list basic_programs. pytest will feed one item at a time from that list into the test function. Right now basic_programs consists of one tuple, containing a file path and an output string. The items in this tuple are unpacked to the two names we provided: file_path, and expected_output. In this example, file_path will be the path to hello_world.py, and expected_output will be the string Hello Python world!

Inside the function, we only need to do two things: replace the hardcoded path to hello_world.py with the variable file_path, and replace the hardcoded output “Hello Python world!” with the variable expected_output. This test passes, with the same output as shown previously.

This can seem like a lot of complexity if you haven’t used parametrization before. But you’re about to see how much simpler it is to add more tests with this approach.
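If it helps, here’s a plain-Python sketch of the idea behind the decorator. This is only a conceptual model, not how pytest is implemented: check_program() is a hypothetical stand-in for the test function, and it records its arguments instead of running a file.

```python
# Conceptual sketch only; pytest does much more than this loop.
basic_programs = [
    ("chapter_01/hello_world.py", "Hello Python world!"),
    ("chapter_02/comment.py", "Hello Python people!"),
]

def check_program(file_path, expected_output):
    """Hypothetical stand-in for the test body: just record the arguments."""
    return f"{file_path} -> {expected_output}"

# One call per entry; each tuple is unpacked into the two named arguments.
calls = [
    check_program(file_path, expected_output)
    for file_path, expected_output in basic_programs
]

for call in calls:
    print(call)
```

The main thing the loop doesn’t capture is reporting: pytest treats each entry as a separate test, so one failing entry doesn’t stop the others from running.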

Can a test fail?

Before writing more tests, it’s a good idea to make sure your first test fails when you expect it to. I’m going to change the value of expected_output, and make sure the test fails:

    basic_programs = [
        ("chapter_01/hello_world.py", "Goodbye Python world!"),
    ]

I changed Hello to Goodbye, ran the test, and it failed. That’s a good thing! Sometimes a test isn’t really running the way you think it is, and a change like this will still pass. If that happens, it’s much easier to troubleshoot now than later.

Make sure you undo the change that caused the failure, and run your test one more time to make sure it passes again. It’s so easy to introduce a bug that’s difficult to sort out if you don’t run these quick checks while building a test suite.[2]

Testing more basic programs

Now we get to see the benefit of parametrization. We have a test that should work for any basic Python program that simply prints output, as long as we give it the path to the program and the expected output. Let’s start by adding two more tests, and make sure they run (and pass):

    basic_programs = [
        # Chapter 1
        ('chapter_01/hello_world.py', 'Hello Python world!'),

        # Chapter 2
        ('chapter_02/apostrophe.py', "One of...community."),
        ('chapter_02/comment.py', "Hello Python people!"),
    ]

These three tests pass:

    $ pytest tests/test_basic_programs.py -q
    ... [100%]
    3 passed in 0.03s

That’s very satisfying to see; we’ve tripled the number of tests, without adding any new test functions!

Testing all basic programs

At this point, creating more tests is as simple as adding new entries to the list basic_programs. I opened a terminal, cd’d into each chapter directory, and ran the programs I wanted to test.

I use GPT almost every day now, and this was a perfect example of why. Many of the basic programs in the book generate multiple lines of output. For programs like this, I wanted the multiline output compressed to single lines. For example this output:

    Hello Python world!
    Hello Python Crash Course world!

should be converted to:

    Hello Python world!\nHello Python Crash Course world!

For two lines this isn’t a big deal, but for many programs with 5-10 lines that gets tedious. That’s the kind of thing AI assistants are great at, so I gave GPT a prompt telling it I’d be feeding in multiple lines, and asked it to convert those lines to the equivalent single-line Python string. I could write a program to do this, but it was much easier to just feed the output into GPT. It didn’t take too long to have tests for all 47 basic programs in the first half of the book:

    (.venv)$ pytest tests/test_basic_programs.py -q
    ............................................... [100%]
    47 passed in 0.49s

We’ve only written one test function, but we’ve got 47 tests now! This is a pretty good start. Before writing more test functions, let’s pull anything out of the existing test function that’s going to be used in other tests.
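For anyone who would rather script the multiline-to-single-line conversion described above than use an AI assistant, here’s one possible sketch. It isn’t what I used for this post; to_single_line() is a hypothetical helper name, and it leans on repr() to produce a valid Python string literal.

```python
def to_single_line(output):
    """Return a single-line Python string literal for multiline output."""
    # strip() mirrors the cleanup the test applies to captured stdout.
    return repr(output.strip())

sample = "Hello Python world!\nHello Python Crash Course world!\n"
literal = to_single_line(sample)

print(literal)  # 'Hello Python world!\nHello Python Crash Course world!'
```

The printed literal can be pasted directly into an entry in basic_programs. Note that repr() picks the quote style for you, so output containing an apostrophe comes back wrapped in double quotes.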

Getting python_cmd from a fixture

Almost every test is going to use the value for python_cmd that’s set in test_basic_program(). Let’s pull that out into a fixture, so it can be used by any test that needs it.[3]

You can place pytest configuration files in any directory in your test suite. Like a lot of things in the testing world this can be confusing at first, but it lets you structure an increasingly complex test suite in an organized way.

Make a file called conftest.py, in the tests/ directory:

    import sys

    import pytest

    @pytest.fixture(scope="session")
    def python_cmd():
        """Return path to the venv Python interpreter."""
        return f"{sys.prefix}/bin/python"

Fixtures are designed to make it easier to set up and share resources that are needed by more than one test function. Fixtures can be created once per session, module, class, or function.

Here we define a function called python_cmd(), that’s run once for the entire test session. The return value is the path to the Python interpreter for the current active virtual environment. Any test function can use this value by including the name python_cmd in its list of arguments.

Here’s what test_basic_program() should look like now:

    @pytest.mark.parametrize(...)
    def test_basic_program(python_cmd, file_path, expected_output):
        """Test a program that only prints output."""
        root_dir = Path(__file__).parents[1]
        path = root_dir / file_path

        # Run the command, and make assertions.
        cmd = f"{python_cmd} {path}"
        cmd_parts = split(cmd)
        ...

The main change here is the addition of the python_cmd argument in the definition of test_basic_program(). When pytest finds an argument name that matches the name of a fixture function, it runs that function and passes the return value to the argument.

In this case pytest sees the python_cmd argument, and recognizes that there’s a fixture function in conftest.py with that same name. It runs that function and assigns the return value, which is the path to the virtual environment’s Python interpreter, to the parameter python_cmd. Note that since the fixture function has a session scope, pytest only runs the function once. It will pass the return value to any function that has python_cmd as one of its arguments, without running the fixture function again.

In the body of the function, we got rid of the code that defined python_cmd, and reorganized the other lines slightly. All the tests still pass.

Pulling out some utils

Let’s also start a utils file, to gather code that doesn’t need to be a fixture, but will be used by multiple tests.

Make a file in the tests/ directory called utils.py. The first thing we’ll put there is a function to run a command:

    from shlex import split
    import subprocess

    def run_command(cmd):
        """Run a command, and return the output."""
        cmd_parts = split(cmd)
        result = subprocess.run(cmd_parts,
            capture_output=True, text=True, check=True)
        return result.stdout.strip()

This function takes in a command, splits it into parts, and runs it by calling subprocess.run(). It returns a cleaned-up version of whatever was printed to stdout, which is where the output of print() calls is sent. This will make it easier to run a variety of programs, without having subprocess.run() calls in every test function.
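Here’s a quick sketch of how run_command() behaves on success and on failure. The commands are throwaway one-liners rather than programs from the book, and shlex.join() is used only to build command strings that survive the split() call on any OS; that part is my assumption, not something the test suite does.

```python
import subprocess
import sys
from shlex import join, split

def run_command(cmd):
    """Run a command, and return the output."""
    cmd_parts = split(cmd)
    result = subprocess.run(cmd_parts,
        capture_output=True, text=True, check=True)
    return result.stdout.strip()

# A command that succeeds: the stripped stdout comes back as a string.
ok_cmd = join([sys.executable, "-c", "print('hi')"])
ok_output = run_command(ok_cmd)
print(ok_output)  # hi

# A command that exits with an error: check=True turns that into an exception.
bad_cmd = join([sys.executable, "-c", "raise SystemExit(3)"])
failed_code = None
try:
    run_command(bad_cmd)
except subprocess.CalledProcessError as e:
    failed_code = e.returncode

print(failed_code)  # 3
```

Because of check=True, a book program that crashes shows up as a test error with a traceback, rather than as a confusing mismatch against empty output.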

Here are the changes to test_basic_programs.py:

    ...
    import pytest

    import utils

    basic_programs = [
        ...
    ]

    @pytest.mark.parametrize(...)
    def test_basic_program(...):
        """Test a program that only prints output."""
        root_dir = Path(__file__).parents[1]
        path = root_dir / file_path

        # Run the command, and make assertions.
        cmd = f"{python_cmd} {path}"
        output = utils.run_command(cmd)

        assert output == expected_output

Here we’re importing the new utils module, and calling utils.run_command(). We’ve also removed the code that was replaced by the new utility function.

The tests all still pass, and we have a test function that’s much more readable than what we started with. More importantly, it will be easier to write more test functions that build on this one.

Ignoring example tests

I mentioned earlier that you can have multiple pytest configuration files, that control the behavior of different aspects of the test suite. We can simplify the command for running the test suite by ignoring the tests in the repository that are examples from the book.

In the root directory of the repository, make a new file called conftest.py. (Make sure you don’t overwrite the conftest.py file that’s already in the tests/ directory.)

In this new file, add the following:

    """Root pytest configuration file."""

    # Ignore example tests from the book.
    collect_ignore = [
        "chapter_07",
        "chapter_11",
        "solution_files",
    ]

When you have multiple conftest.py files, it’s helpful to add a module-level docstring that makes it clear which one you’re working with.

When you run pytest it scans your entire repository, looking for modules and functions associated with tests. This is called collecting tests. If pytest finds a list called collect_ignore in any conftest.py file, it will avoid collecting tests from the specified directories.

With this configuration file in place, we no longer need to tell pytest where the tests we want to run are. We can just use the bare pytest command:

    (.venv)$ pytest -q
    ............................................... [100%]
    47 passed in 0.58s

This is much nicer for running tests, and also less prone to typos.

Running on Windows

It’s a good time to make these tests work on Windows, because the only thing that should be different is the code that builds python_cmd. We should be able to add some conditional code to the python_cmd() fixture that returns the correct path on all systems. This is another benefit of having fixtures and utility functions; as the test suite grows in complexity, that complexity doesn’t overwhelm the overall structure of the test suite.

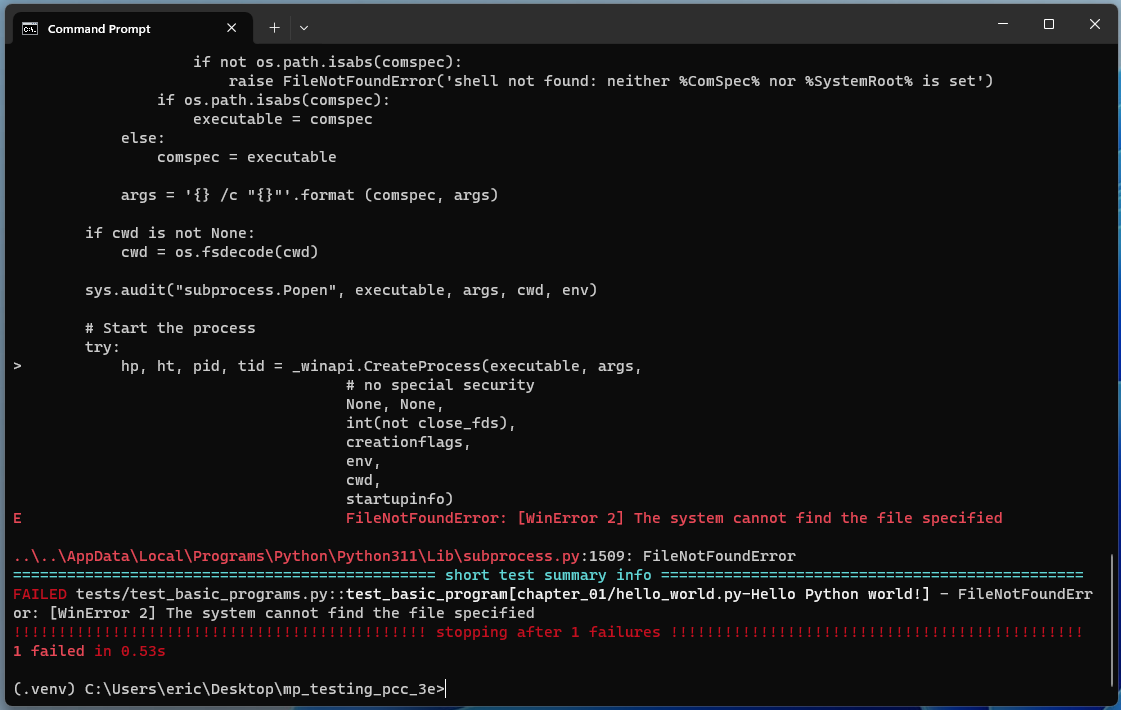

I’m approaching this by starting a Windows VM, cloning the test repository, and running the tests as they’re currently written. I’m expecting them to fail, and that’s exactly what happens:

    (.venv)> pytest -q
    FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF
    47 failed in 4.64s

When you have a bunch of failures like this, it’s helpful to use the -x flag when calling pytest. This makes pytest stop after the first failed test, so you can focus on fixing one failure at a time. When most or all of the tests fail, fixing one failure often clears up most or all of the other failures.

pytest -qx on Windows. The tests failed, as expected. If you’re not sure how to approach this, pasting this entire error message into GPT probably won’t fix everything, but it should point you in the right direction.

Looking through the failure output closely, which I won’t reproduce here, shows that the issue is coming from the run_command() function. But the root issue is actually in the python_cmd() fixture function.

Virtual environments are structured slightly differently on Windows than on macOS and Linux. Instead of bin/python, the path to the Python interpreter on Windows is Scripts/python.exe.

Here’s the update to python_cmd(), in tests/conftest.py:

    import sys
    from pathlib import Path

    import pytest

    @pytest.fixture(scope="session")
    def python_cmd():
        """Return the path to the venv Python interpreter."""
        if sys.platform == "win32":
            python_cmd = Path(sys.prefix) / "Scripts/python.exe"
        else:
            python_cmd = Path(sys.prefix) / "bin/python"

        return python_cmd.as_posix()

Strings don’t work well as paths across different OSes, which is why pathlib was developed. The as_posix() method returns a string that can be interpreted correctly on all systems in the way we’re using it, particularly in subprocess.run() calls. Here we define python_cmd as a path to the interpreter on each OS, and then return the as_posix() version of the appropriate path.

There’s a similar change that needs to be made to test_basic_program() as well:

    @pytest.mark.parametrize(...)
    def test_basic_program(...):
        ...

        # Run the command, and make assertions.
        cmd = f"{python_cmd} {path.as_posix()}"
        output = utils.run_command(cmd)

        assert output == expected_output

When we write commands, we need to use the posix version of paths. We’ve already done that for python_cmd, but we need to do that for the path to the file we’re running as well.
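One likely reason the posix form matters here is that run_command() passes the command through shlex.split(), which follows POSIX rules where a backslash escapes the next character. This sketch uses a made-up Windows path to show the difference; PureWindowsPath lets it run on any OS.

```python
from pathlib import PureWindowsPath
from shlex import split

# Hypothetical Windows venv path, for illustration only.
win_path = PureWindowsPath(r"C:\proj\.venv\Scripts\python.exe")

# With backslashes, split() consumes each one as an escape character.
mangled = split(str(win_path))
print(mangled)  # ['C:proj.venvScriptspython.exe']

# With forward slashes, the path comes through intact.
intact = split(win_path.as_posix())
print(intact)  # ['C:/proj/.venv/Scripts/python.exe']
```

Windows accepts forward slashes in paths passed to a process, which is why the as_posix() string still points at the right interpreter and script.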

With these changes all 47 tests still pass on macOS, and now they pass on Windows as well. I don’t have any reason to think they won’t work on Linux at this point, although I haven’t tested that yet. It’s also worth noting that GPT is really helpful for sorting out cross-OS compatibility issues. You can run your code on a different OS, give GPT the entire error message, and ask it for suggestions. It doesn’t fix everything, but it frequently points out a promising direction for what to focus on.

Programs that need to be run from a specific directory

Some of the basic programs need to be run from a specific directory. These are all programs that read from a file using a relative path.

I added a new list of programs to test along with the corresponding output, and made a second test function that’s almost identical to test_basic_program():

    import subprocess, sys, os
    ...

    ...

    # Programs that must be run from their parent directory.
    chdir_programs = [
        ("chapter_10/.../file_reader.py", "3.14..79"),
        ...
    ]

    @pytest.mark.parametrize("file_path, expected_output", basic_programs)
    def test_basic_program(python_cmd, file_path, expected_output):
        """Test a program that only prints output."""
        ...

    @pytest.mark.parametrize("file_path, expected_output", chdir_programs)
    def test_chdir_program(python_cmd, file_path, expected_output):
        """Test a program that must be run from the parent directory."""
        root_dir = Path(__file__).parents[1]
        path = root_dir / file_path

        # Change to the parent directory before running command.
        os.chdir(path.parent)

        # Run the command, and make assertions.
        cmd = f"{python_cmd} {path.as_posix()}"
        output = utils.run_command(cmd)

        assert output == expected_output

I called the new list chdir_programs, because these are basic programs where we must change directories before running them.

The new function is called test_chdir_program(), and the only difference between it and test_basic_program() is the call to os.chdir(). We change to the directory containing the file that’s being tested before running it, so the relative file paths work correctly.
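One thing to be aware of is that os.chdir() changes the working directory for the whole test process, and it stays changed for any tests that run afterward. That hasn’t caused problems in this suite, but if it ever does, one alternative is the cwd argument to subprocess.run(), which sets the directory for the child process only. Here’s a sketch of that idea; run_in_dir() and the file names are hypothetical, not part of the test suite.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path

def run_in_dir(cmd_parts, cwd):
    """Hypothetical helper: run a command with cwd set for the child only."""
    result = subprocess.run(cmd_parts, cwd=cwd,
        capture_output=True, text=True, check=True)
    return result.stdout.strip()

start_dir = os.getcwd()

with tempfile.TemporaryDirectory() as tmp:
    # A throwaway program that reads a file using a relative path.
    Path(tmp, "data.txt").write_text("3.14\n")
    Path(tmp, "reader.py").write_text(
        "from pathlib import Path\n"
        "print(Path('data.txt').read_text().strip())\n")

    output = run_in_dir([sys.executable, "reader.py"], cwd=tmp)

print(output)                    # 3.14
print(os.getcwd() == start_dir)  # True
```

The relative path resolves inside the temporary directory, and the test process never leaves the directory it started in.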

At this point, we have 60 passing tests:

    $ pytest -q
    ............................................................ [100%]
    60 passed in 0.87s

When I first wrote the test suite, I placed some programs that had import statements in this group. It turns out those work without calling os.chdir(), so I added those programs to the set of basic tests in this commit.

You might have noticed the significant overlap between these two test functions. It’s not unusual to have more repetitive code in test suites. I know I won’t be adding more test functions to this file, so I’m going to leave these two test functions as they are, without any further refactoring. I also know the codebase isn’t going to grow beyond what’s already in the book, so there’s no reason to think any more tests will be added to this file. Don’t refactor blindly; if you have clear reasons to think you’ve reached a point where a particular body of code is good enough, be willing to call it good enough and move on to other things.

Conclusions

There are a number of takeaways from this first phase of building out the test suite:

- Start by writing a single test, which helps to make sure your testing infrastructure is working. Make sure your first test passes, but also make sure it’s capable of failing.

- Learn to parametrize your tests. It can seem complex if you haven’t done it before, but with a little work you can go from tens or hundreds of individual tests, to one or two tests that are used repeatedly.

- Pull common setup tasks out into fixtures.

- Pull common non-setup tasks out into utility functions.

If you address some or all of these points, you’ll probably have a test suite that makes it easier to carry out ongoing development and maintenance work. You’ll also have a test suite that’s easier to understand, and easier to build on as your project evolves.

In the next post we’ll make sure we can run the test suite using multiple versions of Python. We’ll also write tests for the first project, a 2D game called Alien Invasion that uses Pygame. Even if you’re not interested in games, it’s an interesting challenge to write automated tests for a project that seems to require user interactions. There are plenty of takeaways from this work that apply to a wide variety of projects as well.

Resources

You can find the code files from this post in the mp_testing_pcc_3e GitHub repository.

[1] A lot of people seem to think that Hello World programs are written just so everyone, even people brand new to programming, can understand them. Most of the time, they serve a much more significant purpose: they demonstrate that a programming language, and some relevant tools, are set up correctly on a system.

If you can run a Hello World program, you can probably run the programs you’re interested in. If you can’t get a Hello World program to run, you won’t have any luck with more complex programs until you sort out whatever issue is keeping Hello World from running.

[2] Brandon Rhodes gave an excellent keynote at PyTexas 2023 titled Walking the Line. At one point he mentions the giddy but uneasy feeling we get when our tests start to pass consistently, while doing a bunch of refactoring work. Are we really that good, or are our tests not quite doing what we think they’re doing?

Making sure your tests can fail is a really important step in a number of different situations. If you haven’t seen a failing test in a while, consider introducing an intentional bug to make sure your tests can still fail, in the way you expect them to.

[3] If you haven’t heard this term before or you’re unclear about exactly what it is, a fixture is a resource that’s used by multiple test functions. It’s typically implemented as a function that returns a consistent resource or set of resources for every test function that needs it.