Testing a book's code: Overall conclusions

MP 84: Final takeaways from a detailed look at testing.

Note: This is the final post in a series about testing. This concluding post is free to everyone, and the earlier posts in the series will be unlocked over the coming weeks. Thank you to everyone who supports my ongoing work on Mostly Python.

I learned about testing fairly late in my career as a programmer. It wasn’t introduced in any of the classes I took when I was younger, and the intro and intermediate books I read over the years didn’t mention it in any prominent way. As soon as I learned the basics of testing, I wished I had learned about it earlier.

Testing is often thought of as something separate from development, but it doesn’t have to be. Testing a project effectively is an interesting challenge, and often pushes you to understand your project in new and meaningful ways.

I’ve had the good fortune to get to maintain a comprehensive introductory Python book for almost a decade now. That’s a time period where building out a thorough test suite is worth the effort. The challenges that came up in this work highlight some aspects of testing that don’t necessarily come up when developing a test suite for a more traditional software project. In this final post I’ll highlight some of these takeaways.

General takeaways

There are a number of big-picture takeaways from this work:

Think about your testing constraints

When you’re in charge of writing a test suite, you end up looking at your project in a different way. Instead of thinking about the people who use your project, you have to write a program that uses your project. If your project wasn’t designed to be used in a fully automated way, you’ll have to find a way to exercise the code from within the test suite. Sometimes you can change the actual project to make it easier to test, but sometimes there are very good reasons to keep the project as is, and come up with a test suite that handles the current structure of the project.

Code for a book is a perfect example of this kind of situation. When someone writes code for a book, they tend to prioritize simple examples that highlight the topic that’s being taught. Often this means leaving out some elements that we’d include in a real-world project, because they would distract people from learning about a specific topic.

In a more traditional project, you might have to test code that’s already being used in critical ways, or by a significant user base. It might be necessary to test the code as is, before making any changes just for the purposes of testing. Learning to recognize the constraints you’re working with is an important skill in testing.

Think about possibilities

All that said, you can be quite creative in developing your test suite. As an author, I can’t change any of the code I’m testing. But I can copy that code to a new repository or a temp directory, and do anything I want with it from that point forward.

In many testing tutorials and references, we work with code that generates text output. It’s often somewhat straightforward to verify whether text output is correct. However, most real-world output is more varied than that. If you’re working with text output, maybe some elements of the text are always changing, such as timestamps. Maybe you’re working with image output, or HTML, or even sound files. It’s not always obvious how to verify that non-text output is “correct”.
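As a rough sketch of one way to handle text that always changes, you can replace the changing parts with a fixed placeholder before comparing. The timestamp format, the function name, and the sample output below are all assumptions made for illustration; they aren't taken from any particular project.

```python
import re

# Assumed timestamp format: "2024-03-01 14:05:09". Adjust the pattern to
# match whatever your project actually writes.
TIMESTAMP_RE = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")


def mask_timestamps(text):
    """Replace every timestamp with a fixed placeholder."""
    return TIMESTAMP_RE.sub("<TIMESTAMP>", text)


def test_report_output():
    # Hypothetical output from two different runs of the same program.
    reference = "Report generated 2024-03-01 14:05:09\nTotal: 42\n"
    actual = "Report generated 2025-11-17 08:30:00\nTotal: 42\n"
    assert mask_timestamps(actual) == mask_timestamps(reference)
```

The comparison still fails if anything other than the timestamp differs, which is the behavior you want.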

I think a lot of testing resources talk about assertions where all output is either correct or incorrect. But I’ve run into many situations, especially when testing cross-platform code, where this is the actual question that needs to be asked:

Is this output close enough to what it’s supposed to be?

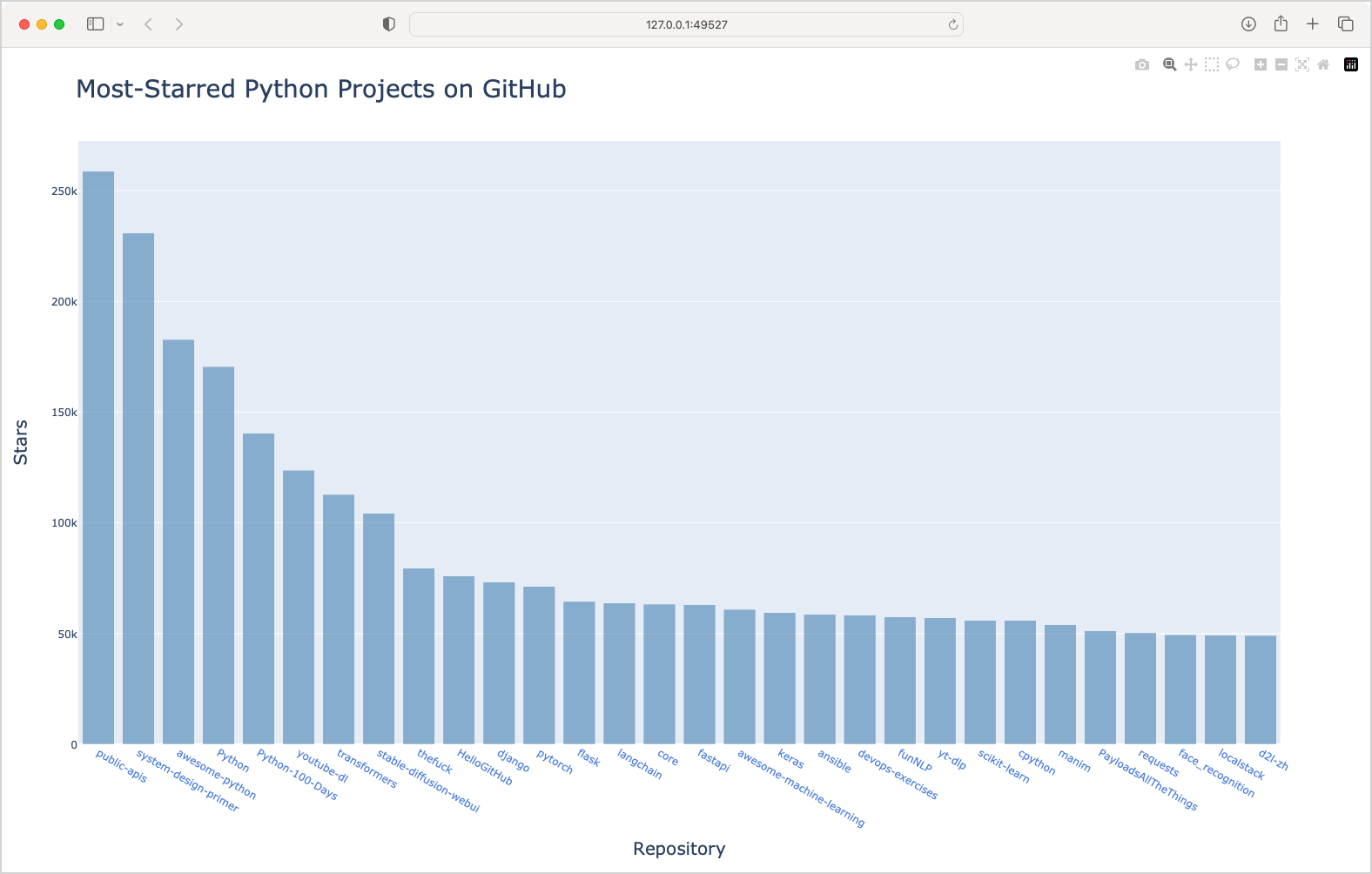

It’s not particularly difficult to write assertions that check whether output is “good enough”, but I don’t see it covered all that often. For example when testing output in the form of a plot image, it was satisfying to come up with an approach that looks at the average color values of the entire plot, rather than trying to make assertions about the actual data represented in the plot. I have no idea if this is the best approach to testing graphical output that can vary over time, but it’s certainly good enough for my purposes at this point.
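Here’s a minimal sketch of what a “good enough” assertion about average color might look like. It’s an illustration of the idea rather than the code used in this series: the function names and the tolerance are assumptions, and the pixel data is a stand-in for values you’d read from real image files with an imaging library.

```python
import math


def average_color(pixels):
    """Return the mean (R, G, B) value of a sequence of RGB tuples."""
    count = len(pixels)
    return tuple(
        sum(pixel[channel] for pixel in pixels) / count for channel in range(3)
    )


def colors_close(actual, expected, tolerance=5.0):
    """Return True if every channel is within `tolerance` on a 0-255 scale."""
    return all(
        math.isclose(a, e, abs_tol=tolerance) for a, e in zip(actual, expected)
    )


def test_plot_is_close_enough():
    # Stand-ins for pixel data: a mostly white plot with some blue marks.
    reference_pixels = [(255, 255, 255)] * 90 + [(30, 100, 200)] * 10
    new_pixels = [(255, 255, 255)] * 89 + [(32, 101, 198)] * 11
    assert colors_close(average_color(new_pixels), average_color(reference_pixels))
```

The tolerance is the interesting design choice here. It needs to be loose enough to absorb rendering differences across platforms and library versions, but tight enough that a blank or badly broken plot still fails.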

Think about dependencies

The standard view of testing is that we should test against all versions of the language that the project currently supports, and multiple versions of its main dependencies. But this dependency matrix can grow quite large. Although there are tools that help you manage testing against that entire matrix, you don’t always need to test against the entire matrix.

In the context of testing code from a book, I’m primarily interested in testing against release candidates of new versions of Python, and major libraries such as Pygame, Matplotlib, Plotly, and Django. I don’t need to test against a full matrix of different versions of these libraries; instead I need to be able to name the specific version that I want to test against during the current test run.

I’m working on a deployment-focused project that probably doesn’t need to maintain backwards compatibility as carefully as many other projects. This project should only be run once against any given target project. It shouldn’t be an issue for someone to just install the latest version and then carry out their deployment. The test suite for that project is being developed with a focus on testing the current version of the library, and probably won’t do extensive testing against previously-released versions.

Think about test artifacts

This point deserves a post, or even a series, of its own. A test suite runs your project in various ways. As such, it can create any artifacts needed for automated testing, and any artifacts that you might want to open and interact with manually.

For example, it might be possible to write a plot image to memory instead of to a file, and avoid the overhead of reading and writing files during a test suite. But I sometimes want to go to the temp directory used by the test suite, and open up plot images to look at them. Even if tests pass, I might want to see what the plot looks like under current testing conditions. If a test fails, I might want to look at the image that was generated during the test run, instead of having to run the project manually as part of my debugging work. I’ll say this over and over again: a good test suite can act as a development tool, not just a verification tool.

Think about goals

For people who haven’t done a lot of testing work, the perception can be that testing is entirely about the binary question “Does this code work correctly or not?” But a well-developed test suite should answer a specific set of questions that you have, which meet your goals in developing and maintaining the project:

What parts of this project work as expected?

What parts are starting to work differently than expected?

If part of this project is behaving differently than it used to, what is that difference?

For example if a page in a web project fails to load, is the error on that particular page, or was there an error in a different part of the project that indirectly affects other pages? When this kind of bug occurs, will your test suite lead you efficiently to the root cause, or will it focus on the symptom of the underlying problem? I haven’t always known that a test would help me in this way, but I’ve certainly seen some testing approaches generate more helpful information than others.

There’s a tendency to think that tests should run as fast as possible. But we’re actually after a balance between speed, test coverage, and information generated. If you focus exclusively on speed, you might miss out on generating some information and artifacts that can be really helpful in debugging and development work. If you generate too much information, you might make it difficult to use the output of your test suite.

Make sure your tests can fail

Sometimes we focus so much on the desired outcome of passing tests that we forget to make sure a test can fail. If you haven’t seen a failure in a while, consider introducing an intentional bug to see if the expected failures appear.

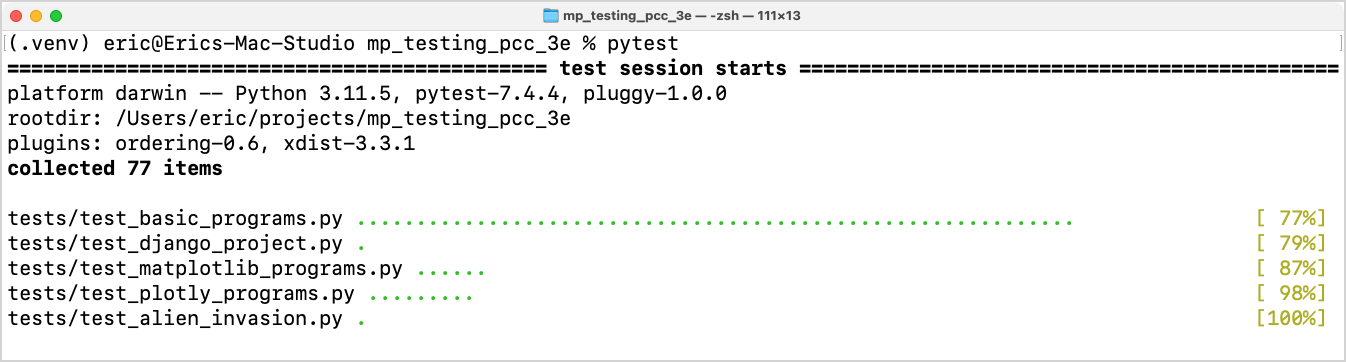

pytest-specific takeaways

pytest is a fantastic library. It’s one of those amazing libraries that’s simple enough in its most basic usage that people entirely new to testing should start using it. At the same time, it’s flexible and powerful enough that it’s perfectly appropriate to use in large, fully-deployed commercial projects.

You can get started with pytest from a simple tutorial. But there are lots of specific things to learn about it that will help you write exactly the kind of test suite that suits your needs. Also, there are a number of ways to run your tests, so you can use them in exactly the way you need at any given time.

Use parametrization

At first glance, parametrization seems like a way to write less code. But that’s just one benefit. The real power of parametrization comes from developing a highly consistent way to run a series of similar tests. In the context of this test suite, two test functions resulted in 60 tests being run.

Once you’re familiar with the concept and syntax of parametrization, it lets you generate a large number of tests by writing a small number of test functions. Although it can look more complex to people unfamiliar with parametrization, it’s actually a much more maintainable way to develop a growing test suite.
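To make this concrete, here’s a small sketch of a parametrized test. The slugify() function and its test cases are hypothetical, made up purely for illustration; the decorator is pytest’s standard pytest.mark.parametrize.

```python
import pytest


# Hypothetical function under test.
def slugify(title):
    return title.strip().lower().replace(" ", "-")


# Each tuple becomes its own test, reported separately by pytest.
CASES = [
    ("Hello World", "hello-world"),
    ("  Testing  ", "testing"),
    ("already-a-slug", "already-a-slug"),
]


@pytest.mark.parametrize("title, expected", CASES)
def test_slugify(title, expected):
    assert slugify(title) == expected
```

Covering a new case means adding one line to CASES, and every case runs through exactly the same test logic.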

Use fixtures

Fixtures are used to carry out the setup work required for test functions. Fixtures can do something, such as building a virtual environment for a set of tests. They can also return resources needed for tests. By defining a fixture’s scope, you can have it run once for the entire test session, or once for each module, class, or function that uses it.

As with many features of pytest, you can start out by writing a simple fixture that carries out a small task or creates a small resource for several test functions. As your experience and understanding grows, you can use fixtures in increasingly complex and powerful ways.
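As a minimal sketch, here’s a session-scoped fixture that builds a resource once and shares it with several tests. The workspace layout and the build_workspace() helper are assumptions made for this example; tmp_path_factory is pytest’s built-in fixture for creating temp directories outside of function scope.

```python
from pathlib import Path

import pytest


def build_workspace(root):
    """Create the directory and sample file that the tests rely on."""
    workspace_dir = Path(root) / "workspace"
    workspace_dir.mkdir()
    (workspace_dir / "data.txt").write_text("alpha\nbeta\n")
    return workspace_dir


@pytest.fixture(scope="session")
def workspace(tmp_path_factory):
    # Runs once per test session; every test that requests `workspace`
    # receives the same directory.
    return build_workspace(tmp_path_factory.mktemp("session"))


def test_data_file_exists(workspace):
    assert (workspace / "data.txt").exists()


def test_data_file_has_two_lines(workspace):
    assert len((workspace / "data.txt").read_text().splitlines()) == 2
```

Keeping the real setup work in a plain function like build_workspace() also means you can call it outside of pytest when you want to inspect what the fixture produces.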

Use parallel test execution

By installing the pytest-xdist package, you can run many kinds of tests in parallel. If your tests are starting to take a noticeable amount of time to run, see if a simple call to pytest -n auto speeds up your test run.

Parallel tests don’t always work well if your tests access external resources, or have complex setup steps. But you can install pytest-xdist and run a subset of your tests in parallel, and run tests that don’t work well in parallel separately.

Use custom CLI args to enhance your test suite

In this series I used a set of custom CLI args to specify exactly which version of a library to use during the test run. This is particularly useful when testing against release candidates of third-party libraries.

You can use CLI args in all kinds of ways. For example, imagine your test suite generates a large number of image files and then compares those against a set of reference images. An update to a library you use changes the output images slightly, but in an acceptable way. You could go into your test’s temp directory, check the images, and then manually copy them over to your reference_files/ directory. But if you do this on a regular basis, you could write a CLI arg that lets you run a command like this:

$ pytest tests/test_image_output.py --update-reference-files

You could then write code that copies each newly-generated image file to reference_files/ when this flag is included.

Learn to use pytest’s built-in CLI args

pytest has a significant number of CLI args that you can use to run exactly the tests you want, generating exactly the kind of output you want on any given run. For example, here are a few of my most-used arguments:

- The -k argument lets you specify tests matching a specific pattern. This can include test function names or parts of names, and parameters as well.
- The -x argument stops at the first test failure.
- The --lf argument re-runs only the tests that failed during the previous run.
- The -s flag turns off output capturing, so anything your tests print is shown as they run.
- I recently learned about the --setup-plan argument, which shows exactly what pytest will run, in what order. This doesn’t just show what order the tests will be run in. It also shows when each fixture will be called, and how often they’ll be called. You can see how it runs fixtures before test functions, and how it uses parametrization to repeat tests with different parameters. It’s an amazingly helpful tool for understanding more complex aspects of pytest.

Understand the role of multiple conftest.py files

You can write a conftest.py file in any directory within your test suite, including your project’s root directory. This lets you structure your test suite efficiently. For example if a fixture is used by multiple modules, you can write that fixture once in a conftest.py file in the directory containing those modules. You don’t need a copy of the fixture in every test module.

Some people don’t like seeing multiple conftest.py files, because it can be less clear where the fixtures used in a test function are coming from. A good principle to minimize confusion is to place fixtures as close as possible to the tests that use them. For example if you have tests in a subdirectory, put the fixtures that are only used by those tests in that directory’s conftest.py. Don’t dump all of your fixtures into your root directory’s conftest.py file.

Minor takeaways

If you’ve made it this far, you must be interested in testing! Here are a few smaller things that are worth mentioning:

Consider making assertions about your tests

At the heart of every test is an assertion about your project’s behavior or output. But if there’s any complexity to your test suite’s setup, consider writing assertions about that setup work itself. This is especially important to consider if there’s a chance the setup work might not be completed correctly, in a way that would still allow the tests to pass. Or, if a step in the setup work fails you might want to just exit the test suite and deal with that setup failure.
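As a rough sketch, here’s a setup step that checks its own work. The scenario is an assumption for illustration: a helper (the hypothetical copy_example_programs()) copies a directory of programs before they’re tested. If that copy silently produced an empty directory, tests built from the list of copied files would have nothing to check, and the run would report no failures.

```python
import shutil
from pathlib import Path


def copy_example_programs(source_dir, dest_dir):
    """Copy the programs to be tested, then verify that the copy worked."""
    source_dir, dest_dir = Path(source_dir), Path(dest_dir)
    shutil.copytree(source_dir, dest_dir)

    expected = sorted(path.name for path in source_dir.glob("*.py"))
    copied = sorted(path.name for path in dest_dir.glob("*.py"))

    # Assertions about the setup work, not about the project's behavior.
    assert expected, f"No programs found in {source_dir}"
    assert copied == expected, "Some programs were not copied"
    return [dest_dir / name for name in copied]
```

A failure here points straight at the setup step, instead of showing up later as a confusing test failure or, worse, as no failure at all.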

Consider modifying a copy of your project’s code

Some projects are more “testable” than others. If your code seems untestable as currently written, you should probably restructure your code so it’s easier to test the overall project and its component parts. But if you can’t modify your code for some reason, consider making a copy of all or part of the project, modifying the copy, and then making assertions against the modified code. For example you might modify your code to write output to a file instead of displaying it on the screen. Then you can make assertions about the contents of that file.
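Here’s a minimal sketch of that approach, using only the standard library. The program being tested, the file names, and the run_modified_copy() helper are all made up for this example. The original source is never changed; only the copy written to the working directory is.

```python
import subprocess
import sys
from pathlib import Path

# Stand-in for a program whose source can't be changed in place.
ORIGINAL_SOURCE = 'greeting = "Hello, reader!"\nprint(greeting)\n'


def run_modified_copy(source, work_dir):
    """Write a modified copy of `source` to work_dir, run it, return its output."""
    work_dir = Path(work_dir)
    # Swap the on-screen output for a write to a file.
    modified = source.replace(
        "print(greeting)",
        'with open("output.txt", "w") as f:\n    f.write(greeting)',
    )
    # Setup check: make sure the replacement actually happened.
    assert modified != source

    script = work_dir / "hello_modified.py"
    script.write_text(modified)
    subprocess.run([sys.executable, script.name], cwd=work_dir, check=True)
    return (work_dir / "output.txt").read_text()


def test_greeting(tmp_path):
    assert run_modified_copy(ORIGINAL_SOURCE, tmp_path) == "Hello, reader!"
```

Simple string replacement is fragile if the original code changes, which is why the sketch asserts that the replacement was made before running anything.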

Consider testing a subset of your project’s codebase

Chasing “100% test coverage” can be an exercise in over-optimization. There may well be other things that are more worth pursuing than complete test coverage of your codebase. Identify the most critical aspects of your project, and make sure to test those behaviors and sections. Also, if some sections of code are repetitive and a successful test implies correct behavior for the repeated parts, ask yourself if it’s reasonable to only test one or several of the repeated behaviors.

Don’t refactor just to refactor

Most of us are taught to refactor code that appears multiple times in a project. In a test suite, that’s much less important. Repetition is okay in testing, because it’s often helpful to have enough context in a test function to assess a test failure without having to trace through a complex hierarchy of code. Refactor your tests as needed to understand and maintain the evolving test suite, but don’t refactor just because you see repetition in your tests.

Use a seed to test random code

If your project uses randomness at all, consider using a random seed to get repeatable pseudorandom output.
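As a small sketch, here’s one way to make random behavior repeatable in a test. The roll_dice() function is hypothetical. Passing in a random.Random instance is one design option; calling random.seed() before running the code under test is another.

```python
import random


# Hypothetical function under test. Accepting a Random instance lets the
# test control the seed without touching the global random state.
def roll_dice(num_rolls, rng=None):
    if rng is None:
        rng = random.Random()
    return [rng.randint(1, 6) for _ in range(num_rolls)]


def test_roll_dice_is_repeatable():
    first = roll_dice(10, random.Random(42))
    second = roll_dice(10, random.Random(42))
    assert first == second
    assert all(1 <= roll <= 6 for roll in first)
```

Once the output is repeatable, you can also compare it against stored reference output, just as you would for code that doesn’t use randomness.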

Be wary of metadata, and other minor file differences

If you’re comparing newly-generated files against a set of reference files, be wary of metadata. In several projects now, I’ve spent a lot of time trying to figure out why two seemingly identical files fail a comparison test. Sometimes it’s a piece of metadata generated by a library used in generating the output file. Sometimes it’s a minor difference in how output is written on different operating systems. If files appear identical but fail a comparison test, figure out how to view the metadata on your system and take a look at it for your test file and your reference file.
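One common OS-level difference in text files is line endings. As a rough sketch, and assuming that’s the only difference you want to ignore, you can normalize both files before comparing them. The function names here are made up for illustration, and the approach is only appropriate for text files.

```python
from pathlib import Path


def normalized_bytes(path):
    """Read a text file as bytes, converting Windows line endings to Unix ones."""
    return Path(path).read_bytes().replace(b"\r\n", b"\n")


def files_match(new_file, reference_file):
    """Compare two text files, ignoring line-ending differences."""
    return normalized_bytes(new_file) == normalized_bytes(reference_file)
```

Reading the raw bytes matters here: opening the files in text mode can translate line endings automatically, which hides exactly the difference you’re trying to understand.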

Write your tests on one OS, and then generalize to others early

If your test suite will need to pass on multiple operating systems, write a small number of tests that work on your main OS. Before expanding your suite too significantly, run those tests on the other OSes you need to support, and figure out how to make them pass there as well. You can save yourself some refactoring work and some complexity by discovering cross-platform issues early on. You might learn more about how your project and its dependencies work on different OSes as well.

Conclusions

There are a lot of tutorials out there about testing example projects. But testing a real-world project often brings up challenges that don’t come up in clean sample projects. Most people reading this series probably aren't writing technical books, but the issues that arose in developing this suite are comparable to the issues that people face when developing a test suite for a real-world project.

I love testing, because it helps me sleep better at night. If I’ve tested my code against a release candidate of a library, I don’t worry about a slew of bug reports when that new version comes out. If I have to put a project aside for a while, it’s easier to pick up work on that project months later, or even years later, if it has at least a basic test suite. When resuming work on the project, I can run the tests and see if anything has broken for any reason in that project’s down time.

I also love hearing people’s testing stories, and hearing the questions people have about testing. If you have stories about test suites that saved you at some point, or tests that were frustrating in some way, please share them. If you have questions about how to approach some aspect of testing, I’d love to hear those as well. If your question is better asked privately than in comments, feel free to reply to this email. I can’t promise to have an answer, but I’ll be happy to share any thoughts I have that might help.