It's your job to keep AI at arm's length

MP 111: Don't throw away hard-earned trust in a rush to embrace AI.

The AI hype cycle is continuing to run its course, and will likely do so for some time to come. Hype around things like NFTs tends to peak and then fade away quickly, because there's no actual (legitimate) use case for NFTs. There's plenty of hype around the current generation of AI developments and tools, but there are also many appropriate uses of this technology.

When a technology has lots of hype and lots of meaningful applications, it takes ongoing work to sort out appropriate boundaries for how it should be used. To help identify those boundaries, I look for specific cases that illustrate core issues.

A recent AI-related issue didn't seem to get as much attention as it deserved. A hiker in Wyoming fell to their death on Teewinot, a mountain in the Teton range. The American Alpine Club, which has a long history of analyzing accidents in the mountains, pointed to the use of AI summaries of mountaineering route information as one possible root cause of this incident, and of other recent incidents as well.

The incident

Teewinot is a 12,330-foot mountain in Grand Teton National Park, in Wyoming. Because it's lower in elevation than the Grand Teton (13,775 feet), Teewinot is sometimes perceived to be an easier mountain than the Grand by people who aren't experienced mountaineers. That perception isn't accurate at all. Teewinot is one of the deadliest mountains in the area. It's easy to get off route, and once you're off route it's easy to take a long fall with serious consequences if you aren't using appropriate rock climbing gear and techniques.

On August 10, 2023, a group of nine climbers attempted Teewinot. One of the climbers suffered a fatal fall just before reaching the summit. A team of rescuers assisted the rest of the group overnight, and they were evacuated by helicopter the next morning.

The role of AI in this specific incident

The American Alpine Club publishes accounts of significant incidents in various mountain ranges, with the goal of helping people identify root causes and avoid similar accidents in the future. In a recent article, AAC editor Pete Takeda discussed this incident along with another rescue on Teewinot where inexperienced people ended up stranded in steep terrain. It's worth noting that Takeda has decades of climbing experience and has been writing about mountaineering throughout his career.

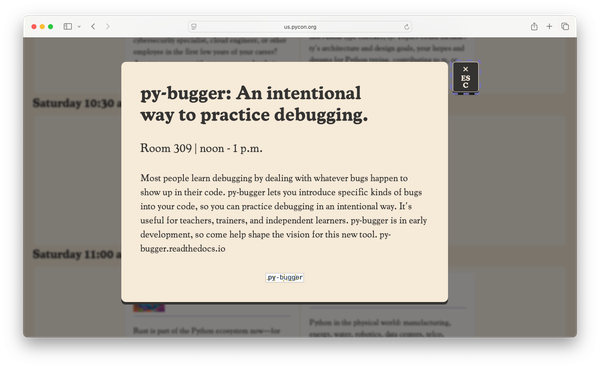

The main point of the article was that both parties had consulted web sites that were largely aimed at hiking communities, rather than climbing communities. One site in particular, AllTrails, had recently started showing AI summaries of specific mountains. On the Teewinot page, the main route was described as a trail, not a climb. This was quite different from many of the comments that experienced people had left. The human-generated comments made specific reference to the need for climbing gear and experience, and emphasized that this was not just a hiking trail.

While the AI-generated summary offered some cautions about the difficulty of the route, it lacked the specific warnings a climber with relevant experience would have written. Here's how the AAC editor described the issue:

The most disturbing representation was on AllTrails.com. On the page for Teewinot, the climb was referred to as a “trail” not once, but three times (See Fig 1.). The strongest warning given was to “proceed cautiously” on a “highly challenging” route that “should only be attempted by experienced adventurers.” In contrast, the Teewinot trail reviews posted by members revealed a different reality.

It really seems that anyone with a clear understanding of the strengths and limitations of AI tools would have anticipated this kind of issue. AI assistants are good at generating summaries that are then reviewed by people with relevant experience and expertise. AI assistants are not good at generating summaries for people without relevant experience, who will then make important decisions based on that information.

Keeping AI at arm's length

The terms "site administrator" and "site owner" carry interesting connotations these days. Originally, a site owner or administrator was responsible mainly for the static content on a web site. Today, most "sites" are really interactive applications, so owners and administrators are responsible for a much more complex set of functionality.

I use both terms, "owner" and "administrator", because people in each of these roles carry a responsibility to clearly understand both the intended and actual usage of the site. They also have a responsibility to critically examine how introducing AI tools may affect that actual usage, and the decisions people are likely to make based on it.

Users know how to evaluate human-generated content

Users have decades of experience evaluating human-generated content. For example, most reasonable users of a site like AllTrails know how to evaluate a set of descriptions of a trail that other users have submitted. People know how to assess the accuracy of one description that differs significantly from most other descriptions. Sometimes that one description is more accurate than most others, because it was written by a user with relevant experience. Sometimes it's clear that the outlier was written by a user with little relevant experience, and their perspective can be largely ignored.

People also trust that a summary on a site like AllTrails is written by someone with at least some kind of qualification: maybe they're an experienced and trusted user, or maybe they're a paid staff member with relevant expertise.

People are inexperienced in evaluating AI-generated content

I recently took a three-day ferry trip from Alaska to Washington state, and then drove across the US to North Carolina. Before the trip, I was looking forward to conversations with strangers across the country about how people are using and reacting to AI tools. The entire trip was a stark reminder of how little most people outside the tech world care about AI, if they're even aware of it at all.

When an AI tool is let loose to generate content on a site, most people are not prepared to evaluate that content effectively. This is especially true for sites in domains where many users have no particular interest that would have already given them experience evaluating and understanding AI-generated output.

There are some domains where mistakes interpreting AI-generated content will have little meaningful impact on users' lives. For example, if a bird-identification app misidentifies a bird you just saw, you probably won't get hurt or killed. But there are many domains where misinterpretation of content can have a significant and profound impact on people's lives. Many of these are no longer just fun sites where users with similar interests gather. Sites that center around user-generated content have often evolved into tools people use to make decisions that can have lasting impacts on their lives.

It's your job to maintain the integrity of your site

One of the most valuable assets an established site can have is the trust of its users. It takes years to establish the kind of integrity that builds trust with individual users and with a community of users. That trust can disappear in a relative instant if owners and administrators neglect to think critically about how they've gained the trust of their users, what the intended and actual usage of the site is, and the ways AI tools may impact all of this.

Don't blindly buy into AI hype. Keep humans with domain expertise involved. Don't push out your expert humans under the misguided notion that you don't need them anymore. Consider how AI tools might help you in your work, but have a clear approach to keeping AI at arm's length as well.

Note: I first heard about this incident through Sarah Moir's article "AI and a duty of care." If the points here resonate with you, I highly recommend her take as well.