How clean are your backups?

MP 100: And what about your archives?

Note: The Django from first principles series will continue next week.

Next month we're moving from our home on an island in southeast Alaska to a small town in western North Carolina. We're using the move as an opportunity to purge all the little things that accumulate after a couple of decades in one place. Old USB sticks and external drives are among the things that tend to pile up.

I've spent some time this past week copying anything meaningful from a small pile of external drives to the two larger drives I'll keep using. Cleaning out these older drives was way more interesting than I expected. Familiarity with the pathlib module made this work much more manageable than it might otherwise have been.

If you've got an archive that's grown over a period of years, and you've been avoiding the task of simplifying and organizing it, I think there are a few things from my recent experience that might be helpful to know about.

How many files?!

Most of the work copying files from small older drives to one of the two newer drives was going well. It was slow because these were older drives, but everything seemed to be copying over fine.

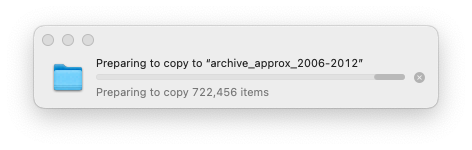

At one point I had a stack of four small USB drives left to copy. The first three went well. But the last drive, the oldest, did not seem to be going so well:

I connected the drive, selected everything on it, and dragged it over to my main backup drive. I saw Finder's "Preparing to copy..." popup, which is pretty typical to see when copying a bunch of files. But the number of files it was preparing to copy just kept growing and growing.

I assumed that, earlier in my career, I had included a bunch of virtual environments in these backups.[1] Virtual environments can have on the order of 10,000 files in them, so it wouldn't take many to generate the number of items I was seeing on this old drive.

I planned to let the copy run, and then delete any unneeded files from the main drive. I didn't want to try to modify the old external drive itself. For one thing I didn't want to delete the wrong files accidentally. But I also wanted to avoid messing around much with a drive that was close to 15 years old.

This initial attempt to copy the drive failed, so I just went through the drive and copied the directories I recognized as meaningful. There were a bunch of folders with names like projects/, archive/python_work/, and archive/other/projects_archive_from_12.04. I copied all these directories, but skipped over a bunch of old Linux system directories. I still assumed there were a number of nested virtual environments sprinkled throughout these older backups.

Exploring the archive

At one point I had copied all the directories that might contain something meaningful over to my main 8TB external drive. I still had the older drives, so I felt free to do some cleanup work on the newly created archive before getting rid of them.

I wrote a Python script to explore the archive. This script was really just a scratchpad that let me explore and manipulate the archive as I did my cleanup work. It never reached a final state; I just kept modifying it until I had finished cleaning up the archive.

Here's the first bit, just to find out how many files and directories are in the archive:

```python
from pathlib import Path

# Get external drive, make sure it exists.
path_ext_drive = Path("/Volumes") / ... / "old_hdd_backups"
assert path_ext_drive.exists()

# --- all files and dirs ---
# How many files and directories in the entire path?
all_files_dirs = list(path_ext_drive.rglob("*"))
print(f"all files dirs: {len(all_files_dirs):,}")
```

I want to emphasize that this is not clean code that anyone else should run. This is code I used during a single, once-a-decade deep dive into my own archive.

This code builds a path to the archive on my main external drive. Rather than use the generic name path, I gave it a very specific name, path_ext_drive. I really didn't want to accidentally delete that path at some later point.

The call to rglob("*") performs a recursive glob: it walks the entire directory tree below path_ext_drive and matches every path against the pattern "*", a wildcard that matches any name. Wrapping it in list() collects the full path to every file and folder in the archive.
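To see that rglob() really does walk the whole tree, and that it returns directories along with files, here's a small sketch that builds a throwaway directory tree and tallies the two kinds of paths separately. The helper name count_files_and_dirs() is hypothetical; it isn't part of the original script.

```python
import tempfile
from pathlib import Path


def count_files_and_dirs(root: Path) -> tuple[int, int]:
    """Count files and directories at any depth below root (root itself excluded)."""
    n_files = n_dirs = 0
    # rglob("*") yields every path in the tree, not just the top level.
    for path in root.rglob("*"):
        if path.is_dir():
            n_dirs += 1
        else:
            n_files += 1
    return n_files, n_dirs


# Build a tiny throwaway tree: two directories and two files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "projects" / "demo").mkdir(parents=True)
    (root / "projects" / "demo" / "app.py").write_text("print('hi')\n")
    (root / "notes.txt").write_text("todo\n")
    print(count_files_and_dirs(root))  # (2, 2)
```

Because rglob() returns a lazy iterator, a count like sum(1 for _ in root.rglob("*")) avoids holding every path in a list at once, which may matter for a very large archive.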

Knowing there were hundreds of thousands of files across all these drives, I had no idea how many files and directories I'd find, and whether pathlib would be efficient enough for this work. I unironically wondered if I'd need to rewrite this code in Rust. :)

To my surprise, it only took a second or two to generate the results:

```
$ python drive_analyzer.py
all files dirs: 156,406
```

This wasn't too bad. I'm sure I had over a million files spread across the various old drives I was working with. My manual exclusion of unneeded system directories had left out a bunch of cruft already.

Still, 156k files and folders is probably more than I need. There must be some virtual environments, unneeded files, and duplicates in there. Let's take a closer look at what's actually in the archive.

Are there any virtual environments?

These days, almost everyone names their virtual environments .venv/. But 15 years ago, there was no clear convention. Back in those days I used names like tutorial_host_env and standards_project_venv.

I added a section to find these directories:

```python
venv_dirs = list(path_ext_drive.rglob("*_env"))
venv_dirs += list(path_ext_drive.rglob("*venv"))
venv_dirs += list(path_ext_drive.rglob("*venv*"))

for venv_dir in venv_dirs:
    print(venv_dir.as_posix())
```

To my surprise, I only found 3 virtual environment directories in the entire archive. Between reasonable backup strategies in the past and selective copying from old drives more recently, I hadn't actually copied many virtual environments into this latest archive.

I printed these directories and looked at them in Finder to make sure they were actually virtual environments. I had no reason to think they wouldn't be, but once I deleted these directories they'd be gone for good. I wanted to be absolutely sure of what I was deleting.
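The three patterns above overlap: every name that matches "*venv" also matches "*venv*", and "*venv*" also matches paths inside an environment, such as the pyvenv.cfg file that environments created with the venv module contain. Here's a hedged sketch of how the candidate list could be tightened before anything is inspected or deleted: keep only directories, drop duplicates, and drop any candidate nested inside another candidate. The function name and its default patterns are assumptions for illustration, and Path.is_relative_to() needs Python 3.9 or later.

```python
from pathlib import Path


def top_level_venv_dirs(root: Path, patterns=("*_env", "*venv*")) -> list[Path]:
    """Return directories matching any pattern, without duplicates or nested matches."""
    # A set removes duplicates; "*venv*" already covers everything "*venv" matches.
    candidates = {
        path
        for pattern in patterns
        for path in root.rglob(pattern)
        if path.is_dir()
    }
    # Sorting puts every parent before its children, so anything inside
    # a directory we've already kept can be skipped.
    kept: list[Path] = []
    for path in sorted(candidates):
        if not any(path.is_relative_to(parent) for parent in kept):
            kept.append(path)
    return kept
```

Printing the result and checking each directory by hand is still the real safeguard; name patterns can match ordinary project folders too.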

Here's the code to delete the virtual environment directories that were found:

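```python
import shutil

# Remove venv dirs.
for venv_dir in venv_dirs:
    # The patterns overlap, so a path can appear twice, or sit inside a
    # directory that was already removed; only remove directories still present.
    if venv_dir.is_dir():
        shutil.rmtree(venv_dir)
```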

You can use path.rmdir() to delete empty directories. But if you want to delete a directory and all its contents, you need to use shutil.rmtree().
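A quick way to see the difference is to try both on a throwaway directory that isn't empty. This sketch uses a temporary directory rather than anything from the archive; the function name is hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def compare_rmdir_and_rmtree() -> tuple[bool, bool]:
    """Show rmdir() refusing a non-empty directory, and rmtree() removing it."""
    with tempfile.TemporaryDirectory() as tmp:
        full = Path(tmp) / "full"
        (full / "sub").mkdir(parents=True)
        (full / "sub" / "file.txt").write_text("data")

        # Path.rmdir() only removes empty directories; otherwise it raises OSError.
        try:
            full.rmdir()
            rmdir_failed = False
        except OSError:
            rmdir_failed = True

        # shutil.rmtree() deletes the directory and everything inside it.
        shutil.rmtree(full)
        return rmdir_failed, full.exists()


print(compare_rmdir_and_rmtree())  # (True, False)
```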

After removing these directories, I could see a significant drop in the number of files and directories:

```
$ python drive_analyzer.py
all files dirs: 142,159
```

Virtual environments have a lot of files; deleting just three of them removed more than 14,000 files and directories.

What about __pycache__ directories?

There are a number of other files you don't need to keep around in archives of Python projects. Let's look for __pycache__ directories:

```python
pycache_dirs = list(path_ext_drive.rglob("*pycache*"))
```

I found 206 __pycache__ directories in the archive. Removing these brought the total count of items in the archive down to 141,447. After removing the __pycache__ directories, I still found 262 .pyc files. I didn't find any .pyi files.
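Here's a sketch of how this bytecode cleanup might look as a single helper that only reports by default. It matches __pycache__ exactly rather than with "*pycache*", and it also catches stray .pyc and .pyo files outside any __pycache__ directory. Python 2 wrote compiled files next to their sources, which is one plausible origin of leftover .pyc files in an old archive. The function name and its delete parameter are assumptions.

```python
import shutil
from pathlib import Path


def clean_bytecode(root: Path, delete: bool = False) -> list[Path]:
    """Find __pycache__ dirs and stray .pyc/.pyo files; remove them only if delete=True."""
    # Exact name match, so no unrelated "*pycache*" names get swept up.
    pycache_dirs = [p for p in root.rglob("__pycache__") if p.is_dir()]
    # Compiled files living outside any __pycache__ directory.
    stray_files = [
        p
        for p in root.rglob("*")
        if p.suffix in (".pyc", ".pyo")
        and p.is_file()
        and "__pycache__" not in p.parts
    ]
    targets = pycache_dirs + stray_files
    for path in targets:
        print(("Deleting: " if delete else "Would delete: ") + path.as_posix())
        if delete:
            if path.is_dir():
                shutil.rmtree(path)
            else:
                path.unlink()
    return targets
```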

Putting some guardrails up

At one point I asked GPT for suggestions about what kinds of files I might look for that don't need to be kept in an archive. I mentioned that I'd done work in Python, Java, JavaScript, Bash, and a bunch of other languages over the years. One of the suggestions was to look for directories named target/, which it said were common in Java projects.

I ran the script looking for target/ directories, and accidentally left in place the code that would delete these directories as soon as they were found. Fortunately that version also printed the path to each directory it deleted, and I was able to verify that those were all directories I didn't need.

However, I decided to put some guardrails in place so I wouldn't accidentally delete something I wanted to keep. I added a few CLI args:

```python
import argparse
import time

parser = argparse.ArgumentParser()
parser.add_argument("--delete", action="store_true")
parser.add_argument("-v", action="store_true")
parser.add_argument("--dirs", action="store_true")
parser.add_argument("--files", action="store_true")
args = parser.parse_args()

# Pause to allow canceling here:
if args.delete:
    print("Deleting data in 3s...")
    time.sleep(3)

...
```

I wasn't trying to make a script I could share widely. I was just trying to put a few flags in place that let me run the script in an exploratory way most of the time, but also do some automated deletion when I was sure that's what I wanted to do. This is one of the points I hope people take away from this post: if you're familiar with modules like argparse, you can quickly build your own "throwaway" CLI tools for small but important tasks.

I added a bit of code so the script would only delete data if the --delete flag was explicitly included. If that flag was used, I'd also see a 3-second warning. This was enough time to quickly hit Control-C if I hadn't actually meant to delete files. I felt much safer running the script in an exploratory manner after this.
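The same idea can be pushed into a small helper, so every deletion goes through one guarded code path. This is a sketch, not the original script: remove_paths() and the --wait option are hypothetical additions, and the default three-second pause mirrors the Control-C window described above.

```python
import argparse
import shutil
import time
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser()
    # Deletion is opt-in; without --delete the script only reports.
    parser.add_argument("--delete", action="store_true")
    # Hypothetical option: seconds to wait before deleting, so Ctrl-C can cancel.
    parser.add_argument("--wait", type=float, default=3.0)
    return parser.parse_args(argv)


def remove_paths(paths, delete: bool, wait: float = 3.0) -> int:
    """Report paths, or delete them after a pause; returns the number deleted."""
    # dict.fromkeys() drops duplicates while keeping the original order.
    paths = list(dict.fromkeys(paths))
    if not delete:
        for path in paths:
            print(f"Would delete: {path}")
        return 0

    print(f"Deleting {len(paths)} paths in {wait:g}s... (Control-C to cancel)")
    time.sleep(wait)
    deleted = 0
    for path in paths:
        if not path.exists():
            continue  # already gone, e.g. inside a directory removed earlier
        print(f"Deleting: {path}")
        if path.is_dir() and not path.is_symlink():
            shutil.rmtree(path)
        else:
            path.unlink()
        deleted += 1
    return deleted
```

In a script, this might be called as remove_paths(found, delete=args.delete, wait=args.wait) after args = parse_args().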

A bunch more unneeded files

I found a lot more files I didn't need to keep around by entering various patterns in the call to rglob(). I looked for temp files ending in ~, along with a variety of other formats. I found some build/ and dist/ directories, and a number of folders containing libraries that I'd never need again, or could download if I did need them. I found some .log files, a bunch of .DS_Store files, and many other dot files I'd never need again. I also deleted old etc/, local/, and var/ directories from various Linux systems I had backed up over the years.
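Here's a sketch of that pattern-driven search, reporting matches per pattern so each category can be reviewed before anything is deleted. The pattern list is an assumption modeled on the kinds of files mentioned above. Names like build and dist can also be legitimate project folders, so the output is a list to check, not a list to delete.

```python
from pathlib import Path

# Hypothetical pattern list, modeled on the cruft described above.
CRUFT_PATTERNS = ["*~", ".DS_Store", "*.log", "build", "dist"]


def find_cruft(root: Path, patterns=CRUFT_PATTERNS) -> dict[str, list[Path]]:
    """Map each glob pattern to the sorted paths it matches anywhere under root."""
    return {pattern: sorted(root.rglob(pattern)) for pattern in patterns}


def print_cruft_report(found: dict[str, list[Path]]) -> None:
    """Print a count per pattern, followed by each matching path."""
    for pattern, paths in found.items():
        print(f"{pattern!r}: {len(paths):,} matches")
        for path in paths:
            print(f"    {path.as_posix()}")
```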

After all this work, the archive was down to about 112k files and folders. I should clarify that my goal was not so much to save space in the archive, but more simply to reduce the clutter in the archive. An archive with only files you might actually want is much more usable than one that has everything you've ever thrown into a junk drawer.

Duplicate images

The last thing I was interested in was deleting duplicate files. I knew I had some redundant photo archives from multiple migrations across laptops and external drives over the years.

You might have heard the term deduplication; it means something very different in the corporate world than in a personal archiving workflow. For example, a service like GitHub doesn't want to store 100,000 copies of Bootstrap. At that scale, deduplication means detecting identical content, storing it once, and replacing the other copies with pointers to the centrally stored version.

In my case, I just wanted to find out where my redundant directories were. I don't really care if some photos are stored in multiple places; that's quite common. For example, I have a bunch of photo dumps from different cameras, and some of those photos have been copied into curated collections. I still want the originals in the dump directories, and the copies in the curated collections. But if I have entire photo dumps in multiple places, or redundant versions of the same collections, I want to know about those.

How many image files?

First, I was curious to see how many image and video files I had in the archive. Here's a block that gave me a rough sense of what I had:

```python
file_exts = [".jpg", ".mp4", ".xcf", ".avi"]
file_paths = [
    path
    for path in path_ext_drive.rglob("*")
    if path.suffix.lower() in file_exts
]
print(f"Found {len(file_paths):,} files.")
```

This block walks every path in the archive with rglob("*"), and keeps only the ones whose lowercased extension is listed in file_exts. Running this code, I found almost 26,000 files with these specific extensions.
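To see how that total breaks down by format, collections.Counter can tally the matches per extension. A minimal sketch, assuming the same extension list as above; the function name is hypothetical.

```python
from collections import Counter
from pathlib import Path


def count_by_extension(root: Path, exts=(".jpg", ".mp4", ".xcf", ".avi")) -> Counter:
    """Tally files under root whose lowercased extension is in exts."""
    return Counter(
        path.suffix.lower()
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in exts
    )
```

Iterating over count_by_extension(path_ext_drive).most_common() would list the formats from most to least common.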

We can use set() to find out how many of these files have unique names:

```python
unique_names = set(p.name for p in file_paths)
print(f"Unique names: {len(unique_names):,}")
```

This showed just over 12,000 unique filenames. That's roughly a lower bound on the number of distinct files, assuming duplicate copies kept their original names, but it doesn't tell us much about which files are actually duplicates. For example, there are a number of different video files all named VID00001.MP4, because I never went back and renamed those files.
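Since filenames can't settle the question either way, the usual way to find truly identical files is to compare their contents by hash. This is a swapped-in technique, not what the original script did: a hedged sketch that groups files by size first (files of different sizes can't be identical), and only hashes files whose size matches another file's, using SHA-256 from hashlib. The function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks, so large videos don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def duplicate_groups(paths) -> list[list[Path]]:
    """Return groups of two or more files with byte-identical contents."""
    # Cheap first pass: only files that share a size can be duplicates.
    by_size = defaultdict(list)
    for path in paths:
        by_size[path.stat().st_size].append(path)

    by_hash = defaultdict(list)
    for same_size in by_size.values():
        if len(same_size) > 1:
            for path in same_size:
                by_hash[sha256_of(path)].append(path)
    return [group for group in by_hash.values() if len(group) > 1]
```

For a photo archive, many matches are expected and fine, such as originals in a dump directory with copies in a curated collection.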

Finding duplicate directories

I was much more interested in finding duplicate directories than duplicate files. I wanted to know where I had backed up the same sets of images or videos, or any kinds of documents, multiple times.

To find these directories, I decided to build a dictionary called contents_dirs:

```python
contents_dirs = {}
for dir_path in all_dirs:
    contents = frozenset(
        path.name for path in dir_path.rglob("*")
    )
    if contents not in contents_dirs.keys():
        contents_dirs[contents] = [dir_path]
    else:
        contents_dirs[contents].append(dir_path)
```

This code sets up an empty dictionary, contents_dirs. It then loops over all_dirs, a list of directories that had already been filtered to include only directories that contain files and no nested directories.

Inside the loop, a generator expression yields the name of every file in the current directory:

```python
path.name for path in dir_path.rglob("*")
```

It then creates a frozenset from these filenames. A frozenset is an immutable set, much as a tuple is an immutable list. Because it can't be mutated, it's hashable, which means we can use it as a key in a dictionary.
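A quick illustration of why a frozenset works as a key here: two directories that list the same filenames in a different order produce equal frozensets, and equal frozensets hash the same, so they land on the same dictionary key. The filenames and the "dir_a" label are made up for illustration.

```python
# Filenames from two directories, collected in different orders.
names_a = frozenset(["IMG_0001.jpg", "IMG_0002.jpg"])
names_b = frozenset(["IMG_0002.jpg", "IMG_0001.jpg"])

# Equal frozensets have equal hashes, so either one finds the same key.
seen = {names_a: ["dir_a"]}
print(names_b in seen)  # True

# A plain set is mutable, so Python refuses to hash it.
try:
    hash({"IMG_0001.jpg"})
except TypeError as exc:
    print(f"TypeError: {exc}")
```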

If the contents of the current directory aren't already being used as a key in the contents_dirs dictionary, we add a new key-value pair. The key is the contents frozenset, and the value is a list containing just the current directory path.

If this set of contents is already a key in the dictionary, we append the current directory path to the list of directories that match that set of contents. These should be redundant directories.
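The code above relies on all_dirs, which had already been filtered down to directories containing files but no subdirectories; that filtering step isn't shown. Here's one hypothetical way to build such a list, along with a defaultdict version of the same grouping. Since a leaf directory holds only files, iterdir() gives the same names rglob("*") would, without a recursive walk. Both function names are assumptions.

```python
from collections import defaultdict
from pathlib import Path


def leaf_dirs(root: Path) -> list[Path]:
    """Directories below root that hold at least one entry and no subdirectories."""
    leaves = []
    for d in root.rglob("*"):
        if d.is_dir():
            children = list(d.iterdir())
            if children and not any(c.is_dir() for c in children):
                leaves.append(d)
    return leaves


def matching_dirs(dirs) -> list[list[Path]]:
    """Group directories by the frozenset of filenames they contain.

    Only groups with two or more directories are returned. Matching names
    suggest, but don't prove, matching contents.
    """
    by_contents = defaultdict(list)
    for dir_path in dirs:
        contents = frozenset(p.name for p in dir_path.iterdir())
        by_contents[contents].append(dir_path)
    return [sorted(group) for group in by_contents.values() if len(group) > 1]
```

Calling matching_dirs(leaf_dirs(path_ext_drive)) would then return one list per set of likely duplicates, each of which still deserves a look before anything is deleted.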

The first time I ran this code, I was curious to see how many redundant directories it would identify. I was quite surprised to see over 3,200 identical directories, and assumed I had made some kind of logical error. It turns out Git has an objects/ directory whose contents are a bunch of folders, many with one file each. Together, the folder name (the first two characters of the hash) and the filename (the rest of the hash) identify a specific object in the repository. Identical content always produces the same hash, so two repositories containing the same file will have the same folder name and filename combination. So, for example, any repositories that had the same CSS file from Bootstrap would have some matching directories.
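Excluding anything under a .git/ directory is easiest with Path.parts, which splits a path into its components. Checking membership in parts matches the directory name exactly, so a file like .gitignore isn't caught the way a plain substring test on the path string would catch it. A minimal sketch with a hypothetical helper name and made-up paths:

```python
from pathlib import Path


def outside_git(paths) -> list[Path]:
    """Keep only paths with no ".git" component anywhere in them."""
    return [p for p in paths if ".git" not in p.parts]


paths = [
    Path("repo/.git/objects/ab"),
    Path("repo/.gitignore"),
    Path("photos/Christmas 2008"),
]
print(outside_git(paths))
```

The opposite test, sum(1 for p in paths if ".git" in p.parts), counts how many paths live inside repositories.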

After excluding .git/ dirs and a number of other kinds of directories that had good reasons to be redundant, I had a couple hundred identical directories. I generated some output that showed which directories were likely to have matching contents:

```
$ python dir_deduplicator.py
all files dirs: 103,616
Found 17,193 directories.
Found 15,608 dirs with no nesting
Found 2,070 dirs outside of .git/ dirs
Found 200 sets of matching dirs.

Matching dirs:
old_hdd_backups/.../old_photos/Christmas 2008
old_hdd_backups/.../pangolin_archive/photos/Christmas 2008

Matching dirs:
...
```

This is exactly what I was hoping to see. As an example, I had redundant copies of pictures from "Christmas 2008". That redundancy across drives was a good thing, but now that I'm reorganizing my archive, I don't need redundancy within the archive. This approach also identified directories that had identical contents but different directory names.

I didn't do any more automated deletion. I just opened two Finder windows side by side, looked at both of these directories, and chose which one to keep. Every time I re-ran this code, I could see the number of redundant directories dropping. In the end I went from about 25,000 image and video files to about 20,000. Most importantly, I ended up with an archive that's much more organized and usable.

Wrapping up

When I was finished getting rid of duplicate directories, I was curious to see what I was left with. I ended up with just over 90,000 files. About 20,000 of those are images or videos; that's not too surprising for 15+ years of digital images.

Interestingly, almost 50,000 paths in the archive have .git somewhere in the path. I'm happy to keep those files around; they don't take up a whole lot of space, and I know exactly what they're there for. It's really nice to know I can drop into any project from the last 15 years of programming and see that project's entire history with a single git log command. Git really does feel like magic across a period of decades.

Conclusions

If you've got a pile of drives in a closet somewhere, I highly encourage you to move them all onto a handful of newer, larger drives. Tools like pathlib make it much easier to explore your archive, and to make meaningful decisions about how to manage it going forward.

My archive is much more usable now. It's all in one place, which makes it easier to poke around and find old work and images I'm interested in.[2] Whenever I want, I can easily go in and do some more cleanup. For example, I'll probably reorganize the entire archive at some point into a simple set of projects/, images/, videos/, and documents/ directories. That work will be much more manageable now that I know most of the redundancy and cruft has already been removed.

[1] If you're currently backing up your virtual environments, you should probably stop doing so. Virtual environments are meant to be "disposable", because you should be able to recreate them at any time. They're also not portable, so it's quite likely a backed-up virtual environment won't actually be usable.

How to exclude virtual environments from backups depends on your backup routine, and how you build your environments. You'll typically specify something like .venv/ in an ignore file, or an excludes file.

A single environment can contain on the order of 10,000 files, so it's worth figuring out how to exclude them from your backups.
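As one example of that kind of exclusion, Python's shutil.copytree() accepts an ignore callable, and shutil.ignore_patterns() builds one from glob-style names. This is a sketch for a simple copy-based backup, not a description of any particular backup tool; the pattern list and the backup_project() name are assumptions. Dedicated backup tools have their own exclude settings, which are usually the better place for this.

```python
import shutil
from pathlib import Path

# Names skipped at every level of the tree (assumed list; adjust as needed).
SKIP = shutil.ignore_patterns(".venv", "*_env", "*venv", "__pycache__", "*.pyc")


def backup_project(src: Path, dest: Path) -> None:
    """Copy src to dest, leaving out virtual environments and bytecode."""
    # Ignored directories are never descended into, so their files aren't copied.
    shutil.copytree(src, dest, ignore=SKIP, dirs_exist_ok=True)
```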

[2] The archive is on two identical drives, which I periodically rotate. I keep one connected to my main computer for a while, running regular backups. After a week or two, I swap it out with the other drive. They stay mostly consistent, never getting out of sync by more than a week or two.