Grounding yourself as a programmer in the AI era, part 6

MP #28: Reflections on the impact of AI on programming and programmers.

Note: This is the final post in a 6-part series about starting to integrate AI tools into your programming workflow.

The programming world has shifted in the last six months. We’ve been building and using steadily more useful tools for decades, but the advent of AI assistants is a much more significant change than we’ve seen in a long time. This series is part of an ongoing attempt to take a grounded look at what’s changing, what’s staying the same, and what we should all be doing about it.

Between two extremes

No one knows exactly what programming will look like in the next one to five years. Anyone making claims with any degree of certainty about what’s to come is almost certainly overconfident, or trying to sell something. It’s helpful to set up some boundaries about how much things are likely to change. The two extreme takes are simple:

No one will need to program anymore because AIs will do everything.

Nothing is changing because these tools are not actually all that useful.

I feel quite comfortable asserting that reality lies somewhere between these two takes. Yes, it’s still very much worth your while to continue learning about programming. And yes, things really have changed in a fundamental way.

Reflections on building py-image-border

In the previous posts in this series I wrote a small tool to add borders to images, because I’ve been needing to add borders to screenshots lately and macOS doesn’t have a built-in tool for this. I wrote the initial working version by hand and then went through a refactoring process with and without an AI assistant. In retrospect, this has been a really helpful exercise in assessing the impact of AI tools.

[Some] Code for everyone?

One of the clearest takeaways is so profound, it still makes my head spin when I stop to think about it. py-image-border is exactly the kind of small tool that has been trivial for a reasonably competent programmer to build, because there are so many good third-party packages like Pillow available to work with. It’s also the kind of utility program that has historically been completely out of reach of non-programmers. It’s almost always too expensive for non-programmers to pay someone to build a tool like this, even though it’s not much more than an afternoon project. But, this is the kind of tool that anyone with access to GPT can now easily build.

The focus of this series was on refactoring, but I ran a quick test to see what GPT would have come up with on its own, given nothing more than a request for code that would add a border to an image. The code that GPT generated didn’t exactly match what I came up with, and I have mixed feelings about how much to use GPT-generated architecture, but the bigger takeaway is that GPT is capable of generating fully functional, nontrivial programs.1 And if you have no programming background at all and tell GPT that, it will tell you step by step what to do in order to make use of the code on your own system. You need to be able to write clearly in order to get GPT to generate what you want it to, but for a whole class of smaller problems you don’t need to understand the actual code that's generated.

PyCon conversations

I just got back from PyCon, and one of my clearest goals this year was to get a sense of what people are actually experiencing related to AI, away from the hype of marketing and blog posts. This refactoring experiment was helpful in talking clearly with people about the effectiveness of the AI tools that are available today.

I was surprised to find more people who seemed to underestimate the impact of AI tools than people who were overestimating their impact. I think this comes from people finding small things that AI tools either get wrong, or are inconsistent about. People then use those failures or inconsistencies as a basis for dismissing AI tools as nothing more than hype.

Takeaways from a more complex use case

I have a quick example of the kind of experience and mindset that seems to be leading many experienced programmers to dismiss AI too easily. I’m working on a more complex project, django-simple-deploy. This tool automates Django deployments to a variety of platforms. It runs on the user’s local system, and configures their project for deployment to the platform of their choice.

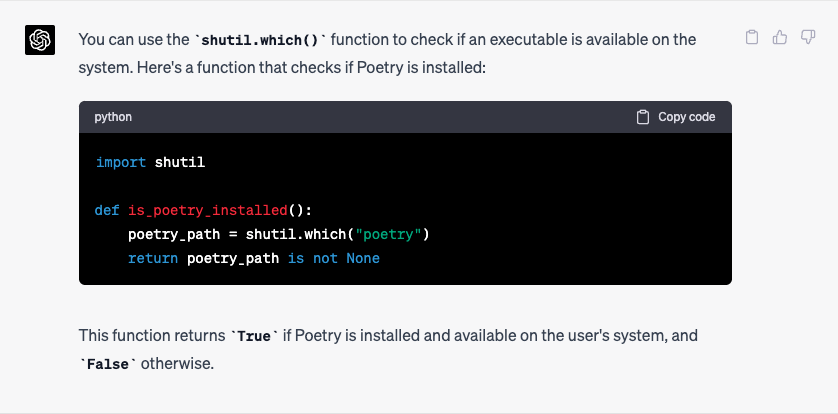

Because it works on the user’s system, simple_deploy needs to support whichever package management system the person is using: a bare requirements.txt file, Poetry, or Pipenv for now. My approach to checking whether Poetry is installed on the user’s system wasn’t working well, because running poetry --version can generate different output on different systems, and different output depending on which directory you run it from.2 I asked GPT if there was a better way to find out if Poetry is installed, and it suggested this much better approach:

    import shutil

    def is_poetry_installed():
        poetry_path = shutil.which("poetry")
        return poetry_path is not None

This is fantastic: it’s cross-platform, and it just looks for the availability of the poetry executable instead of trying to parse CLI output.3

I then asked it how to detect if Pipenv is installed and it gave me a much longer, clunkier approach. Its solution was worse than what I had come up with. After a little back and forth, it turns out the same one-liner using shutil.which() works for Pipenv. GPT said that it first suggested a longer approach with Pipenv because it was “trying to provide a thorough explanation”. These inconsistencies seem to put some people off from seeing any real value in these tools.
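Since the same shutil.which() check works for both tools, it can be written once and reused. Here is a minimal sketch of that idea. The names is_tool_installed() and detect_package_managers() are hypothetical, made up for this illustration; they are not taken from django-simple-deploy or from GPT's output.

```python
import shutil


def is_tool_installed(name: str) -> bool:
    """Return True if an executable called `name` is found on the PATH."""
    # shutil.which() returns the executable's path, or None if it isn't found.
    return shutil.which(name) is not None


def detect_package_managers() -> dict[str, bool]:
    """Report which of the two CLI-based package managers are available.

    Hypothetical helper for illustration only.
    """
    return {tool: is_tool_installed(tool) for tool in ("poetry", "pipenv")}


if __name__ == "__main__":
    for tool, found in detect_package_managers().items():
        print(f"{tool}: {'found' if found else 'not found'}")
```

Like the original snippet, this only tells you whether the executable is on the user's path. It says nothing about which version is installed, or whether the current project actually uses that tool.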

The uncomfortableness of non-determinism

For anyone unfamiliar with the term, a deterministic system is one that always gives you the same result when you provide the same input. GPT, and other generative AI tools, are decidedly not deterministic. I think this makes a lot of experienced programmers uncomfortable. We've gotten so used to deterministic systems, and so wary of non-deterministic systems, that many programmers simply dismiss any tool that is not immediately consistent.4 This is especially true if you ask GPT a question about a complex project, without providing it enough context.
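To make the distinction concrete, here is a small sketch contrasting a deterministic function with one that samples its answer. This is only a loose analogy, not a description of how GPT works internally. The candidate answers and their weights are invented for the example.

```python
import random


def word_count(text: str) -> int:
    """Deterministic: the same text always yields the same count."""
    return len(text.split())


# Hypothetical candidate answers with made-up weights, for illustration only.
CANDIDATES = [
    "use shutil.which()",
    "parse the CLI output",
    "a longer, more thorough approach",
]
WEIGHTS = [0.6, 0.3, 0.1]


def sampled_suggestion(rng: random.Random) -> str:
    """Non-deterministic: draws one candidate according to the weights."""
    return rng.choices(CANDIDATES, weights=WEIGHTS, k=1)[0]


if __name__ == "__main__":
    # Same input, same output, every time.
    print(word_count("is poetry installed"), word_count("is poetry installed"))

    # Same "question", but the answers can differ from call to call.
    rng = random.Random()  # unseeded, so results vary between runs
    print([sampled_suggestion(rng) for _ in range(5)])
```

The sampled function usually gives the most heavily weighted answer, but not always. That's roughly the experience of asking the same kind of question twice and getting a great answer once and a clunkier one the next time.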

I think there’s one other aspect of people’s dismissal that’s worth mentioning. Many programmers have built relatively nice lives for themselves on the basis of being able to think logically and analytically. Being rational and analytical becomes part of people’s identity. Some people seem to take this a little too far, to the point of being dismissive of any person or system that seems less logical. A lot of people in the programming world seem to be having a hard time wrapping their heads around the effectiveness of a non-deterministic tool. The fact that this tool, and the people who use it well, may threaten their jobs is a complicating factor in all this. (This perspective was not clear to me from online-only interactions. This was only apparent after a bunch of in-person conversations over the course of a week-long conference.)

Back to the value of py-image-border as an example

When talking to people about such a significant but abstract issue, it’s helpful to have a concrete example to focus the conversation. The py-image-border project was a perfect example for having grounded conversations with people. When people hadn’t used AI tools at all, or had used them briefly but already dismissed them, I shared a brief summary of this project. The main point I shared was that a skill that used to be exclusive to programmers, the ability to make a small utility that lets you work more efficiently, was now available to anyone with access to GPT. No-code tools have overpromised their capabilities for decades. I think a good take on AI is that we finally have a no-code, natural language tool for doing very general work. It’s truly incredible, and having a concrete example of something that was impossible for non-programmers just six months ago, but easy for them today, made people stop and reconsider their assessment of the impact these tools will have.

Less hype than expected

Over a week of in-person conversations, including many conversations with the people who are actually building these tools, I heard way less hype than I expected. I think there are two reasons for this. For one thing, PyCon tends to draw people, including sponsors, who don’t try to oversell the capabilities of what they’re offering. There are enough informed people around that you’d look silly pretty quickly if you oversold your company’s product too much.

But, much more importantly, the people who are actually building and using AI tools on a daily basis don’t need to hype anything. They know these tools aren’t perfect, but they also know that they and many others are getting really important things done using them. They see the flaws in the models, and they know how to work around them and sometimes with them.

I don’t love AI

Please don’t get me wrong. I'm now firmly in the camp that believes AI tools are incredibly helpful. But I don’t blindly love them. They are already disrupting many people’s lives for the worse, and they will continue to do so for some time yet. For many reasons, however, this is a genie that cannot be put back in the bottle. With this practical mindset, there’s not much left to do but make sense of these tools, and start to use them appropriately.5

Conclusion

My conclusions from this experiment and from watching this space for another six weeks are quite clear. When you have the chance, start to explore AI tools in your own ways. Don’t just copy and paste what they first give you, and be careful about dismissing their missteps too quickly. These tools are highly capable, but they make mistakes and sometimes focus on the wrong ideas. If you keep steering them in the right direction, you’ll almost certainly work at a higher level than you could without them.

At the same time, continue to learn from reliable sources. Read books, newsletters, blog posts, and documentation. Watch videos and talks. Speak with your colleagues, and definitely ask people who aren’t focused on tech about their experiences with AI as well. AI tools are part of today’s world, not tomorrow’s. The sooner you can make sense of their capabilities and their limits, the better you’ll be able to navigate our rapidly-changing world.

Many people are trying to make sense of AI tools. If this post has been helpful to you, please consider sharing it.

If you’re a programmer, I think it’s good to at least sketch out the architecture you think might be appropriate for a new project. If GPT gives you something better than that, run with it. If not, steer GPT toward an architecture you know will support your long term vision for the project. ↩

I believe I’m reporting this accurately. I ran a simple command, which I believe was poetry --version. In one directory it gave me the expected output, but in a different directory I got a message about what it found in that directory’s pyproject.toml file. The larger point is that my approach was brittle, and the approach that GPT suggested was simpler and more reliable. ↩

This may need to be modified if the user doesn’t have poetry on their path, but it’s still a much better approach to build on than what I was trying to do. ↩

I took this stance in an earlier post as well, but that was before GPT-4 was widely available. ↩

The current capabilities we’re seeing can’t be walked back. It turns out that while it was extremely expensive to develop the first truly capable models, it’s much cheaper than most people thought to build on what’s already been achieved. People are running increasingly capable models on smaller and smaller systems, including phones and Raspberry Pis.

We can consider regulating how AI tools are used, and what they’re connected to. We can try to regulate the development of next-generation expensive-to-build models, but we can’t realistically take back what’s already been put out there. ↩