Exploring recent Python repositories

MP 34: Using the GitHub API to find newer Python projects that are starting to gain popularity.

Note: I’ve been busier than usual over the last few weeks, so the series about OOP (object-oriented programming) will begin in July instead of this month. If you have any questions or topics you’d like to see addressed in that series, please feel free to share them here.

GitHub has a really responsive Search page, but you can also explore the full set of public repositories through its API.1 In this post we’ll use the API to find some of the most popular newer projects on GitHub. This can be an interesting way to see what kinds of projects people are building with Python. It can also be a good way to find projects to either use in your own work, or consider contributing to.

In the next post, we’ll focus on brand-new projects that haven’t yet achieved any popularity. There’s just as much to learn from looking at those kinds of projects.

I’m going to do this work in a Jupyter notebook, because that will make it easier to explore the repositories that are returned. You can find the full notebook here; if you run the notebook you can click on any of the repositories that are returned by the queries that follow.2

The most popular Python projects over the last year

To start out, let’s make a request that answers the following question:

What are the most popular Python projects that were created in the last year?

To answer this question, we’ll write a query that returns all the Python projects that were created in the last year and that have accumulated more than 1,000 stars.3

The first cell imports requests, and sets the headers that are needed for the API calls:

    import requests

    # Define headers.
    headers = {
        "Accept": "application/vnd.github+json",
        "X-GitHub-Api-Version": "2022-11-28",
    }

These headers indicate that we want the results returned as JSON, and specify the current version of the API.
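Unauthenticated requests to the search endpoint are rate-limited more tightly than authenticated ones. If you run into that limit while experimenting, one option is to send a personal access token along with the other headers. The following is a minimal sketch, not part of the original notebook; the `build_headers()` helper and the `GITHUB_TOKEN` environment variable name are assumptions made for illustration:

```python
import os


def build_headers(token=None):
    """Return API headers, adding a token only if one is available."""
    headers = {
        "Accept": "application/vnd.github+json",
        "X-GitHub-Api-Version": "2022-11-28",
    }
    # Assumed convention: fall back to an environment variable
    # named GITHUB_TOKEN if no token is passed in directly.
    token = token or os.environ.get("GITHUB_TOKEN")
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return headers


headers = build_headers()
```

Without a token, this produces the same two headers shown above, so the rest of the code works either way.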

Next, we’ll write a function we can use to issue a request for a specific query:

    def run_query(url):
        """Run a query, and return list of repo dicts."""
        print(f"Query URL: {url}")
        r = requests.get(url, headers=headers)
        print(f"Status code: {r.status_code}")

        # Convert the response object to a dictionary.
        response_dict = r.json()

        # Show basic information about the query results.
        print(f"Total repositories: {response_dict['total_count']}")
        complete_results = not response_dict['incomplete_results']
        print(f"Complete results: {complete_results}")

        # Pull the dictionaries for each repository returned.
        repo_dicts = response_dict['items']
        print(f"Repositories returned: {len(repo_dicts)}")

        return repo_dicts

This function issues the actual request. It displays the URL, and shows the status code as well. It then converts the JSON response to a Python dictionary, and prints the total number of repositories.

The API returns a value labeled incomplete_results. Printing the negation of this value lets us know whether we got a complete set of results. The code for this looks a little confusing, but it results in more readable output: True indicates that we did in fact get a complete set of results. You can get incomplete results if your query takes too long to run, for example if a large number of repositories match your query parameters.

The function returns a list called repo_dicts. This is a list of dictionaries, where each dictionary contains information about a single repository.
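One thing run_query() doesn't handle is a failed request. An error response from the API contains a message rather than search results, so looking up 'total_count' would raise a KeyError. Here's a rough sketch of a more defensive way to unpack a response; `parse_search_response()` is a hypothetical helper, and the sample dictionary is made up to match the keys used above:

```python
def parse_search_response(status_code, response_dict):
    """Return (total_count, complete, repo_dicts), or raise on an error."""
    if status_code != 200:
        # Error responses carry a 'message' key instead of results.
        message = response_dict.get("message", "no message provided")
        raise RuntimeError(f"Request failed ({status_code}): {message}")

    complete = not response_dict["incomplete_results"]
    return response_dict["total_count"], complete, response_dict["items"]


# Made-up sample data, shaped like a successful search response.
sample = {
    "total_count": 2,
    "incomplete_results": False,
    "items": [{"name": "repo_a"}, {"name": "repo_b"}],
}
total, complete, repos = parse_search_response(200, sample)
print(total, complete, len(repos))
```

For exploratory work in a notebook the simpler version is usually fine, but a check like this makes rate-limit errors easier to recognize.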

The last function we need summarizes information about a set of repositories:

    def summarize_repos(repos):
        """Summarize a set of repositories."""
        for repo in repos:
            name = repo['name']
            stars = repo['stargazers_count']
            owner = repo['owner']['login']
            description = repo['description']
            link = repo['html_url']

            print(f"\nRepository: {name} ({stars})")
            print(f" Owner: {owner}")
            print(f" Description: {description}")
            print(f" Repository: {link}")

This function takes in a list of dictionaries representing repositories. It loops over the dictionaries, and prints selected information about each repository. We’ll see the repository name, how many stars it has, who owns it, a description, and a link to the repository.

Now we’re ready to issue a query:

    url = "https://api.github.com/search/repositories"
    url += "?q=language:python+stars:>1000"
    url += "+created:2022-06-01..2023-06-01"
    url += "&sort=stars&order=desc"

    repo_dicts = run_query(url)
    summarize_repos(repo_dicts)

This URL is a query that searches over all the repositories on GitHub. It selects only repositories whose primary language is Python and that have more than 1,000 stars. It only includes repositories created in the last year, and the results will be sorted by the number of stars in descending order:

    Query URL: https://api.github.com/...
    Status code: 200
    Total repositories: 386
    Complete results: True
    Repositories returned: 30

    Repository: Auto-GPT (138883)
     Owner: Significant-Gravitas
     Description: An experimental open-source attempt to make GPT-4 fully autonomous.
     Repository: https://github.com/Significant-Gravitas/Auto-GPT

    Repository: stable-diffusion-webui (82609)
     Owner: AUTOMATIC1111
    ...

There are only 386 Python repositories created in the last year that have over 1,000 stars. This is a complete set of results, and information about 30 of these repositories was returned in this response.
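The dates in the query above are hard-coded. If you want to re-run this kind of search later, you could compute the date range instead. Here's a small sketch under that assumption; `build_search_url()` is a hypothetical helper, not something from the notebook:

```python
from datetime import date, timedelta


def build_search_url(language, min_stars, start, end):
    """Build a repository search URL, sorted by stars."""
    query = f"language:{language}+stars:>{min_stars}"
    # Dates are formatted as YYYY-MM-DD, joined by two dots.
    query += f"+created:{start.isoformat()}..{end.isoformat()}"

    url = "https://api.github.com/search/repositories"
    url += f"?q={query}&sort=stars&order=desc"
    return url


# Search over the 365 days ending today.
end = date.today()
start = end - timedelta(days=365)
print(build_search_url("python", 1000, start, end))
```

Passing date(2022, 6, 1) and date(2023, 6, 1) reproduces the URL used in this post.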

Unsurprisingly, almost all of these projects are related to AI. I’m interested in using AI to write code more efficiently, but I’m not particularly interested in working directly on AI-focused projects at this point. Let’s see if we can find the non-AI projects that people are working on.

Excluding AI-focused projects

You can add some logic to the query to filter out repositories meeting certain criteria. Let’s modify the query to exclude repositories containing some of the most common AI-related terms in the project name or description:

    url = "https://api.github.com/search/repositories"
    url += "?q=language:python+stars:>1000"
    url += "+NOT+gpt+NOT+llama+NOT+chat+NOT+llm+NOT+diffusion"
    url += "+created:2022-06-01..2023-06-01"
    url += "&sort=stars&order=desc"

    repo_dicts = run_query(url)
    summarize_repos(repo_dicts)

The API limits you to five AND, OR, or NOT operators in a single query. Including these filters gets rid of a lot of the AI-related repositories, but they still make up a significant percentage of the results.
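If you want to experiment with different exclusion terms, it can help to generate that part of the query from a list and check the operator limit up front. This is only a sketch; `build_not_clause()` and `MAX_OPERATORS` are names I've made up for illustration:

```python
# The search API accepts at most five AND, OR, or NOT operators.
MAX_OPERATORS = 5


def build_not_clause(terms):
    """Return a query fragment that excludes each of the given terms."""
    if len(terms) > MAX_OPERATORS:
        msg = f"Too many terms: {len(terms)} (limit is {MAX_OPERATORS})."
        raise ValueError(msg)
    return "".join(f"+NOT+{term}" for term in terms)


excluded = ["gpt", "llama", "chat", "llm", "diffusion"]
print(build_not_clause(excluded))
```

With these five terms, the function generates the same fragment that appears in the query above.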

To get rid of even more AI-focused projects, let’s write a function that filters out repositories based on some additional terms:

    def prune_repos(repos):
        """Return only non AI-related repos."""
        ai_terms = [
            'gpt', 'llama', 'chat', 'llm', 'diffusion', 'alpaca',
            ' ai', 'ai ', 'ai-', '-ai', 'openai', 'whisper',
            'rlhf', 'language model', 'langchain', 'transformer', 'gpu',
            'copilot', 'deep', 'embedding', 'model', 'pytorch',
        ]

        non_ai_repos = []
        for repo in repos:
            # Check for ai terms in name, owner, and description.
            name = repo['name'].lower()
            if any(ai_term in name for ai_term in ai_terms):
                continue

            owner = repo['owner']['login'].lower()
            if any(ai_term in owner for ai_term in ai_terms):
                continue

            # Prune repos that don't have a description.
            if not repo['description']:
                continue

            description = repo['description'].lower()
            if any(ai_term in description for ai_term in ai_terms):
                continue

            non_ai_repos.append(repo)

        print(f"Keeping {len(non_ai_repos)} of {len(repos)} repos.")
        return non_ai_repos

This code could certainly be more efficient and concise, but in exploratory work I usually prioritize readability and don’t do a whole lot of refactoring.4

We start with a list of AI terms, which I’ve come up with by looking at the kinds of repositories I want to filter out. We make an empty list, non_ai_repos, where we’ll store the repositories that pass the filter. Then we loop over the repos, and continue the loop whenever we detect an AI-related term in the repository name, owner’s name, or description. We also prune any repos without a description, to avoid an error when comparing the search strings against a description that is actually the None object. We’re also not going to learn much by looking at repositories that don’t have a description.

Any repository that passes all these checks is added to non_ai_repos, which is returned at the end of the function.
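For comparison, here's roughly what a more concise version of the same filter might look like. This is a sketch rather than the code from the notebook; `is_ai_related()` and `prune_repos_concise()` are hypothetical names, the term list is passed in as an argument, and the sample repositories are made up:

```python
def is_ai_related(repo, ai_terms):
    """Return True if any term appears in the name, owner, or description."""
    fields = [repo["name"], repo["owner"]["login"], repo["description"] or ""]
    # Join with newlines so a term can't match across two fields.
    # (This assumes none of the terms contain a newline.)
    text = "\n".join(fields).lower()
    return any(term in text for term in ai_terms)


def prune_repos_concise(repos, ai_terms):
    """Keep repos that have a description and match no AI terms."""
    return [
        repo for repo in repos
        if repo["description"] and not is_ai_related(repo, ai_terms)
    ]


# Made-up sample data for illustration.
sample_repos = [
    {"name": "pixel-font", "owner": {"login": "someone"},
     "description": "A monospaced programming font"},
    {"name": "chat-helper", "owner": {"login": "someone"},
     "description": "A small client"},
    {"name": "mystery", "owner": {"login": "someone"},
     "description": None},
]
kept = prune_repos_concise(sample_repos, ["gpt", "chat", " ai"])
print([repo["name"] for repo in kept])
```

The second repository is dropped because of its name, and the third because it has no description, so only the first one is kept.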

We can also increase the number of repositories that we’re getting detailed information about, from the default of 30 to a max of 100:

    url = "https://api.github.com/search/repositories"
    ...
    url += "&sort=stars&order=desc"
    url += "&per_page=100&page=1"

    repo_dicts = run_query(url)
    pruned_repos = prune_repos(repo_dicts)
    summarize_repos(pruned_repos)

The per_page parameter tells GitHub how many results to return for this API call, and the page parameter tells it which page of results to return. After running the query we call prune_repos(), and then summarize the projects that remain after the pruning process.

The pruning process leaves 36 repositories:

    Query URL: https://api.github.com...
    Status code: 200
    Total repositories: 203
    Complete results: True
    Repositories returned: 100
    Keeping 36 of 100 repos.

    Selected information about each repository:
    ...

There are still a number of AI-related projects in these results, but there are enough non-AI projects here that this is good enough for my purposes. If you wanted to take this further you could continue to add terms to the pruning filter. You could then request additional pages of results, and compile a longer list of non-AI focused repositories to look through.
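If you do want to request additional pages, you can work out how many pages there are from the total count that's reported with the first response. Here's a minimal sketch of that calculation; `page_urls()` is a hypothetical helper, and it assumes the base URL already contains the query string:

```python
import math

# The search API makes at most 1,000 results available per query.
MAX_SEARCH_RESULTS = 1000


def page_urls(base_url, total_count, per_page=100):
    """Return one URL for each page of results that can be requested."""
    reachable = min(total_count, MAX_SEARCH_RESULTS)
    num_pages = math.ceil(reachable / per_page)
    return [
        f"{base_url}&per_page={per_page}&page={page}"
        for page in range(1, num_pages + 1)
    ]


base_url = "https://api.github.com/search/repositories?q=language:python"
for url in page_urls(base_url, total_count=203):
    print(url)
```

For the 203 repositories in the results above, this generates three URLs. You could pass each one to run_query() and prune the combined results, keeping in mind that each request counts against the rate limit.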

Looking at the projects

There are some interesting projects in here. Feel free to look through the full set of results, but here are some of the projects that stand out to me:

pynecone

Pynecone is a newer framework for building web apps. Django and Flask have been the dominant web frameworks for a long time, and if you’re looking for stability you won’t go wrong with either of those. But people are constantly looking at new ways to build modern apps and websites. Pynecone is one of the more prominent new frameworks, and if you’re interested in web development you might be curious to check it out.

Monocraft

If you like Minecraft, Monocraft is a programming font that’s inspired by the Minecraft typeface.

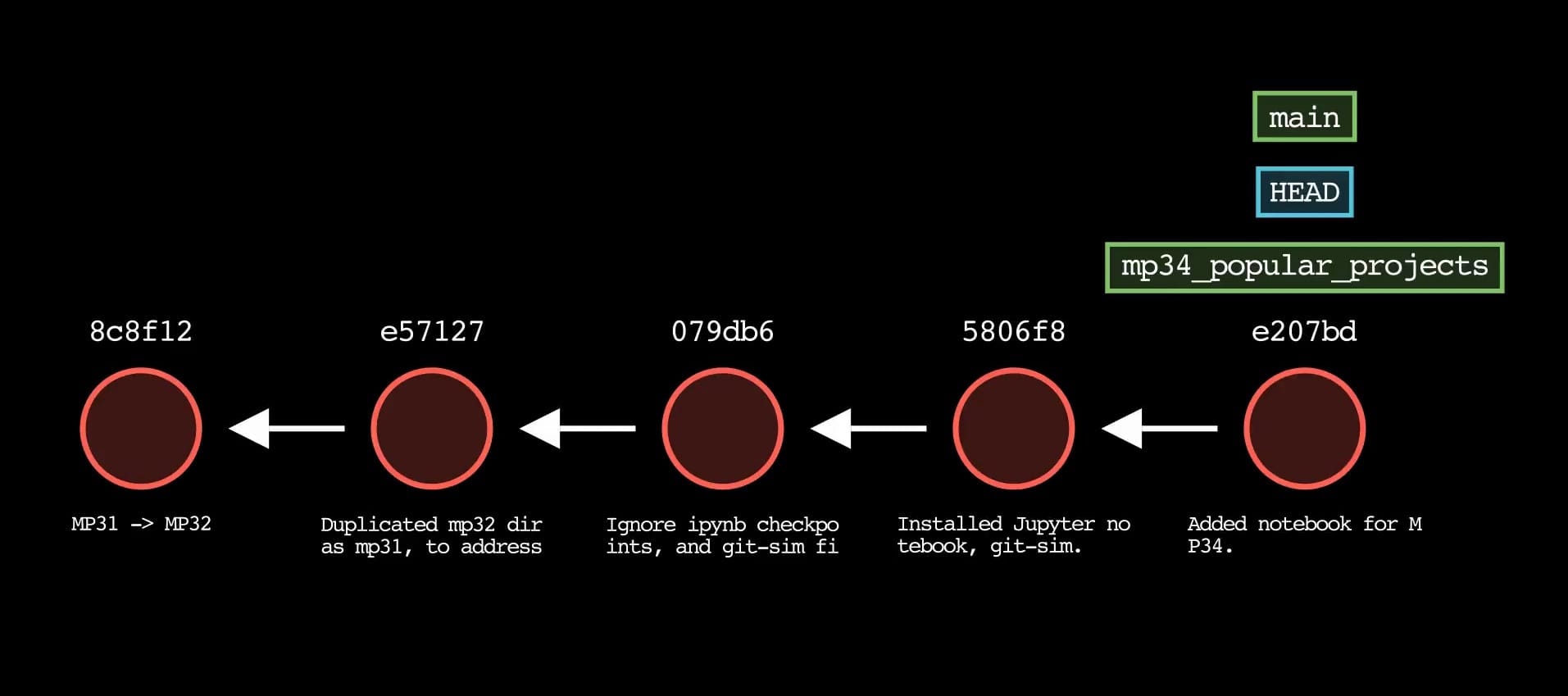

git-sim

I’m really intrigued by this project. git-sim generates visualizations of Git commands against your own projects. For example if you want to see what effect a merge operation will have on your project, you can run git-sim merge <branch> and it will generate a visualization of what that merge looks like using your actual commits. This looks like a fantastic project for people who would like to better understand how Git works, and for experienced people to understand what’s happening in a more complex project. To read more about git-sim, see the author’s blog post introducing the project.

Manim

This project was not in the list of repositories returned by GitHub, but I learned about it when looking at the dependencies for git-sim. Manim is a library used for generating mathematical animations. git-sim can generate static images of Git operations, but it can also generate animations of those operations. It uses Manim to generate these animations. This looks like a fantastic library, and it’s a community version of the software that Grant Sanderson uses when making animations for 3Blue1Brown. (If you haven’t heard of 3Blue1Brown before, go watch one of his videos!)

software-papers

This repository contains a curated list of papers that are of interest to software engineers. The papers aren’t specific to Python; this repository shows up in the query results because Python is used to generate the Readme file, and to check the links in the file. If you haven’t made a habit of reading papers, you might find something interesting in this list.

twitter-archive-parser

Twitter hasn’t died yet, but it’s certainly a different place than it was a year ago. If you still haven’t downloaded an archive of your Twitter data, you might want to do so. I haven’t tried it, but it looks like twitter-archive-parser helps you look through your archive once it’s downloaded. This is the kind of project that tends to be brittle, but even if it doesn’t do exactly what it says there are still a bunch of relevant resources for getting and working with your Twitter data.

refurb

Refurb acts on an existing Python codebase. It examines your code, and if there’s a more modern way of doing something it will rewrite your code to use the newer approach. For example if you read a file using a context manager, it will replace that with a simpler path.read_text() call. You should certainly audit a tool like this before using it, and make sure you can easily roll back any changes you don’t like. That said, tools like this are fantastic for helping you work with older codebases. I have a number of stale projects I’m curious to try this project against.
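To make that example concrete, here's the kind of change being described. This snippet is my own illustration of the two styles, not output from Refurb; the file name is made up, and the file is created in a temporary directory so the example can be run on its own:

```python
from pathlib import Path
import tempfile

# Create a throwaway file so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = Path(tmp_dir) / "notes.txt"
    path.write_text("hello\n")

    # Older style: open the file in a context manager, then read it.
    with open(path) as f:
        contents_old = f.read()

    # Newer style: let pathlib open, read, and close the file.
    contents_new = path.read_text()

print(contents_old == contents_new)
```

Both approaches read the same contents; the second one just does it in a single line.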

Contributing to a project

Many people talk about the benefits of contributing to open source projects, but it can be hard to find projects that match your current interests and skillset. It can also be difficult to begin contributing to long-established projects where many of the open issues require a deeper understanding of the inner workings of the project.

Most of the projects here have been created in the last year. Even though they’ve already become popular, they may well have a bunch of open issues that are more accessible than the issues you’ll find on more mature projects. These projects also have newer teams, which can make it easier to begin making more significant contributions. Just in writing this post, I was able to find and report a bug in git-sim, which the owner addressed that same day. I may begin contributing to this project more significantly by helping to get testing off the ground.5

Conclusions

This post has been a fun way to play with the GitHub API, and explore some recent Python projects as well. I hope you’ve found something interesting that you weren’t already aware of, either in the code used to find these projects or in the projects themselves.

In the next post, we’ll look at some of the most recent projects that haven’t achieved any large-scale visibility yet. This turns out to be just as interesting as looking at the most popular newer projects.

Resources

You can find the code files from this post in the mostly_python GitHub repository.

The story of how GitHub built such a responsive Search page is really interesting. There’s an excellent post on the GitHub blog about their process, as well as a talk covering the same material. ↩

I’m using simple code blocks to show the code that’s run in the notebook cells, because I really don’t like using screenshots of code in a post. I hope that Substack improves the formatting available in its code blocks before long.

The following commands will run the notebook from this post locally on a macOS or Linux system:

    $ mkdir popular_repos
    $ cd popular_repos
    $ python3 -m venv .venv
    $ source .venv/bin/activate
    (.venv)$ pip install notebook requests
    (.venv)$ curl https://raw.githubusercontent.com/ehmatthes/mostly_python/main/mp34_popular_projects/recent_popular_python_repos.ipynb --output popular_repos.ipynb
    (.venv)$ jupyter notebook

This will launch a Jupyter notebook session, and you can click on the notebook from this post. You’ll be able to click on links to the repositories that are returned, and you can clear all the output and re-run the cells to generate fresh data. If you’re on Windows the individual steps are slightly different, but this same process should work.

If you don’t have curl installed, you can download the notebook from here, and copy it to the appropriate place on your system. ↩

When evaluating which third-party projects to integrate into a project you’re working on, make sure you look at more than just the number of stars. You should also consider things like how actively the project is being maintained, what the open and closed issues look like, the quality and accuracy of the documentation, and more. ↩

This is especially true when writing for an audience of varying skill and experience levels, and when writing code that will be read in email clients. For example, I prioritize shorter line lengths, which often means defining more temporary variables than I’d normally use. ↩

I ended up submitting a PR for the initial test suite for git-sim, which was merged after finalizing this post. I’ve been looking to apply my understanding of testing to a wider variety of projects, so this was a really satisfying bit of work. The final PR consisted of three clean commits, but I did a whole bunch of development work to get to that clean state. ↩