ChatGPT is not a reliable teacher

MP #9: Be careful trying to learn with ChatGPT.

Note: This post was written before the release of GPT-4. Newer AI models are less likely to offer examples as poor as the ones shown in this post, and they make much better recommendations about which approach to use. That said, especially in more complex projects, they can still end up generating code that is more complex than necessary, and code that is not idiomatic at all. (5/10/23)

It’s quite clear that ChatGPT and its peers are already helping many people work more efficiently. However, most of the people I know who are reporting this kind of experience are already highly skilled programmers, who are quite experienced at evaluating the appropriateness of suggestions from others. To these people ChatGPT is like a colleague who’s always ready to collaborate, has really useful suggestions most of the time, but sometimes makes really bad suggestions. If you can filter the good suggestions from the bad, you can probably work well with this kind of automated assistant.

I want to focus on how well the current iteration of ChatGPT and its peers perform for people who are newer to programming. If you’re just learning to use code to solve problems, and aren’t able to confidently assess the appropriateness of suggested solutions, are the suggestions that ChatGPT makes reliable? To answer this I’ll take the question from the last post about ChatGPT, where I asked it how to get the index variable in a simple for loop, and ask it to critique its own suggestions.

A brief context: reporting winners

I want to choose a question that has a fairly obvious correct answer, but might have some other possible suggestions as well. Simple for loops in Python don’t have an index variable, because Python automates the indexing work for you. What about the times where you want to work with each item’s index inside a loop?

Imagine a list of people who finished a running race. We want to display a number next to each winner representing the place they finished in. Here’s a simple for loop to display the winners, without any numbers:

    # winners.py
    winners = ["elizabeth", "ryan", "kayla"]

    print("The winners are:")
    for winner in winners:
        print(winner.title())

Here’s the output:

    The winners are:
    Elizabeth
    Ryan
    Kayla

But we’d like it to look like this:

    The winners are:
    1. Elizabeth
    2. Ryan
    3. Kayla

You could write your own counter variable to generate this output, but it would be nicer to just use the index of each item. Imagine for a moment that we don’t know how to do this already; we’ve only ever been introduced to simple for loops in Python, as shown above.
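For comparison, the hand-written counter mentioned above might look something like this. It’s only a sketch; the filename and the `place` variable are made up for illustration.

```python
# winners_counter.py (hypothetical filename)
winners = ["elizabeth", "ryan", "kayla"]

print("The winners are:")

# Manage the counter by hand: start at first place,
# and bump it after each winner is printed.
place = 1
for winner in winners:
    print(f"{place}. {winner.title()}")
    place += 1
```

This works, but the counter has to be initialized and incremented manually, which is exactly the bookkeeping we’d like Python to handle for us.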

ChatGPT’s initial response

ChatGPT’s responses are highly dependent on the prompts you provide. The responses are also not entirely consistent; you can provide the exact same series of prompts in different sessions, and get quite different suggestions. In this session I’m writing my prompts as phrases, the way someone who’s been using a search engine like Google for a long time might.

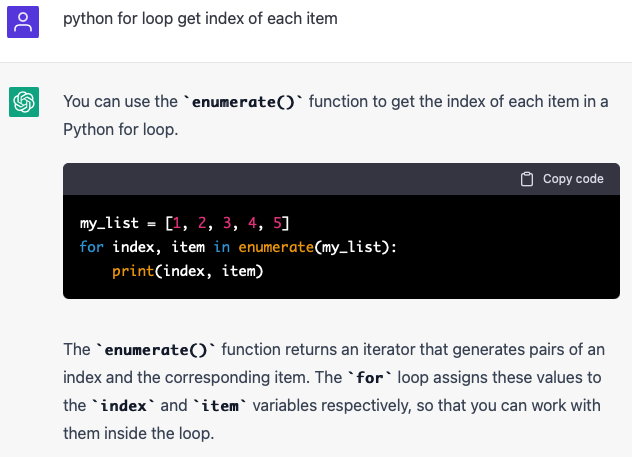

Here’s the first interaction with ChatGPT for this session:

The suggestion here works, and it’s the most appropriate solution to this prompt. Here’s our program, using this suggestion:

    # winners_ranked.py
    winners = ["elizabeth", "ryan", "kayla"]

    print("The winners are:")
    for index, winner in enumerate(winners):
        place = index + 1
        print(f"{place}. {winner.title()}")

And here’s the output, with the place of each finisher clearly displayed:

    The winners are:
    1. Elizabeth
    2. Ryan
    3. Kayla

But what if we didn’t know that this was the most appropriate solution, and wanted to follow up on this question? We’ll do that in a moment, but first let’s talk about idiomatic code.
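One small aside before moving on: `enumerate()` accepts an optional `start` argument, so the separate `place` variable isn’t strictly needed. Here’s a minimal variant of the program above; the filename is hypothetical, and this is just one way to tidy it up.

```python
# winners_ranked_start.py (hypothetical filename)
winners = ["elizabeth", "ryan", "kayla"]

print("The winners are:")

# start=1 makes the count begin at 1 instead of 0,
# so each value can be used directly as the finishing place.
for place, winner in enumerate(winners, start=1):
    print(f"{place}. {winner.title()}")
```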

What is “idiomatic” code?

Once you start learning to program, you’ll hear the phrase “idiomatic code” used when talking about whether a piece of code is appropriate for a given situation. When evaluating code, we often look at a few critical factors:

Does the code work? (Does it generate the correct results?)

Is the code efficient enough for the current workload? (Does it run fast enough?)

Is the code maintainable? (Would other programmers familiar with this language understand what you’ve written?)

That last point is really important, and idiomatic code is much more maintainable than code that’s not idiomatic. Every programming language is designed so that its features are used in particular ways to solve particular kinds of problems. When you use your language’s features in a way that’s suited to the given problem, we call that idiomatic code. Idiomatic code tends to be concise, easy to verify, and easy to build upon. Idiomatic code does not always look correct to someone familiar with another language.

To understand this a little better, imagine someone who’s very experienced using Java. They’ve built a number of working projects in Java, and they’ve maintained and optimized those projects over a period of time. They decide to learn Python, and because they already know how to program in another language they learn Python’s syntax pretty quickly. Often, someone in this situation will write Python code that still looks like Java. They’re unintentionally translating the Java solutions they think of into Python syntax, instead of considering how to best solve the problem using all that Python offers. Their code is syntactically correct, but it’s not idiomatic.

To be clear, this phenomenon happens just as much in reverse. Experienced Python programmers will learn Java quite quickly, and then try to write Pythonic solutions in Java. Experienced Java programmers will review this code and find it inelegant and unmaintainable. Whatever language you’re working in, strive to learn that language’s ways of thinking, and aim to write idiomatic code with that language.

ChatGPT can be like these competent programmers who have just learned a new language. Its code is almost always syntactically correct, but can be very much not idiomatic.
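To make the distinction concrete, here’s a small hypothetical illustration. It’s not from the ChatGPT session, and the variable names are made up for this sketch. Both halves build the same list of title-cased names; the first is written the way someone translating an index-driven habit from another language might write it, and the second uses a list comprehension.

```python
names = ["elizabeth", "ryan", "kayla"]

# Translated style: manage the index by hand and append one item at a time.
titled_translated = []
i = 0
while i < len(names):
    titled_translated.append(names[i].title())
    i += 1

# Idiomatic style: let Python handle the iteration.
titled_idiomatic = [name.title() for name in names]

print(titled_translated == titled_idiomatic)
```

Both versions are syntactically correct and produce the same list, but the second is shorter and easier to verify at a glance.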

A followup question

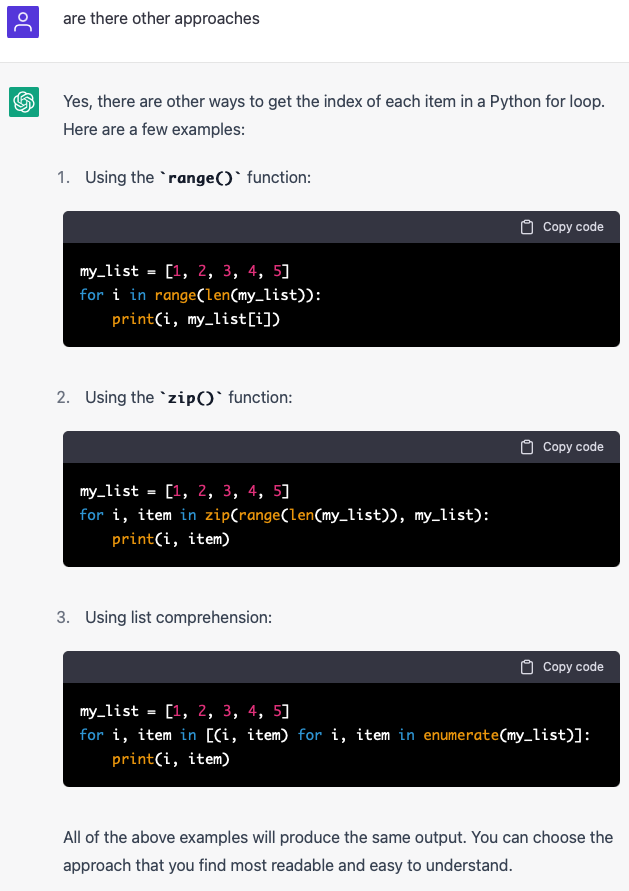

Putting myself in the mindset of a person who’s newer to Python but aware of the issue of idiomatic code, I wanted to ask ChatGPT if there are other ways of approaching this question. Here’s my followup, in the same chat session:

This is…not very good. The first suggestion, to use range(), is not appropriate at all in Python. This is the approach to looping that’s used in C-style languages. This is an approach where you need to manage the entire loop indexing yourself. Python was written specifically to get away from requiring every programmer to write this kind of code. One of my very first posts was all about how nice it was to get away from writing this kind of code.
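ChatGPT’s exact code appears only in the screenshot, so what follows is a reconstruction of what a `range()`-based loop typically looks like for our example, not a transcript of its output.

```python
winners = ["elizabeth", "ryan", "kayla"]

print("The winners are:")

# C-style indexing: generate the indexes ourselves,
# then use each index to look up the matching item.
for i in range(len(winners)):
    winner = winners[i]
    print(f"{i + 1}. {winner.title()}")
```

Notice how much of this loop is bookkeeping: building the range of indexes, then using each index to fetch the item that a simple for loop would have handed us directly.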

The second suggestion, using zip(), is not much better. This is like writing your own implementation of enumerate() on the spot. zip() is a very useful function to know about, but it’s not appropriate at all to use in this situation.
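Again, this is a reconstruction rather than ChatGPT’s exact code, but a `zip()`-based version of our loop would look roughly like this:

```python
winners = ["elizabeth", "ryan", "kayla"]

print("The winners are:")

# Pair each index with its item by zipping a range of
# indexes against the list. This rebuilds what
# enumerate(winners) already provides.
for i, winner in zip(range(len(winners)), winners):
    print(f"{i + 1}. {winner.title()}")
```

The pairs produced by `zip(range(len(winners)), winners)` are the same pairs that `enumerate(winners)` produces, which is why this reads like a homemade `enumerate()`.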

The third suggestion, although syntactically valid, is utter garbage. It’s using enumerate() in a comprehension that we then loop over to pull out the results of the enumeration. Here’s what this kind of loop would look like in our example:

    for i, winner in [(i, winner) for i, winner in enumerate(winners)]:
        print(f"{i+1}. {winner.title()}")

How many times do we have to write i, winner in one line?! I can’t imagine any language where this is a remotely appropriate way to solve this problem. Some people do try to write overly complex code, thinking it will make it harder for their company to fire them. Maybe this suggestion will appeal to those kinds of people?
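To see why the comprehension adds nothing, here’s a quick check (the variable names are made up for this sketch). The comprehension unpacks each pair from `enumerate()` and immediately repacks it, so the resulting list is identical to what `enumerate()` gives you directly.

```python
winners = ["elizabeth", "ryan", "kayla"]

# The comprehension from the third suggestion, pulled out on its own.
pairs_from_comprehension = [(i, winner) for i, winner in enumerate(winners)]

# The same pairs, taken straight from enumerate().
pairs_direct = list(enumerate(winners))

print(pairs_from_comprehension == pairs_direct)  # True
```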

The real problem with ChatGPT’s output

The code suggestions are not the real problem with ChatGPT’s overall response. The biggest problem is its assessment of its own suggestions:

All of the above examples will produce the same output. You can choose the approach that you find most readable and easy to understand.

On the surface, this might look reasonable. The first statement is correct; yes, all of these approaches do generate the same output. The second statement is problematic because it glosses over the fact that all of these approaches will make you stand out as someone who does not really understand Python.

This is part of why we’re seeing a lot of praise for ChatGPT from experienced programmers. If you can skim all of this and quickly recognize the best approach, and not be misled by any of the other suggestions, then you might work faster with ChatGPT as an assistant. But if you’re newer to programming, considering all of these other options is not trivial, and not at all obvious. Newer programmers may think the enumerate() solution is the “most readable and easy to understand solution”, but they also might be thrown off by the use of a function in a for loop. Maybe they’ve spent some time working with a C-like language and the index-based solutions look more “readable” to them. In this case, ChatGPT is an unreliable assistant not because it’s overconfident, but because it’s too noncommittal.

It gets worse

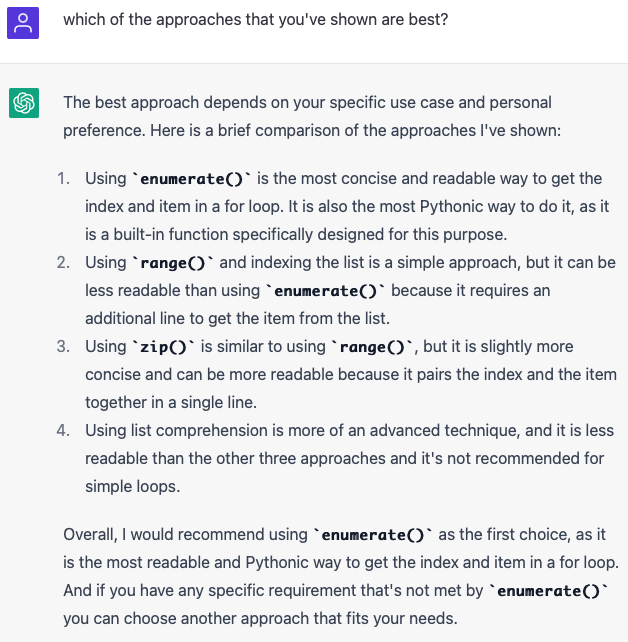

I wanted to ask ChatGPT one more followup, to see if it could clarify which is the best approach:

This comparison is pretty unsound, and potentially quite misleading. It correctly states that enumerate() is the “most concise and readable” approach, and even uses the word “Pythonic”. It also calls the range() approach “less readable”, which is an appropriate assessment.

However, it suggests that the zip() approach is “slightly more concise and can be more readable” than the previous, not very appropriate solution using range(). Making this favorable comment about the zip() solution is a stretch.

The fourth point, calling the convoluted list comprehension approach “more of an advanced technique”, is entirely misleading. People newer to a language should be on the lookout for advanced techniques, so they can be aware of them and start to use them as they’re able to. ChatGPT’s wording suggests that people should consider trying to understand this approach, and be ready to use it when appropriate. But this approach is literally never appropriate.

Conclusions

As I’ve said before, ChatGPT and its peers are not going away. We’re going to see more of these tools, and we’re going to see a variety of claims made about them. I think we need to be careful to help people know when to use these tools, and when to avoid getting distracted or misled by them.

I’m all for tools and resources that support independent learning. But broad claims by experienced developers that today’s AI tools can help everyone learn sound quite similar to the proclamation ten years ago that MOOCs would make all other learning resources obsolete.[1] There’s a place for ChatGPT in some workflows, but people newer to programming and newer to a specific language need to be careful how they interpret the output of these assistants. Teachers should also be aware that simply giving students access to AI tools does not let them learn anything they want entirely independently. You really do need a baseline of relevant knowledge in order to make sense of the output these tools generate.

One last note, to go beyond the use of ChatGPT specifically for learning. Some people are building tools and companies based on heavy use of ChatGPT that will prove to be quite useful in the long run. I’m fairly confident in saying there are others out there who are building tools and companies that will fail quite hard, because they’re not being critical enough when working with ChatGPT’s responses.

If you’re working with ChatGPT in anything beyond an exploratory capacity, make sure you’re critically evaluating the suggestions it’s making.

Resources

You can find the code files from this post in the mostly_python GitHub repository.

[1] Many people may not realize the level of educational trauma that exists in the tech world. I’ve spoken about education often at technical conferences, and many people have approached me to share stories from their educational experiences. For example, one person shared that they did really well in math when they started first grade. They completed their entire first grade workbook in a few days, and proudly turned it in. The teacher accused them of cheating and made them do it again. This person was surprised, but thought, “I’ll do it again, and then they’ll believe me.” They did it again, even faster because they already knew what to expect. The teacher didn’t accuse them of cheating again, but also did not respond positively. I forget exactly what the response was, but it was something that made the student repeat the same work many times, instead of letting them move on.

It’s easy to shrug this kind of experience off and say we’ve all had to deal with experiences like this at school. But when people have this experience early in life, and multiple times over the years, it can have a traumatizing impact. This is especially true if bullying is a factor in students’ lives, which it often is. I can understand why many people would be eager to see students given more independence from teachers.