Time deltas are not intuitive

MP 152: But the timedelta implementation in Python is correct.

In many projects, timestamps are just a bit of metadata you might look at once in a while when troubleshooting. But sometimes, timestamps are a critical piece of information. If you handle them right, your project works. If you don't, your project falls apart, and sometimes with serious consequences.

I'm currently working on a time-tracking app. This is a perfect example of a project where managing timestamps is critical to the success of the entire project. I recently faced a bug in calculating durations, where a misunderstanding of the timedelta object led to some confusing behavior. A better understanding of how Python handles differences in timestamps led to a fix, and gave me a much better mental model of how Python works with time internally.

Establishing timestamps

In this project, I'm modeling work sessions. Every session has a start time and an end time. I like to use the prefix ts_ for everything that's a timestamp; it's a nice visual cue in a large codebase that a variable refers to an actual timestamp.

Imagine we start a work session at 8:00 in the morning. Here's the timestamp for the start of this session:

>>> import datetime as dt >>> ts_start = dt.datetime(2025, 9, 1, 8)

This creates a timestamp for 8:00 in the morning, on September 1, 2025. A quick way to verify this is to look at the timestamp in ISO format:

>>> ts_start.isoformat() '2025-09-01T08:00:00'

You can also show the timestamp any way you want using format codes:

>>> ts_start.strftime("%I:%M %p %B %d, %Y") '08:00 AM September 01, 2025'

If you don't like those leading zeroes, you can do as much work as you want when converting a timestamp to a presentable string:

>>> ts_str = ts_start.strftime("%I:%M %p %B ").lstrip("0") >>> ts_str '8:00 AM September ' >>> ts_str += ts_start.strftime("%d, %Y").lstrip("0") >>> ts_str '8:00 AM September 1, 2025'

There are all kinds of ways to build the string you want to display to end users. The point here is that there's a distinction between how a timestamp is stored, and how it's presented.

We've built some presentable display strings, but the data is still stored as a datetime object:

>>> ts_start datetime.datetime(2025, 9, 1, 8, 0)

This is exactly how time should be managed in a project; the storage of time-based data is separate from the presentation of that data.

Getting back to work sessions, let's end the session at 10:30 for a coffee break:

>>> ts_end = dt.datetime(2025, 9, 1, 10, 30) >>> ts_end.strftime("%I:%M%p, %B %d, %Y") '10:30AM, September 01, 2025'

This is a good bit of sample data to work with when first building a project. We know this session should have a duration of exactly two and a half hours.

Calculating durations

Python makes it "easy" to calculate durations, by letting you find the difference between two timestamps. Here's what that looks like:

>>> duration = ts_end - ts_start >>> duration datetime.timedelta(seconds=9000)

This is great! We subtract two datetime objects, and we get something called a timedelta object. That timedelta object seems to be represented in seconds, which is easy enough to work with. There are 3600 seconds in an hour, so we should be able to get a duration in hours by dividing duration by 3600:

>>> duration.seconds / 3600 2.5

This works, as expected. In a summary page about the work session that was just completed we can show the start time, the end time, and the duration:

>>> start_str = ts_start.strftime("%I:%M %p %B %d, %Y") >>> end_str = ts_end.strftime("%I:%M%p, %B %d, %Y") >>> duration_str = str(duration.seconds /3600) >>> msg = f"Start: {start_str}\nEnd: {end_str}\nDuration: {duration_str} hours" >>> print(msg) Start: 08:00 AM September 01, 2025 End: 10:30AM, September 01, 2025 Duration: 2.5 hours

When used in a terminal app, a GUI, or a web app, this is a nice start to a time-management tool.

Longer durations

This was all working well for me as I was starting to build my project. Most of my sample work sessions were really short, with the longest being a couple hours. I kept some sessions running over a period of days, however, and I noticed a problem. A session that spanned several days had a duration of just a few hours.

Let's see if we can recreate that. We'll start with the same timestamp as earlier:

>>> ts_start = dt.datetime(2025, 9, 1, 8)

And we'll keep the ending time at 10:30, but move it to the next day:

>>> ts_end = dt.datetime(2025, 9, 2, 10, 30)

Now the duration should be one day, plus two and a half hours. The duration should come out to 26.5 hours:

>>> duration = ts_end - ts_start >>> duration.seconds / 3600 2.5

What?! The duration is still just 2.5 hours! What's going on here?

Normalization

The key to fixing this bug is to understand how the datetime library normalizes durations. When you create a timedelta object, you can pass it any arguments you want in order to define a duration.

For example, here's how we'd manually create a duration of 2.5 hours, without reference to any start or end times:

>>> duration = dt.timedelta(hours=2, minutes=30) >>>

This line has no output, which means there were no errors; a timedelta object was successfully created, using 2 hours and 30 minutes. Let's see what was stored:

>>> duration datetime.timedelta(seconds=9000)

All that's actually stored for a duration of 2.5 hours is the equivalent number of seconds.

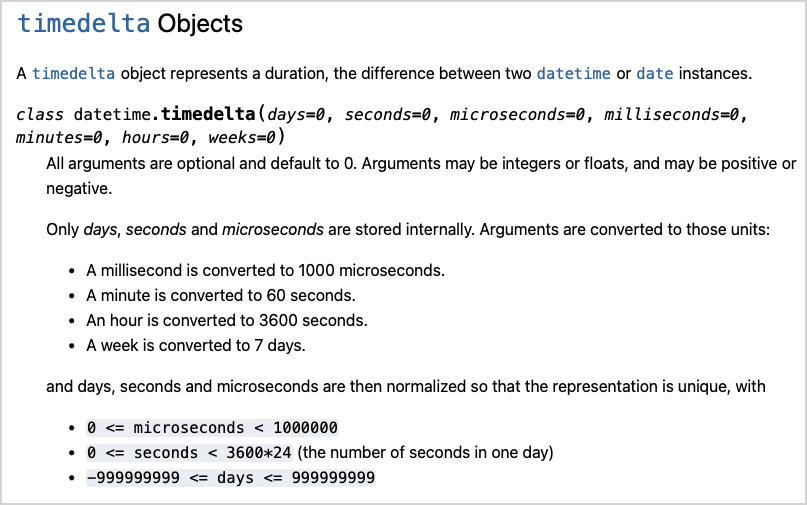

This is because of normalization. The datetime library converts all durations to a unique combination of days, seconds, and microseconds. Here's what the documentation says:

timedelta documentation is really worth reading through if you're building anything where timestamps and durations are a critical part of your work.You can use microseconds, milliseconds, seconds, minutes, hours, days, and weeks to define timedelta objects. But no matter how they're created, all timedelta instances only keep days, seconds, and milliseconds.

Fixing the bug

With a better understanding of how timedelta objects are implemented, we can fix the bug.

Let's look at that longer work session, but this time we'll take a moment to look at the actual timedelta object before converting it to our own format:

>>> ts_start = dt.datetime(2025, 9, 1, 8) >>> ts_end = dt.datetime(2025, 9, 2, 10, 30) >>> duration = ts_end - ts_start >>> duration datetime.timedelta(days=1, seconds=9000)

That's much clearer! When I saw that two and a half hours had been converted to seconds, I assumed all timedelta objects were stored as seconds. As someone who doesn't spend too much time thinking about how we represent time, that wouldn't be surprising. After all, we often hear timestamps spoken of in relation to Unix time, where every timestamp is represented as the number of seconds since January 1, 1970.

Since this timestamp is longer than a day, part of the duration is stored in the days attribute. We can get an accurate duration by including 24 hours for every day in the duration:

>>> (duration.days * 24) + (duration.seconds / 3600) 26.5

The first part of this expression handles any whole days that are included in the duration, and the second part converts partial days from seconds to hours. The parentheses aren't required here, but I like to use them for readability.

This calculation still works for durations less than a full day, because days is set to 0 in those cases.

Reading through more of the documentation, there's also a total_seconds() method you can call on timedelta objects. So, a simpler calculation for duration is this:

>>> duration.total_seconds() / 3600 26.5

This is what I originally thought the seconds attribute represented.

Why normalize timedelta objects?

Why did the people who wrote the datetime module do all this internal work to convert timedelta objects so they only store three attributes? One big reason is uniqueness. Two timedelta objects that represent different durations should be unique. But two objects that represent the same duration should be identical internally, even if different arguments were used to define those durations.

For example, there are a bunch of ways to represent two and a half hours. Consider just two:

>>> duration_a = dt.timedelta(hours=2, minutes=30) >>> duration_b = dt.timedelta(minutes=150)

Two and a half hours is 150 minutes. These two times should be equal. Let's see if they are:

>>> duration_a == duration_b True

Even though these two durations were defined using different representations of two and a half hours, they both come out equal when compared against each other. You could implement an __eq__() method that does a conversion and returns True if the durations are in fact equal, while retaining different internal representations of the two durations. But that would be quite inefficient. The datetime library doesn't care about how you represent time; all it cares about is accurately modeling and storing time-related data.

Another reason for converting all timedelta instances into consistent, unique representations is efficiency. Let's see how quickly Python builds a timedelta object representing two and a half hours, when we give it hours and minutes:

$ python3.13 -m timeit "import datetime as dt; duration = dt.timedelta(hours=2, minutes=30)" 500000 loops, best of 5: 502 nsec per loop

It takes about 500 nanoseconds to create the timedelta instance. Now let's define the same duration, using only minutes:

$ python3.13 -m timeit "import datetime as dt; duration = dt.timedelta(minutes=150)" 1000000 loops, best of 5: 383 nsec per loop

Python has less work to do here, and it creates the instance about 1.3 times faster. Now let's just define the same duration using only seconds:

$ python3.13 -m timeit "import datetime as dt; duration = dt.timedelta(seconds=9000)" 1000000 loops, best of 5: 308 nsec per loop

This is a speedup of about 1.6x.

Profiling is much more subtle than most people realize, however! Some of this time isn't really about representing time, it's about how Python handles arguments. If you use positional-only arguments, you get even more of an efficiency boost:

$ python3.13 -m timeit "import datetime as dt; duration = dt.timedelta(0, 9000)" 1000000 loops, best of 5: 230 nsec per loop

Without having to parse keyword arguments, this call is about 2.2 times faster than the original one.

Any time you're trying to optimize some code, make sure you profile your code and challenge any assumptions anyone makes about the causes of inefficiency and efficiency. The point here is that the datetime module is optimized for accuracy in modeling time-related data, and efficiency in working with that data.

Negative durations

If you've read this far, it's worth pointing out how negative durations are represented. I can't work on a time-related project without making this kind of mistake once in a while:

>>> ts_start = dt.datetime(2025, 9, 1, 8) >>> ts_end = dt.datetime(2025, 9, 1, 10, 30) >>> duration = ts_start - ts_end >>> duration.seconds / 3600 21.5

Here I've gone back to the example of a short work session that starts and ends on the same day. I've also gone back to using seconds for the moment, assuming a duration less than 24 hours. The duration here should be 2.5 hours, but it comes out to 21.5 hours! Why is that?!

I accidentally typed ts_start - ts_end, instead of the other way around. It's important to recognize that this is more than just a simple typo. In conversation, there's no difference between asking about the duration from when we started something to when we finished it, and asking about the duration from when we finished to when we started. We can easily think in terms of absolute durations.

But in programming, timedelta objects are a difference operation. This is true in most other languages as well. When you subtract a later time from an earlier time, you get a negative duration:

>>> duration = ts_start - ts_end >>> duration datetime.timedelta(days=-1, seconds=77400)

The datetime library uses the convention that negative durations are always indicated by a negative sign on the days attribute. This gives you a consistent way to check if a duration is positive or negative. However, it also means that the seconds attribute no longer represents the entire duration even for time periods less than 24 hours. The value for seconds needs to account for the negative sign in days. 77,400 seconds is 21.5 hours. The numbers in duration start to make sense if you recognize that 21.5 hours plus -24 hours is equal to -2.5 hours.

The two correct calculations shown earlier still work. Here's the calculation that uses days:

>>> (duration.days * 24) + (duration.seconds / 3600) -2.5

You'll also get the same result using the total_seconds() method:

>>> duration.total_seconds() / 3600 -2.5

When calculating the total duration, Python carries over the negative from the days attribute in the return value of total_seconds().

When dealing with time, there are a number of ways to validate that the data you're working with is correct. In my current project I have some validation that makes sure ts_end is never earlier than ts_start. But I also included another safeguard that raises an error if the calculated duration is negative.

Conclusions

Time is hard! There are way more subtleties than most people realize, until they start working with timestamps and durations in anything beyond the simplest use cases. I have a lifelong appreciation for all the people who spend time implementing, and documenting these details.

When you're working with time, make sure you read the documentation for the parts of the datetime library you're using. If you're using another library to model time, consult that library's documentation. Be really careful about rolling your own time-related logic. Consider edge cases and boundaries such as midnight, transitions between months and years, daylight savings, and others. Also, if you're presenting time anywhere, you almost certainly want to be handling timezones carefully. Finally, write tests that capture all these scenarios, even if some of them might not seem immediately relevant.